9/18/2023 Admin

Processing Large Amounts of Text in LLM Models

Writing fiction stories with large language models (LLMs) can be challenging, especially when the story spans multiple chapters or scenes. LLMs have a hard time processing large amounts of text because they have a limited context window. This means that they can only take into account a certain number of tokens (words or pieces of words) at a time.

This causes them to ‘forget’ information outside of their context window, such as the names, traits, and actions of the characters. This is problematic if you are using an LLM to write fiction stories because characters will suddenly disappear from the story or a character who has passed away in a previous chapter will suddenly be alive in the next one.

In this blog post, we will explore how to resolve this by using a vector database. A vector database will allow an entire book to be stored and the LLM will be able to search this vector database and use it as its unlimited memory.

The Sample Application

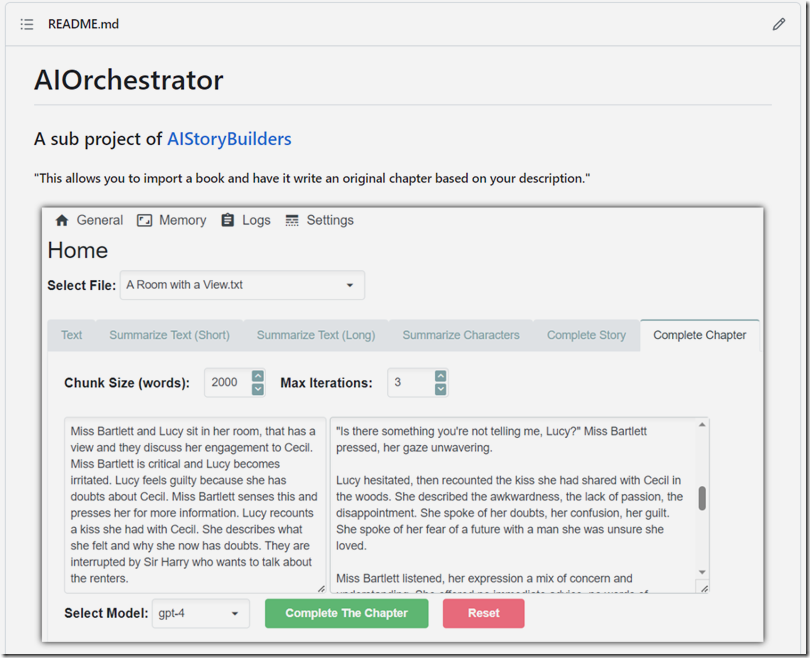

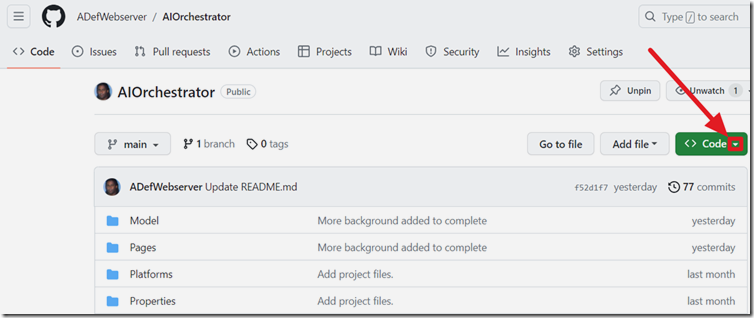

The sample application, located at https://github.com/ADefWebserver/AIOrchestrator, allows you to import a book and have the LLM write an original chapter based on your description. It also contains other features that let you explore and test using the ChatGPT LLM to process large amounts of text.

Download and Configure

Download the code for the application from https://github.com/ADefWebserver/AIOrchestrator and open it in Visual Studio.

Ensure you have the MAUI workload and at least .NET 7 (net7.0) installed.

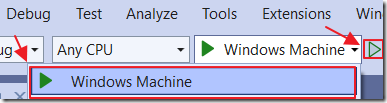

Run the application (note: it will run only on Windows).

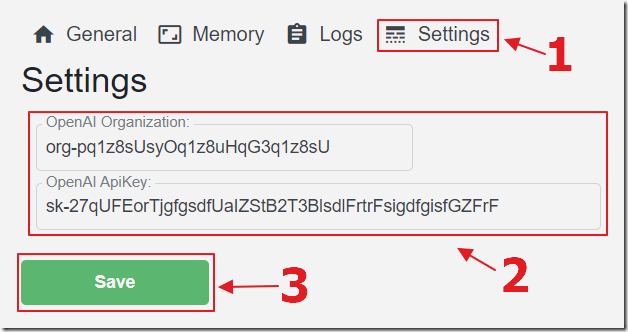

After opening the program, the first step is to click the Settings button and set your OpenAI keys.

If you don’t already have an OpenAI account you can get one here: https://platform.openai.com/signup?launch

After you create your account…

- You can get your Organization key here: https://platform.openai.com/account/org-settings

- You can create your API key here: https://platform.openai.com/account/api-keys

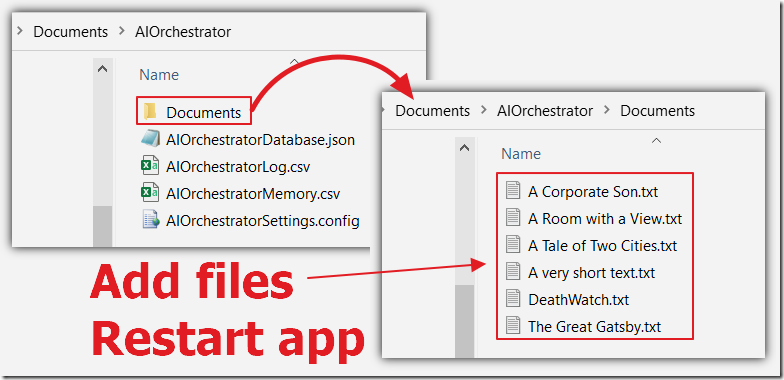

You can add your own files to the app by adding them to the [Your User Name]/Documents/AIOrchestrator/Documents directory and restarting the app.

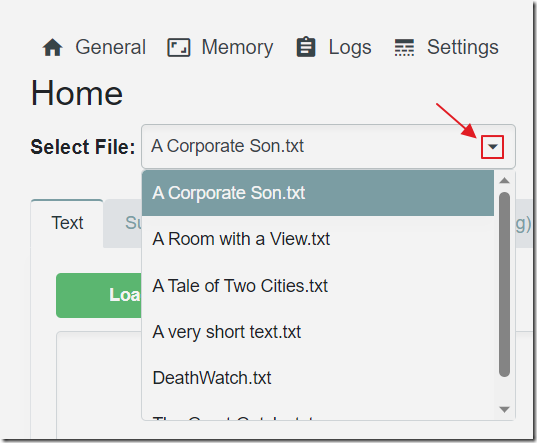

This will allow your text story to be selected in the Select File dropdown and used in the application.

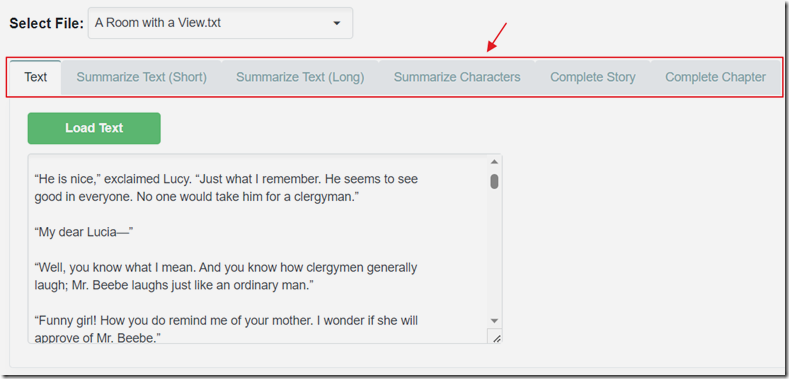

The Selected File is used as the file source for each tab that provides the following functionality:

- Text – Loads the text of the selected file so you can see the contents

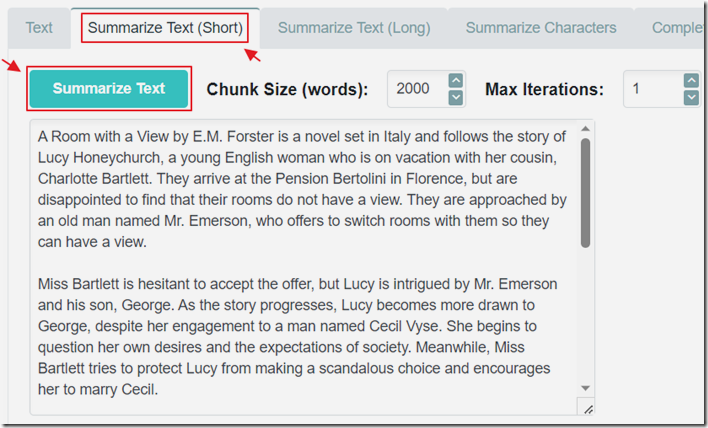

- Summarize Text (Short) – Creates a summary of the text by continually re-summarizing the current summary as the text is processed

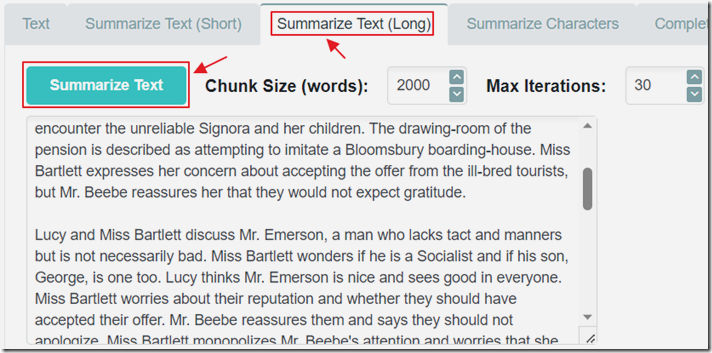

- Summarize Text (Long) – Creates a summary of the text by simply creating a summary of each section as the text is processed

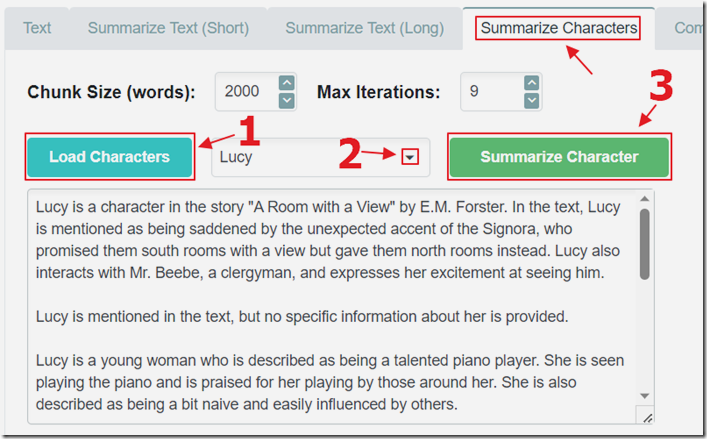

- Summarize Characters – Detects the characters in the text and, after you select one character, produces a summary of that character’s actions in the text

- Complete Story – Allows you to write the start of a paragraph and the AI will complete the paragraph based on the contents of the text

- Complete Chapter – Allows you to write an outline of a chapter and the AI will write that chapter based on the contents of the text

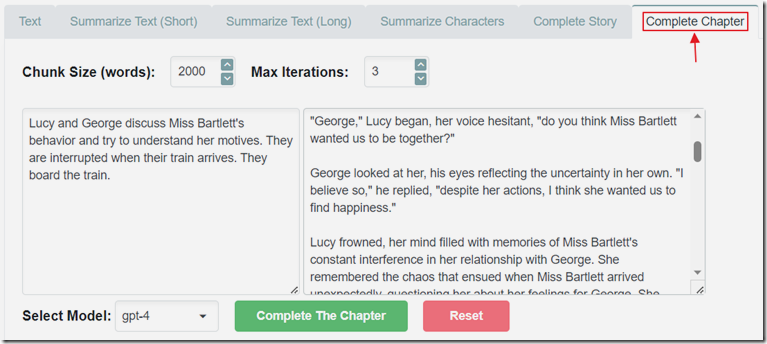

Complete Chapter

The application is mostly a proof of concept that demonstrates strategies for processing large amounts of text using an LLM. The most advanced example is on the Complete Chapter tab.

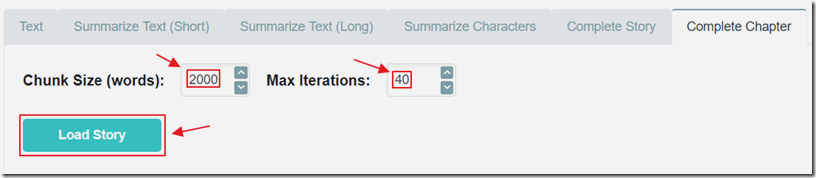

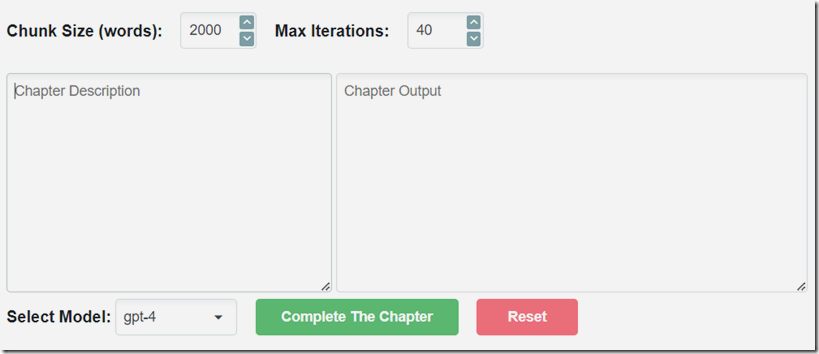

As with most of the other tabs, you select the Chunk Size, which indicates how many words are read at one time, and Max Iterations, which indicates how many sections are read and stored in a vector database that is kept in a text file.

If the Chunk Size is too small the sections of the text that are later fed to the LLM may be too small, and the LLM may not have the context to understand their meaning. Making the Chunk Size too big may create sections of text that contain a lot of irrelevant information and use up valuable space in the limited context window that the LLM has.
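The effect of these two settings can be sketched as follows. This is a minimal illustration, not the application's code: the `TextChunker` class and `SplitIntoChunks` method are hypothetical names, and the sketch assumes that words are separated by whitespace.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TextChunker
{
    // Splits text into chunks of at most chunkSize words and stops after
    // maxIterations chunks. The parameters mirror the Chunk Size and
    // Max Iterations settings; the method itself is hypothetical.
    public static List<string> SplitIntoChunks(string text, int chunkSize, int maxIterations)
    {
        if (chunkSize <= 0) throw new ArgumentOutOfRangeException(nameof(chunkSize));

        // Split on whitespace and drop empty entries
        string[] words = text.Split(
            new[] { ' ', '\t', '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries);

        var chunks = new List<string>();
        for (int i = 0; i < words.Length && chunks.Count < maxIterations; i += chunkSize)
        {
            chunks.Add(string.Join(" ", words.Skip(i).Take(chunkSize)));
        }
        return chunks;
    }
}
```

With a Chunk Size of 2 and Max Iterations of 2, the text "a b c d e" would yield the chunks "a b" and "c d", and the final word would never be read.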

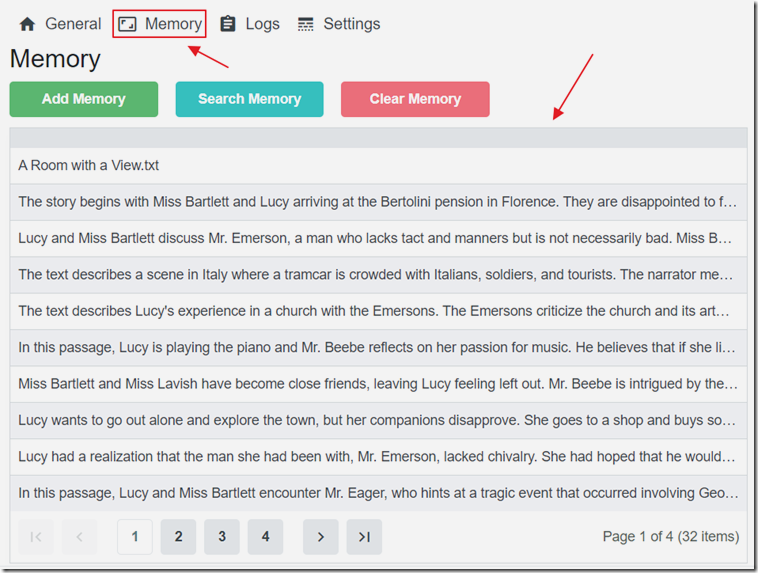

If we click the Memory button, we can see that a summary of the story has been created for each chunk processed.

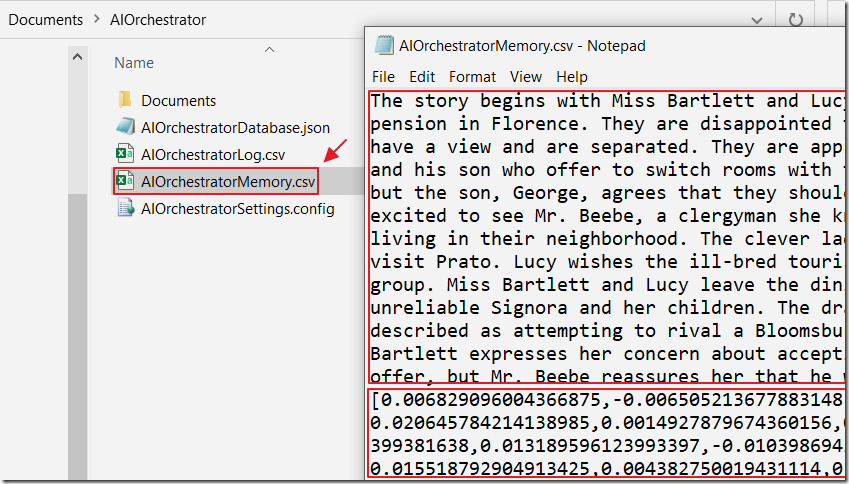

If we look at the database text file that was created, we see that each summary chunk is paired with its embedding, a vector of floating-point numbers that represents that text.
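Reading one entry back from that file might look like the sketch below. It assumes each line has the form `summary text|[v1,v2,...]`, which is the format written by the CreateVectorEntry method shown later in this post, and that the numbers use a period as the decimal separator. The `MemoryLineParser` class is a hypothetical name used only for illustration.

```csharp
using System;
using System.Globalization;
using System.Linq;

public static class MemoryLineParser
{
    // Parses one "summary|[v1,v2,...]" line into its text and embedding.
    public static (string Text, float[] Embedding) Parse(string line)
    {
        // The summary itself may contain '|', so split on the last one
        int separator = line.LastIndexOf('|');
        if (separator < 0) throw new FormatException("No '|' separator found.");

        string text = line.Substring(0, separator);
        string vectorPart = line.Substring(separator + 1).Trim().TrimStart('[').TrimEnd(']');

        // Assumption: values were written with '.' as the decimal separator
        float[] embedding = vectorPart
            .Split(',', StringSplitOptions.RemoveEmptyEntries)
            .Select(v => float.Parse(v, CultureInfo.InvariantCulture))
            .ToArray();

        return (text, embedding);
    }
}
```

A line-based file like this only works if a summary never contains a line break, so a sketch like this would need extra handling for multi-line summaries.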

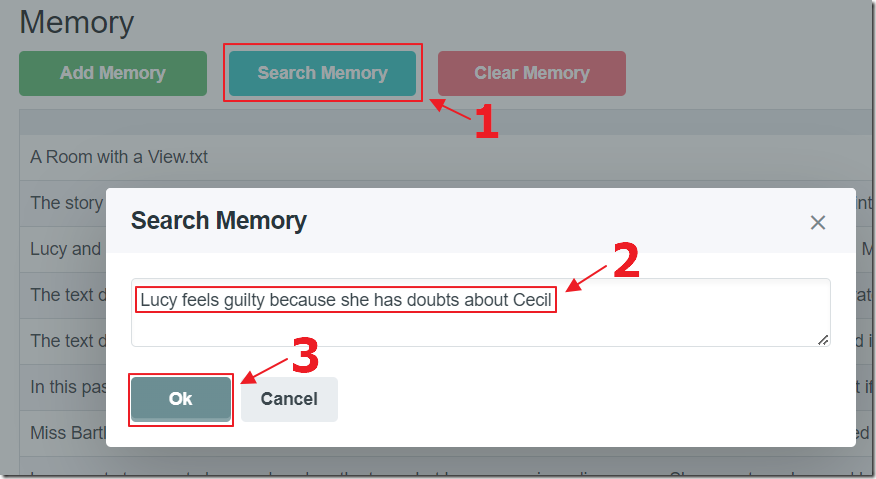

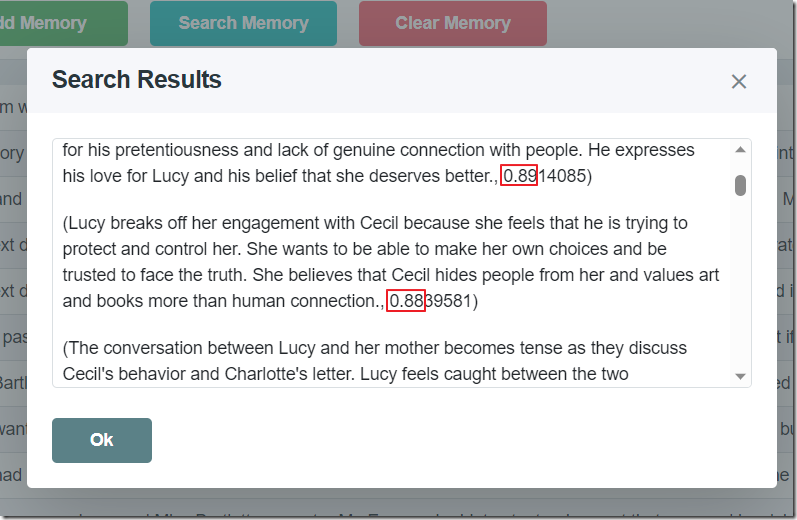

We can click the Search Memory button to open a dialog that allows us to enter a search term.

The search term will be converted into an embedding, which is a vector of numbers. That vector is compared against the embedding of each chunk of summary text, and the top matching summary sections are returned. This is the process that is performed to provide the LLM with background text to use when writing the chapter.
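One common way to compare two embeddings is cosine similarity. The sketch below assumes that measure; the post does not state which calculation the application uses, and the `MemorySearch` class and its method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class MemorySearch
{
    // Cosine similarity: 1 means same direction, 0 means unrelated.
    public static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += (double)a[i] * b[i];
            normA += (double)a[i] * a[i];
            normB += (double)b[i] * b[i];
        }

        // Treat an all-zero vector as having no similarity to anything
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }

    // Returns the text of the 'count' entries most similar to the query.
    public static List<string> TopMatches(
        float[] query,
        IEnumerable<(string Text, float[] Embedding)> memory,
        int count)
    {
        return memory
            .OrderByDescending(m => CosineSimilarity(query, m.Embedding))
            .Take(count)
            .Select(m => m.Text)
            .ToList();
    }
}
```

Scanning every entry like this is fine for a single book's worth of summaries; a much larger collection would usually call for an indexed vector store.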

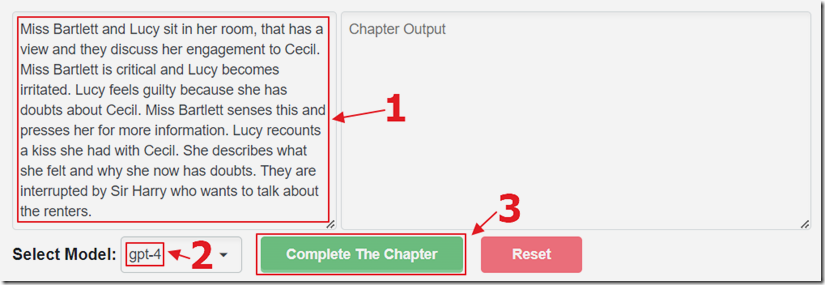

You can return to the Complete Chapter tab and after selecting the same text in the Selected File dropdown, you will then be presented with a screen that asks you to enter a description of the chapter you want written.

This can be a new chapter that takes place at any point of the story.

Enter the text that describes the outline for the chapter, select an OpenAI model (gpt-4 provides the best results, but is the slowest and costs the most) and click the Complete The Chapter button.

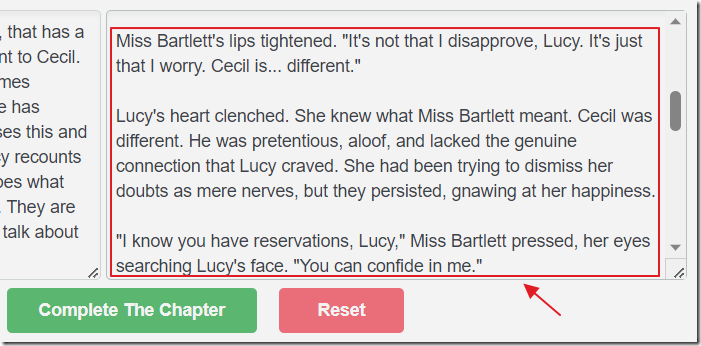

An original chapter will be created by the LLM and will display in the right-hand text box.

The Experiments

One of the challenges of using a large language model (LLM) to complete a story is to provide it with enough context. If the story is an entire book, you need to summarize a large amount of text and convey the main plot, characters, and setting. However, you have a limited number of tokens in your ‘context window’.

This sample application was created to explore some strategies for addressing this.

Summarize Text (Short)

The first example creates a single summary of the text no matter how many iterations are passed to it.

The key code is contained in this file: https://github.com/ADefWebserver/AIOrchestrator/blob/main/Model/OrchestratorMethods.SummarizeTextShort.cs

The following code constructs the prompt that is sent to the LLM:

    private string CreateSystemMessage(string paramCurrentSummary, string paramNewText)
    {
        // The AI should keep this under 1000 words but here we will ensure it
        paramCurrentSummary = EnsureMaxWords(paramCurrentSummary, 1000);

        return "You are a program that will produce a summary not to exceed 1000 words. \n" +
            "Only respond with the contents of the summary nothing else. \n" +
            "Output a summary that combines the contents of ###Current Summary### with the additional content in ###New Text###. \n" +
            "In the summary only use content from ###Current Summary### and ###New Text###. \n" +
            "Only respond with the contents of the summary nothing else. \n" +
            "Do not allow the summary to exceed 1000 words. \n" +
            $"###Current Summary### is: {paramCurrentSummary}\n" +
            $"###New Text### is: {paramNewText}\n";
    }

You will find, when experimenting with the code, that it does well on fewer than four 2,000-word chunks, but degrades significantly when you have a larger collection of chunks.
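The overall loop behind this tab can be sketched as follows. This is an illustration under stated assumptions: `EnsureMaxWords` here is a hypothetical stand-in for the application's helper of the same name (its real implementation is not shown in this post), and the `summarize` delegate stands in for the asynchronous call to the LLM.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RollingSummary
{
    // Hypothetical stand-in: keeps only the first maxWords words.
    public static string EnsureMaxWords(string text, int maxWords)
    {
        string[] words = text.Split(
            new[] { ' ', '\t', '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries);
        return words.Length <= maxWords ? text : string.Join(" ", words.Take(maxWords));
    }

    // Folds every chunk into one running summary. The summarize delegate
    // receives (currentSummary, newText) and returns the combined summary.
    public static string Summarize(
        IEnumerable<string> chunks,
        Func<string, string, string> summarize,
        int maxWords = 1000)
    {
        string currentSummary = "";
        foreach (string chunk in chunks)
        {
            currentSummary = EnsureMaxWords(summarize(currentSummary, chunk), maxWords);
        }
        return currentSummary;
    }
}
```

Because the summary can never grow past the word limit, every new chunk has to push older details out, which is one plausible reason the results degrade as the number of chunks grows.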

Summarize Text (Long)

The second example creates a summary of each chunk that is passed to it. It does not attempt to create an overall summary.

The key code is contained in this file: https://github.com/ADefWebserver/AIOrchestrator/blob/main/Model/OrchestratorMethods.SummarizeTextLong.cs

The disadvantage of this method is that it produces too much data to be contained in the LLM’s context window. However, this is not an issue if the summary data is stored in a vector database where key pieces can later be retrieved.

The application stores each summary together with its embedding using the following code:

    private async Task CreateVectorEntry(string VectorContent)
    {
        // **** Call OpenAI and get embeddings for the memory text

        // Create an instance of the OpenAI client
        var api = new OpenAIClient(new OpenAIAuthentication(SettingsService.ApiKey, SettingsService.Organization));

        // Get the model details
        var model = await api.ModelsEndpoint.GetModelDetailsAsync("text-embedding-ada-002");

        // Get embeddings for the text
        var embeddings = await api.EmbeddingsEndpoint.CreateEmbeddingAsync(VectorContent, model);

        // Get embeddings as an array of floats
        var EmbeddingVectors = embeddings.Data[0].Embedding.Select(d => (float)d).ToArray();

        // Loop through the embeddings
        List<VectorData> AllVectors = new List<VectorData>();
        for (int i = 0; i < EmbeddingVectors.Length; i++)
        {
            var embeddingVector = new VectorData
            {
                VectorValue = EmbeddingVectors[i]
            };
            AllVectors.Add(embeddingVector);
        }

        // Convert the floats to a single string
        var VectorsToSave = "[" + string.Join(",", AllVectors.Select(x => x.VectorValue)) + "]";

        // Write the memory to the .csv file
        var AIOrchestratorMemoryPath = $"{Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments)}/AIOrchestrator/AIOrchestratorMemory.csv";
        using (var streamWriter = new StreamWriter(AIOrchestratorMemoryPath, true))
        {
            streamWriter.WriteLine(VectorContent + "|" + VectorsToSave);
        }
    }

Note: For more information on this process see: What Are Embeddings and Vectors (And Why Should I Care?)

Summarize Characters

While not used further in the example application, the Summarize Characters tab demonstrates a capability that the LLM has that can be used to address limitations discussed at the end of this blog post.

The code to identify the characters in the text is contained in the following file: https://github.com/ADefWebserver/AIOrchestrator/blob/main/Model/OrchestratorMethods.LoadCharacters.cs

The following code is used to construct the prompt that identifies the characters:

    private string CreateSystemMessageCharacters(string paramNewText)
    {
        return "You are a program that will identify the names of the named characters in the content of ###New Text###.\n" +
            "Only respond with the names of the named characters nothing else.\n" +
            "Only list each character name once.\n" +
            "List each character on a separate line.\n" +
            "Only respond with the names of the named characters nothing else.\n" +
            $"###New Text### is: {paramNewText}\n";
    }

After identifying the characters, the character dropdown is populated.
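Because the prompt asks for one name per line, turning the responses into a list for the dropdown is mostly a matter of splitting and de-duplicating. The sketch below assumes one response per chunk of text and treats names as equal regardless of letter case; the `CharacterListParser` class is a hypothetical name, not part of the application.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class CharacterListParser
{
    // Merges the per-chunk responses into one list of unique names.
    public static List<string> Parse(IEnumerable<string> responses)
    {
        return responses
            // Each response lists one character name per line
            .SelectMany(r => r.Split(new[] { '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries))
            .Select(name => name.Trim())
            .Where(name => name.Length > 0)
            // The same character is likely to appear in several chunks
            .Distinct(StringComparer.OrdinalIgnoreCase)
            .ToList();
    }
}
```

An LLM does not always follow formatting instructions exactly, so a real implementation may also need to strip bullets or numbering from the returned lines.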

After a character is selected and the Summarize Character button is clicked, the code in the following file: https://github.com/ADefWebserver/AIOrchestrator/blob/main/Model/OrchestratorMethods.SummerizeCharacter.cs is used to create a summary of the actions of the character.

This is the code used to construct the prompt:

    private string CreateSystemMessageCharacterSummary(string paramCharacterName, string paramNewText)
    {
        return "You are a program that will produce a short summary about ###Named Character### in the content of ###New Text###.\n" +
            "Only respond with a short summary about ###Named Character### nothing else.\n" +
            "If ###Named Character### is not mentioned in ###New Text### return [empty] as a response.\n" +
            $"###Named Character### is: {paramCharacterName}\n" +
            $"###New Text### is: {paramNewText}\n";
    }
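The [empty] marker in this prompt gives the calling code a simple way to discard chunks in which the character does not appear. A minimal sketch of that filtering, assuming one response per chunk, might look like this. The `CharacterSummaryCombiner` class is hypothetical and is not taken from the application.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class CharacterSummaryCombiner
{
    // The marker the prompt asks the LLM to return when the
    // character is not mentioned in a chunk.
    public const string EmptyMarker = "[empty]";

    // Joins the per-chunk summaries, skipping blank and [empty] responses.
    public static string Combine(IEnumerable<string> chunkResponses)
    {
        var kept = chunkResponses
            .Select(r => r.Trim())
            .Where(r => r.Length > 0 &&
                        !r.Equals(EmptyMarker, StringComparison.OrdinalIgnoreCase));

        return string.Join(Environment.NewLine, kept);
    }
}
```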

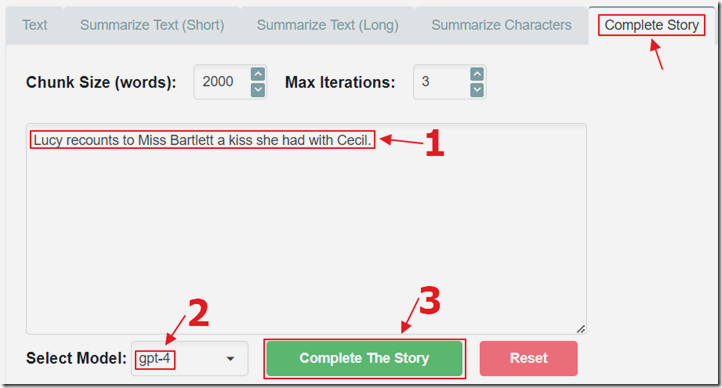

Complete Story

The Complete Story tab allows you to enter the starting part of an original paragraph in the story and then click the Complete The Story button to have the LLM complete the paragraph.

The paragraph will be completed using information from the text.

The code for this tab is contained in the following file: https://github.com/ADefWebserver/AIOrchestrator/blob/main/Model/OrchestratorMethods.CompleteStory.cs

This is the code used to construct the prompt:

    private string CreateSystemMessageStory(string paramNewText, string paramBackgroundText)
    {
        return "You are a program that will write a paragraph to continue a story starting " +
            "with the content in ###New Text###. Write the paragraph \n" +
            "only using information from ###New Text### and ###Background Text###.\n" +
            "Only respond with a paragraph that completes the story nothing else.\n" +
            "Only use information from ###New Text### and ###Background Text###.\n" +
            $"###New Text### is: {paramNewText}\n" +
            $"###Background Text### is: {paramBackgroundText}\n";
    }

Complete Chapter

The Complete Chapter code is contained in the following file: https://github.com/ADefWebserver/AIOrchestrator/blob/main/Model/OrchestratorMethods.CompleteChapter.cs
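The linked file is the authoritative source for how the chapter prompt is built. As a rough illustration of the general idea only, the sketch below shows one way the top matching summary sections could be packed into background text without exceeding a word budget. The `BackgroundBuilder` class, its `Build` method, and the word budget are all assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;

public static class BackgroundBuilder
{
    // Adds sections in ranked order (best match first) until the next
    // section would push the total past maxWords.
    public static string Build(IEnumerable<string> rankedSections, int maxWords)
    {
        var selected = new List<string>();
        int usedWords = 0;

        foreach (string section in rankedSections)
        {
            int words = section.Split(
                new[] { ' ', '\t', '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries).Length;

            // Stop rather than truncate, so every section stays intact
            if (usedWords + words > maxWords) break;

            selected.Add(section);
            usedWords += words;
        }

        return string.Join("\n\n", selected);
    }
}
```

Counting words is only an approximation of the token count that actually limits the context window, so a budget chosen this way should leave some headroom.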

Limitations and The Future

Writing original text using background text from a vector database is a challenging task that requires a lot of intelligence and creativity. However, it is not enough to just feed the text to a large language model (LLM) and hope for the best.

There are many factors that can affect the coherence and consistency of the generated text, such as the events, characters, locations, and timelines of the story. What if a character dies or a house burns down in Chapter 4 and you are writing Chapter 5? The AI will get information about the character and location because others refer to them, but it will not know the ‘state’ of the character or location. To address these issues, a computer program has to track timelines, background information, and story elements, and surgically feed the LLM the relevant information.
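To make the idea of tracking ‘state’ concrete, here is a small hypothetical sketch. It is not taken from any existing application: the `StoryEvent` record and `StoryState` class are invented for this example, and they assume that each state change is recorded with the chapter in which it happens.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One recorded change to a character or location (hypothetical type)
public record StoryEvent(int Chapter, string Subject, string State);

public static class StoryState
{
    // Returns the latest known state of every subject from the chapters
    // that come before the one being written.
    public static Dictionary<string, string> BeforeChapter(
        IEnumerable<StoryEvent> events, int chapter)
    {
        var state = new Dictionary<string, string>();

        // OrderBy is a stable sort, so later events overwrite earlier ones
        foreach (StoryEvent e in events.Where(e => e.Chapter < chapter).OrderBy(e => e.Chapter))
        {
            state[e.Subject] = e.State;
        }
        return state;
    }
}
```

When writing Chapter 5, a lookup like this would report that the character died and the house burned down in Chapter 4, and that information could be added to the prompt alongside the background text.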

This is what is being planned with http://AIStoryBuilders.com, an application that aims to address these issues and more. It is an open source project, and the source code is being developed at: https://github.com/ADefWebserver/AIStoryBuilders.

Links

https://github.com/ADefWebserver/AIOrchestrator

What Are Embeddings and Vectors (And Why Should I Care?)

What Is Azure OpenAI And Why Would You Want To Use It?

Creating A Blazor Chat Application With Azure OpenAI

Bring Your Own Data to Azure OpenAI

Azure OpenAI RAG Pattern using a SQL Vector Database

Recursive Azure OpenAI Function Calling

Azure OpenAI RAG Pattern using Functions and a SQL Vector Database

Algorithm of Thoughts: Enhancing Exploration of Ideas in Large Language Models