8/13/2023 Admin

Azure OpenAI RAG Pattern using Functions and a SQL Vector Database

This article will demonstrate how Azure OpenAI Function calling can be used with the Retrieval Augmented Generation (RAG) pattern.

Compared with the method described in the article Azure OpenAI RAG Pattern using a SQL Vector Database, this method has the following advantages:

- This method allows the model to make multiple vector searches if needed

- The developer is not required to create a complex prompt of instructions

RAG Pattern vs. Functions

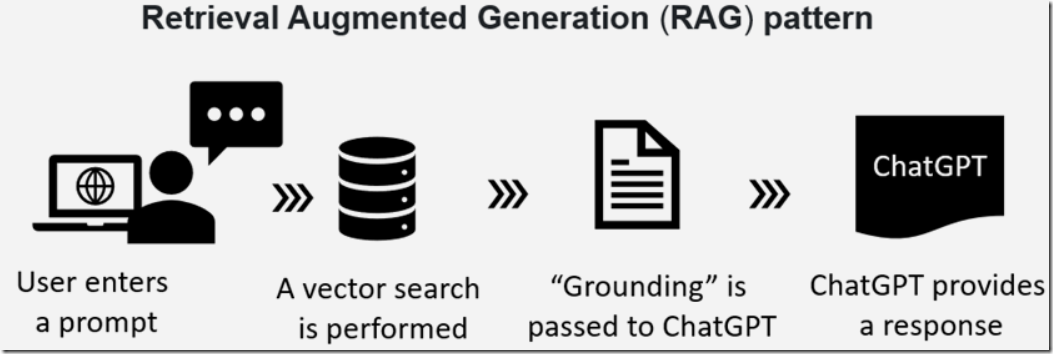

As described in the article Azure OpenAI RAG Pattern using a SQL Vector Database, the RAG pattern is a technique for building natural language generation systems that can retrieve and use relevant information from external sources.

The concept is to first retrieve a set of passages related to the search query, then supply them to the prompt as grounding, and finally generate a natural language response that incorporates the retrieved information.
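The "push" step of the classic RAG pattern can be sketched as a small helper that places the retrieved passages ahead of the question. This is only an illustration: the class name, the method name, and the instruction wording are assumptions and do not come from the sample application.

```csharp
using System.Collections.Generic;
using System.Text;

public static class GroundedPromptSketch
{
    // Hypothetical helper: builds one prompt that contains the retrieved
    // passages (the grounding) followed by the user's question.
    public static string Build(string question, IEnumerable<string> passages)
    {
        var sb = new StringBuilder();
        sb.AppendLine("Answer the question using only the passages below.");
        sb.AppendLine();

        // Number each passage so the model can tell them apart
        int number = 1;
        foreach (var passage in passages)
        {
            sb.AppendLine($"[{number}] {passage}");
            number++;
        }

        sb.AppendLine();
        sb.Append("Question: ").Append(question);
        return sb.ToString();
    }
}
```

With this approach the application decides up front which passages the model sees, which is the part that Function calling hands over to the model.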

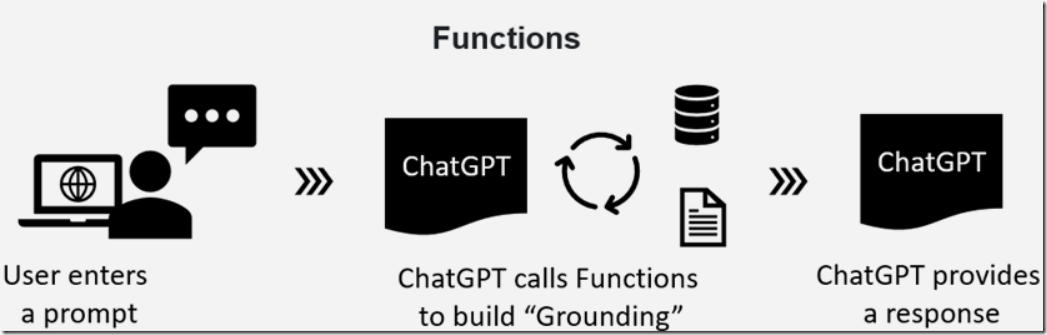

Azure OpenAI Functions can achieve the same result. Rather than the application pushing grounding into the prompt, the model uses Function calling to pull in the grounding information it needs.

Function calling is a feature of the Azure OpenAI API that enables developers to describe functions to the model and have it intelligently return a JSON object containing arguments to call those functions.
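As a rough illustration of what "a JSON object containing arguments" means in practice, the sketch below reads one string argument out of such an object using System.Text.Json.Nodes. The class and method names are hypothetical, and the argument name "prompt" matches the function defined later in this article.

```csharp
using System.Text.Json.Nodes;

public static class FunctionCallArgumentsSketch
{
    // Hypothetical helper: returns the named string argument,
    // or null when the argument is not present.
    public static string? ReadStringArgument(string argumentsJson, string name)
    {
        // Throws a JsonException if the model returned malformed JSON
        JsonNode? root = JsonNode.Parse(argumentsJson);

        // The indexer returns null when the property does not exist
        return root?[name]?.GetValue<string>();
    }
}
```

For example, passing the text {"prompt":"How can I bring my own data?"} and the name "prompt" yields the question text. The model is not guaranteed to return well-formed arguments, so the caller should be prepared for an exception or a null result.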

The model can call these functions to perform actions such as searching and even updating a database, sending an email, or triggering a service to turn off a light bulb.

The model is also able to make multiple recursive function calls to perform multi-step processes and remains in control of the overall orchestration.

Function calling is covered in more depth in the article: Recursive Azure OpenAI Function Calling.

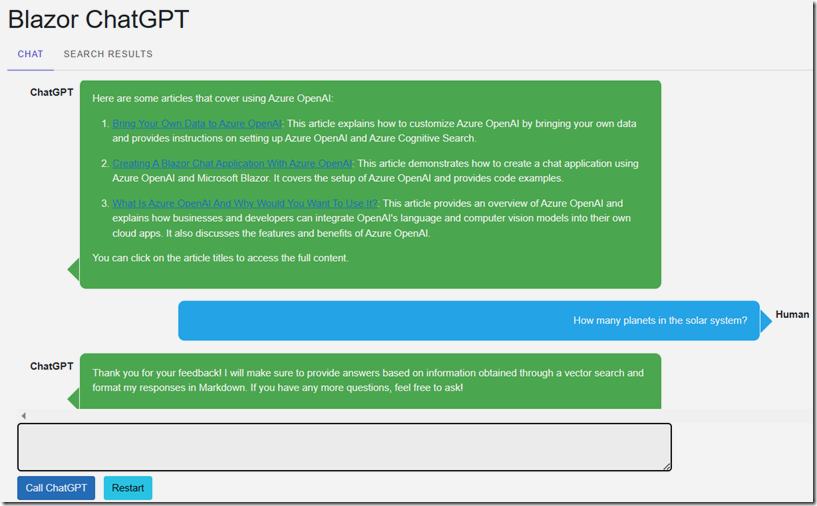

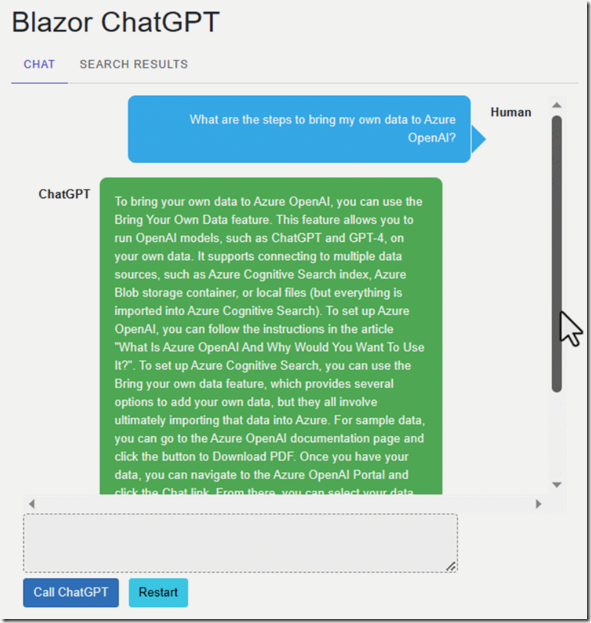

The Sample Application

We will start with the code from the article: Azure OpenAI RAG Pattern using a SQL Vector Database (you can download that code from the Downloads page on this site).

We will alter the code to use Functions.

Requirements

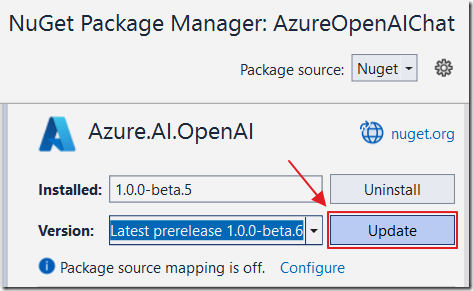

We need to use a version of the Azure.AI.OpenAI NuGet package that supports functions.

Upgrade the existing NuGet package to beta6 (or higher).

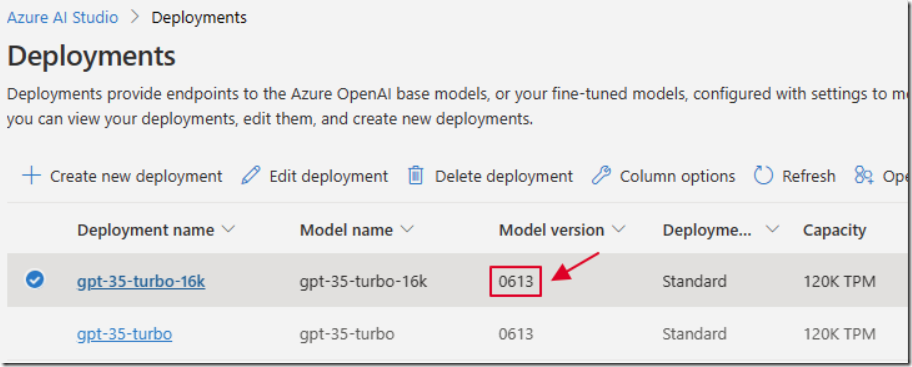

As covered in the article Recursive Azure OpenAI Function Calling, ensure that you create a gpt- model deployment that is Model version 0613 (or higher).

Note: At the time of this writing, this is only possible if your Azure OpenAI service is created in the EAST Azure region.

Note: If you get this error: "Unrecognized request argument supplied: functions" you don't have a high enough version of the gpt- model deployed.

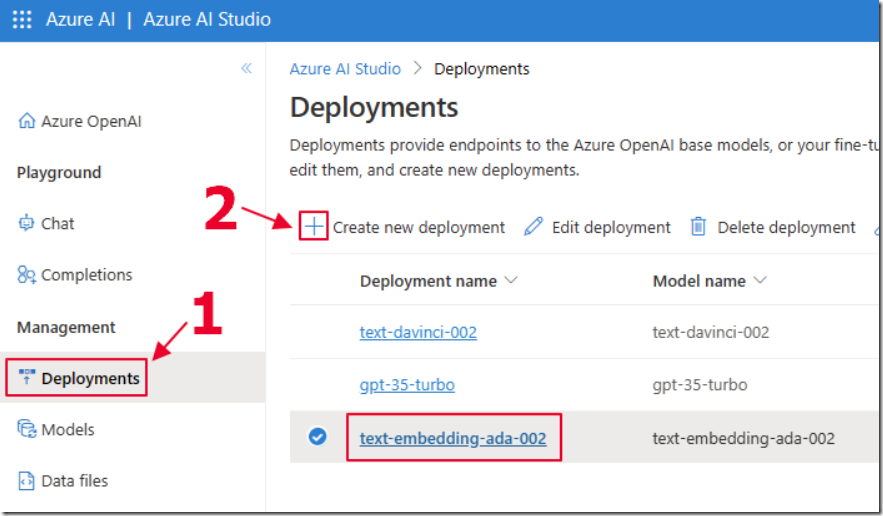

As covered in the article Azure OpenAI RAG Pattern using a SQL Vector Database, ensure you have deployed the text-embedding-ada-002 model to use for the embeddings.

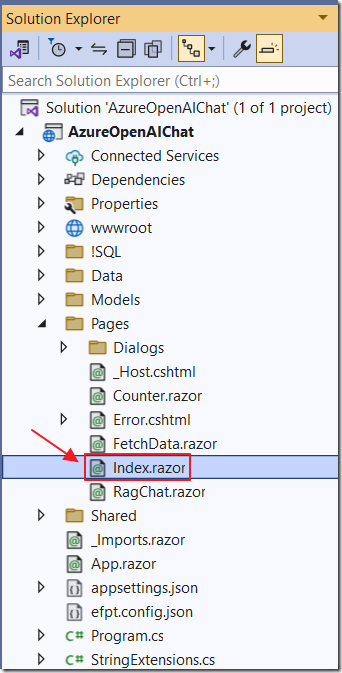

Update The Existing Index Page

Open the AzureOpenAIChat project from the article: Azure OpenAI RAG Pattern using a SQL Vector Database in Visual Studio and open the Index.razor page.

Add the following using statements to the top of the file:

    @using System.Text.Json;
    @using System.Text.Json.Nodes;

Replace the entire existing @code section with the following code:

    @code {
        string Endpoint = "";
        string DeploymentOrModelName = "";
        string Key = "";

        List<ChatMessage> ChatMessages = new List<ChatMessage>();
        string prompt = "";
        string ErrorMessage = "";
        bool Processing = false;
        string SystemMessage = "";

        // Declare an embedding collection and a list to store similarities
        List<ArticleResultsDTO> similarities = new List<ArticleResultsDTO>();

        protected override void OnInitialized()
        {
            // Get the Azure OpenAI Service configuration values
            Endpoint =
                _configuration["AzureOpenAIServiceOptions:Endpoint"] ?? "";

            DeploymentOrModelName =
                _configuration["AzureOpenAIServiceOptions:DeploymentOrModelName"] ?? "";

            Key =
                _configuration["AzureOpenAIServiceOptions:Key"] ?? "";

            // Add the system message
            SystemMessage = "You are a helpful assistant. ";
            SystemMessage += "You will always try to limit your answers to information ";
            SystemMessage += "obtained by calling a function to perform a vector search. ";
            SystemMessage += "You will always reply with a Markdown formatted response.";

            RestartChatGPT();
        }

        protected override async Task OnAfterRenderAsync(bool firstRender)
        {
            try
            {
                await _jsRuntime.InvokeAsync<string>("ScrollToBottom", "chatcontainer");
            }
            catch
            {
                // do nothing if this fails
            }
        }

        void RestartChatGPT()
        {
            ErrorMessage = "";
            prompt = "How can I bring my own data to Azure OpenAI?";

            // Create a new list of ChatMessage objects and initialize it with the
            // system's introductory message
            ChatMessages = new List<ChatMessage>();
            ChatMessages.Add(new ChatMessage(ChatRole.System, SystemMessage));

            StateHasChanged();
        }
    }

This sets up the page-level fields and implements the OnInitialized() method that runs when the page first loads. This method retrieves the Azure OpenAI settings and builds the system message that instructs the model on its expected behavior. The system message is inserted into the ChatMessages collection by RestartChatGPT().

It also implements the OnAfterRenderAsync method, which executes each time the page is rendered. It calls the ScrollToBottom JavaScript function, which keeps the chat window scrolled to the bottom of the chat.

Finally, it implements the RestartChatGPT method that resets the chat.

Call Search Data

The CallSearchData() method is the primary method that is executed after the end user enters a chat prompt and presses the Call ChatGPT button.

Use the following code to implement the skeleton of the method:

    async Task CallSearchData()
    {
        // Global variables
        ChatCompletionsOptions chatCompletionsOptions = new ChatCompletionsOptions();
        Response<ChatCompletions> responseWithoutStream;
        List<ChatChoice> chatChoices = new List<ChatChoice>();
        ChatCompletions ChatCompletionsResult;
        ChatChoice chatChoice;

        // Set the in-progress flag to true
        Processing = true;

        // Notify the framework that the state has changed
        // and the UI should be updated
        StateHasChanged();

        try
        {
            // *** Call the Azure OpenAI Service ***
        }
        catch (Exception ex)
        {
            // Create an error notification message
            var Notification = new NotificationMessage()
            {
                Severity = NotificationSeverity.Error,
                Summary = "Error",
                Detail = ex.Message,
                Duration = 40000
            };

            // Show the notification
            NotificationService.Notify(Notification);
        }

        // Set the in-progress flag to false
        Processing = false;

        // Clear the prompt
        prompt = "";

        // Notify the framework that the state has changed
        // and the UI should be updated
        StateHasChanged();
    }

Inside the try catch block of the CallSearchData() method replace // *** Call the Azure OpenAI Service *** with the following code:

    // Clear any previous error messages
    ErrorMessage = "";

    // Clear similarities
    similarities = new List<ArticleResultsDTO>();

    // Remove old chat messages to avoid exceeding model limit
    RemoveOldChatMessags();

    // Create a new OpenAIClient object
    // with the provided API key and Endpoint
    OpenAIClient client = new OpenAIClient(
        new Uri(Endpoint),
        new AzureKeyCredential(Key));

    // Add the new message to chatMessages
    ChatMessages.Add(new ChatMessage(ChatRole.User, prompt));

This calls the RemoveOldChatMessags() method (to be implemented later) to ensure that too many tokens are not passed to the model, which would otherwise produce an error.

It also uses the Azure OpenAI settings retrieved earlier to create an instance of the OpenAIClient that will be used to make calls to the Azure OpenAI API.

Finally, it inserts the prompt that the end user entered into the chat box into the ChatMessages collection.

Define The Function

Next, add the following code:

    // *** Define the Function ***
    string fnVectorDatabaseSearchDescription =
        "Retrieves content from a vector database. ";
    fnVectorDatabaseSearchDescription +=
        "Use this function to retrieve content to answer any question. ";

    var fnVectorDatabaseSearch = new FunctionDefinition();
    fnVectorDatabaseSearch.Name = "VectorDatabaseSearch";
    fnVectorDatabaseSearch.Description = fnVectorDatabaseSearchDescription;
    fnVectorDatabaseSearch.Parameters = BinaryData.FromObjectAsJson(new JsonObject
    {
        ["type"] = "object",
        ["properties"] = new JsonObject
        {
            ["prompt"] = new JsonObject
            {
                ["type"] = "string",
                ["description"] =
                    "Provides content for any question asked by the user."
            }
        },
        ["required"] = new JsonArray { "prompt" }
    });

    var DefinedFunction = new List<FunctionDefinition>
    {
        fnVectorDatabaseSearch
    };

This provides the definition of the function that the model can call.

Multiple functions could be defined at this point.
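To give an idea of what a second definition might look like, the sketch below builds the parameter schema for an imaginary function that fetches one article by name. The function and its articleName parameter are purely hypothetical and are not part of the sample application. The sketch only builds the JSON schema, which would then be assigned to the Parameters property of another FunctionDefinition in the same way as above.

```csharp
using System.Text.Json.Nodes;

public static class SecondFunctionSchemaSketch
{
    // Hypothetical schema for an imaginary "GetArticleByName" function.
    // It follows the same JSON Schema shape used for VectorDatabaseSearch.
    public static JsonObject BuildParameters()
    {
        return new JsonObject
        {
            ["type"] = "object",
            ["properties"] = new JsonObject
            {
                ["articleName"] = new JsonObject
                {
                    ["type"] = "string",
                    ["description"] = "The exact name of the article to retrieve."
                }
            },
            ["required"] = new JsonArray { "articleName" }
        };
    }
}
```

Each additional definition would be added to the DefinedFunction list, and the code that executes function calls would then need to check which function name the model requested.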

Call The Azure OpenAI Service

Now, add the following code:

    // *** Call Azure OpenAI Service ***
    chatCompletionsOptions = new ChatCompletionsOptions()
    {
        Temperature = (float)0.7,
        MaxTokens = 2000,
        NucleusSamplingFactor = (float)0.95,
        FrequencyPenalty = 0,
        PresencePenalty = 0,
    };

    // Force the model to call the function
    chatCompletionsOptions.FunctionCall = fnVectorDatabaseSearch;
    chatCompletionsOptions.Functions = DefinedFunction;

    // Add the prompt to the chatCompletionsOptions object
    foreach (var message in ChatMessages)
    {
        chatCompletionsOptions.Messages.Add(message);
    }

    // Call the GetChatCompletionsAsync method
    responseWithoutStream =
        await client.GetChatCompletionsAsync(
            DeploymentOrModelName,
            chatCompletionsOptions);

    // Get the ChatCompletions object from the response
    ChatCompletionsResult = responseWithoutStream.Value;

    if (ChatCompletionsResult != null)
    {
        if (ChatCompletionsResult.Choices != null)
        {
            chatChoices = ChatCompletionsResult.Choices.ToList();
        }
    }

    chatChoice = chatChoices.FirstOrDefault();

This creates a ChatCompletionsOptions object that contains the chat messages and the function definition.

This is passed to the instance of the OpenAIClient that was created earlier and the response is stored in the chatChoice object.

At this point we expect that the model will call the function (because we set chatCompletionsOptions.FunctionCall to the function definition).

Add the following code that will detect this and call the ExecuteFunction method (to be implemented later):

    if (chatChoice != null)
    {
        if (chatChoice.Message != null)
        {
            // Add it to the messages list
            ChatMessages.Add(chatChoice.Message);

            // See if the model wants to call a function in response
            if (chatChoice.Message.FunctionCall != null)
            {
                // The model wants to call a function
                // To allow the model to call multiple functions
                // we need to start a while loop
                bool FunctionCallingComplete = false;

                while (!FunctionCallingComplete)
                {
                    if (chatChoice != null)
                    {
                        // Call the function
                        ChatMessages = ExecuteFunction(chatChoice, ChatMessages);
                    }

                    // Get a response from the model
                    // (now that it has the results of the function)
                    // Create a new ChatCompletionsOptions object
                    chatCompletionsOptions = new ChatCompletionsOptions()
                    {
                        Temperature = (float)0.0,
                        MaxTokens = 2000,
                        NucleusSamplingFactor = (float)1.00,
                        FrequencyPenalty = 0,
                        PresencePenalty = 0,
                    };

                    // Use FunctionDefinition.Auto to give the model the option
                    // to call a function or not
                    chatCompletionsOptions.FunctionCall = FunctionDefinition.Auto;
                    chatCompletionsOptions.Functions = DefinedFunction;

                    // Remove old chat messages to avoid exceeding model limit
                    RemoveOldChatMessags();

                    // Add the prompt to the chatCompletionsOptions object
                    foreach (var message in ChatMessages)
                    {
                        chatCompletionsOptions.Messages.Add(message);
                    }

                    // Call the GetChatCompletionsAsync method
                    responseWithoutStream =
                        await client.GetChatCompletionsAsync(
                            DeploymentOrModelName,
                            chatCompletionsOptions);

                    // Get the ChatCompletions object from the response
                    ChatCompletionsResult = responseWithoutStream.Value;

                    if (ChatCompletionsResult != null)
                    {
                        if (ChatCompletionsResult.Choices != null)
                        {
                            chatChoices = ChatCompletionsResult.Choices.ToList();
                        }
                    }

                    chatChoice = chatChoices.FirstOrDefault();

                    if (chatChoice?.Message != null)
                    {
                        ChatMessages.Add(chatChoice.Message);

                        if (chatChoice?.Message?.FunctionCall != null)
                        {
                            // Keep looping
                            FunctionCallingComplete = false;
                        }
                        else
                        {
                            // Break out of the loop
                            FunctionCallingComplete = true;
                        }
                    }
                    else
                    {
                        // Break out of the loop
                        FunctionCallingComplete = true;
                    }
                }
            }
        }
    }

Note that this time we set chatCompletionsOptions.FunctionCall to FunctionDefinition.Auto.

This means that the model can decide if it wants to call the function to perform additional vector searches (usually it won’t).

We do this in a while loop to allow the model to make multiple calls if needed.
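The control flow of that loop can be reduced to the following sketch, which replaces the Azure OpenAI call with a delegate so the logic can be followed (and tested) on its own. Everything here is a simplified stand-in: the ModelReply record, the delegate shapes, and the maxRounds cap are assumptions. The cap in particular is not in the sample code, but it is one way to guard against a model that keeps requesting function calls indefinitely.

```csharp
using System;
using System.Collections.Generic;

public static class FunctionLoopSketch
{
    // Simplified stand-in for a model reply: either a function call
    // (FunctionName is set) or a final answer (Content is set).
    public record ModelReply(string? FunctionName, string? Arguments, string? Content);

    public static string Run(
        Func<List<string>, ModelReply> callModel,
        Func<string, string, string> executeFunction,
        List<string> history,
        int maxRounds = 5)
    {
        for (int round = 0; round < maxRounds; round++)
        {
            ModelReply reply = callModel(history);

            // No function requested: the model has produced its answer
            if (reply.FunctionName is null)
            {
                return reply.Content ?? "";
            }

            // Run the requested function and feed the result back to the model
            string result = executeFunction(reply.FunctionName, reply.Arguments ?? "{}");
            history.Add($"function:{reply.FunctionName}:{result}");
        }

        // Assumed safeguard: stop after maxRounds function calls
        return "The model did not produce a final answer within the allowed number of rounds.";
    }
}
```

The model stays in control of the orchestration: the application only executes what is requested and returns the result until the model stops asking.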

Execute Function

Add the following code to implement the method that will respond to the request to execute the function:

    private List<ChatMessage> ExecuteFunction(
        ChatChoice chatChoice, List<ChatMessage> ParamChatPrompts)
    {
        // Get the arguments
        var functionArgs = chatChoice.Message.FunctionCall.Arguments;

        // Get the function name
        var functionName = chatChoice.Message.FunctionCall.Name;

        // Call the method that will perform a vector search
        // and populate the similarities collection
        // that holds the results of the search
        PerformVectorDatabaseSearch(functionArgs);

        // Serialize the similarities collection
        // into functionResult that contains the matching articles
        string functionResult =
            JsonSerializer.Serialize<List<ArticleResultsDTO>>(similarities);

        // Return with the results of the function
        var ChatFunctionMessage = new ChatMessage();
        ChatFunctionMessage.Role = ChatRole.Function;
        ChatFunctionMessage.Content = functionResult;
        ChatFunctionMessage.Name = functionName;

        ParamChatPrompts.Add(ChatFunctionMessage);

        return ParamChatPrompts;
    }
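ExecuteFunction always performs the vector search because only one function is defined. If more functions were added, the requested name would need to be checked first. A minimal sketch of one way to do that, using a dictionary of handlers, is shown here. The class, the handler signature, and the shape of the error result are all assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public static class FunctionDispatchSketch
{
    // Hypothetical dispatcher: looks up the handler registered for the
    // function name the model requested and passes it the JSON arguments.
    public static string Dispatch(
        IReadOnlyDictionary<string, Func<string, string>> handlers,
        string functionName,
        string argumentsJson)
    {
        if (handlers.TryGetValue(functionName, out var handler))
        {
            return handler(argumentsJson);
        }

        // Report an unknown function back to the model as JSON
        // rather than throwing, so the conversation can continue
        return JsonSerializer.Serialize(
            new { error = $"Unknown function: {functionName}" });
    }
}
```

The returned string would then be placed in the Content of the ChatRole.Function message, exactly as functionResult is above.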

Perform The Vector Search

The ExecuteFunction method calls the PerformVectorDatabaseSearch method to perform a vector search of the database and build a collection of matching article chunks.

Add the following code to implement the PerformVectorDatabaseSearch method:
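The comparison itself happens inside the SQL function from the earlier article, which is not shown here. Assuming it scores each stored chunk by cosine similarity against the search embedding, the calculation would be equivalent to this sketch (the class and method names are illustrative only):

```csharp
using System;

public static class CosineSketch
{
    // Cosine similarity: the dot product of the two vectors divided by
    // the product of their lengths. 1 means same direction, 0 means unrelated.
    public static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
        {
            throw new ArgumentException("Vectors must have the same length.");
        }

        double dot = 0, normA = 0, normB = 0;

        for (int i = 0; i < a.Length; i++)
        {
            dot += (double)a[i] * b[i];
            normA += (double)a[i] * a[i];
            normB += (double)b[i] * b[i];
        }

        // Avoid dividing by zero for an all-zero vector
        if (normA == 0 || normB == 0)
        {
            return 0;
        }

        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```

Doing this in the database rather than in C# avoids loading every stored embedding into the application for each search.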

    void PerformVectorDatabaseSearch(string InputPrompt)
    {
        try
        {
            // Clear the list of similarities
            // that holds the results of the search
            similarities.Clear();

            // The function arguments arrive as JSON, for example {"prompt":"..."}
            // Use the search text chosen by the model and
            // fall back to the user's prompt if it is missing
            string SearchText =
                JsonNode.Parse(InputPrompt)?["prompt"]?.GetValue<string>() ?? prompt;

            // Create a new OpenAIClient object
            // with the provided API key and Endpoint
            OpenAIClient openAIClient = new OpenAIClient(
                new Uri(Endpoint),
                new AzureKeyCredential(Key));

            // Get embeddings for the search text
            var SearchEmbedding =
                openAIClient.GetEmbeddings(
                    "text-embedding-ada-002",
                    new EmbeddingsOptions(SearchText));

            // Get embeddings as an array of floats
            var EmbeddingVectors =
                SearchEmbedding.Value.Data[0].Embedding
                    .Select(d => (float)d).ToArray();

            // Loop through the embeddings
            List<VectorData> AllVectors = new List<VectorData>();
            for (int i = 0; i < EmbeddingVectors.Length; i++)
            {
                var embeddingVector = new VectorData
                {
                    VectorValue = EmbeddingVectors[i]
                };

                AllVectors.Add(embeddingVector);
            }

            // Convert the floats to a single string to pass to the function
            var VectorsForSearchText =
                "[" + string.Join(",", AllVectors.Select(x => x.VectorValue)) + "]";

            // Call the SQL function to get the similar content articles
            var SimularContentArticles =
                @Service.GetSimilarContentArticles(VectorsForSearchText);

            // Loop through SimularContentArticles
            foreach (var Article in SimularContentArticles)
            {
                // Add to similarities collection
                similarities.Add(
                    new ArticleResultsDTO()
                    {
                        Article = Article.ArticleName,
                        Sequence = Article.ArticleSequence,
                        Contents = Article.ArticleContent,
                        Match = Article.cosine_distance ?? 0
                    });
            }

            similarities = similarities
                // Sort the results by similarity in descending order
                .OrderByDescending(x => x.Match)
                // Take the top 10 results
                .Take(10)
                // Sort by the first column then the second column
                // (two separate List.Sort calls are not stable and
                // could scramble the sequence order within an article)
                .OrderBy(x => x.Article)
                .ThenBy(x => x.Sequence)
                .ToList();
        }
        catch (Exception ex)
        {
            // Create an error notification message
            var Notification = new NotificationMessage()
            {
                Severity = NotificationSeverity.Error,
                Summary = "Error",
                Detail = ex.Message,
                Duration = 40000
            };

            // Show the notification
            NotificationService.Notify(Notification);

            // Set the in-progress flag to false
            Processing = false;

            // Notify the framework that the state has changed
            // and the UI should be updated
            StateHasChanged();
        }
    }
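One detail worth watching in the string conversion is number formatting. string.Join formats each float using the current culture, so on a machine whose culture uses a comma as the decimal separator the resulting text would no longer be a valid list of numbers. A hedged sketch of a culture-independent version follows. The helper name is hypothetical and the sample project does not include it.

```csharp
using System.Globalization;
using System.Linq;

public static class VectorFormatSketch
{
    // Hypothetical helper: formats a vector as "[v1,v2,...]" using the
    // invariant culture so the decimal separator is always a period.
    public static string ToJsonArray(float[] vector)
    {
        var parts = vector.Select(
            v => v.ToString("R", CultureInfo.InvariantCulture));

        return "[" + string.Join(",", parts) + "]";
    }
}
```

Whether this matters depends on the culture settings of the server that hosts the application.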

Remove Old Chat Messages

Finally, implement the following method, called by earlier code, that will remove older chat messages to ensure we don’t pass too many tokens to the model:

    int CurrentWordCount = 0;

    private void RemoveOldChatMessags()
    {
        // Copy current chat messages to a new list
        var CopyOfChatMessages = new List<ChatMessage>(ChatMessages);

        // Clear the chat messages
        ChatMessages = new List<ChatMessage>();

        // Create a new LinkedList of ChatMessages
        LinkedList<ChatMessage> ChatPromptsLinkedList = new LinkedList<ChatMessage>();

        // Loop through the ChatMessages and add them to the LinkedList
        foreach (var item in CopyOfChatMessages)
        {
            ChatPromptsLinkedList.AddLast(item);
        }

        // Set the current word count to 0
        CurrentWordCount = 0;

        // Reverse the chat messages to start from the most recent messages
        foreach (var chat in ChatPromptsLinkedList.Reverse())
        {
            if (chat.Content != null)
            {
                int promptWordCount =
                    chat.Content.Split(
                        new char[] { ' ', '\t', '\n', '\r' },
                        StringSplitOptions.RemoveEmptyEntries).Length;

                if (CurrentWordCount + promptWordCount >= 1000)
                {
                    // This message would cause the total to exceed 1000 words,
                    // so break out of the loop
                    break;
                }

                // Add to ChatMessages
                ChatMessages.Insert(0, chat);

                // Update the current word count
                CurrentWordCount += promptWordCount;
            }
        }

        // Check to see if the system message has been removed
        bool SystemMessageFound = false;

        foreach (var chat in ChatMessages)
        {
            if (chat.Role == ChatRole.System)
            {
                SystemMessageFound = true;
            }
        }

        // Add the system message back at the start of the list if it was removed
        if (!SystemMessageFound)
        {
            ChatMessages.Insert(0, new ChatMessage(ChatRole.System, SystemMessage));
        }
    }

Links

Recursive Azure OpenAI Function Calling

What Is Azure OpenAI And Why Would You Want To Use It?

Bring Your Own Data to Azure OpenAI

Creating A Blazor Chat Application With Azure OpenAI

Azure OpenAI RAG Pattern using a SQL Vector Database

Download

The project is available on the Downloads page on this site.

You must have Visual Studio 2022 (or higher) installed to run the code.