7/16/2023 Admin

Azure OpenAI RAG Pattern using a SQL Vector Database

Azure OpenAI is a powerful natural language generation service that can create engaging and realistic text. However, sometimes you may want to customize your text with your own business data, such as your company name, products, services, etc.

In this article, we will show you how to use a vector database to store and retrieve your business data and use it to enhance your OpenAI completions text. You will learn how to create a vector database, how to query it using natural language, and how to integrate it with OpenAI completions.

Most importantly we will show how to use a normal SQL server database to save hundreds, even thousands of dollars than if you used a normal vector database.

One of the advantages of using a vector database is that you can feed the granular search results to an OpenAI prompt to produce completions, which can provide answers based on the facts from your data.

This is similar to what Bing Chat and Google Bard are doing, but with your own private data. Also, when using the Microsoft OpenAI service, your data stays private and secure.

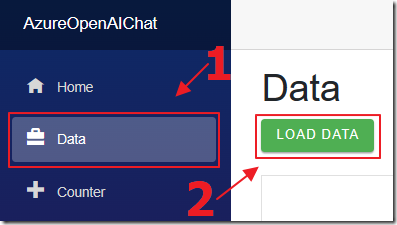

Explore The Sample Application

When you set-up and run the sample application (located on the Downloads page of this site), the first step is to navigate to the Data page and click the LOAD DATA button.

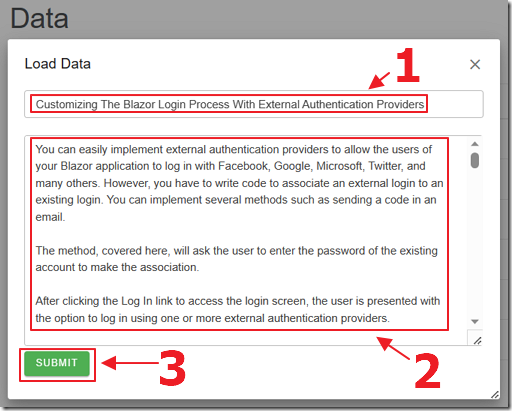

Enter a title for the Article and paste in the contents for the Article and click the SUBMIT button.

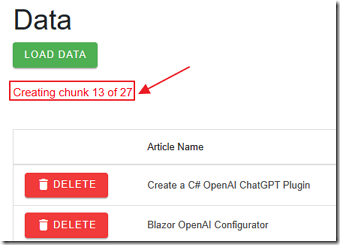

The contents of the Article will be split up into chunks of 200 word segments and will be passed to OpenAI to create embeddings.

The embeddings will consist of an array of vectors that will be stored in the SQL server database.

A popup will indicate when the process is complete.

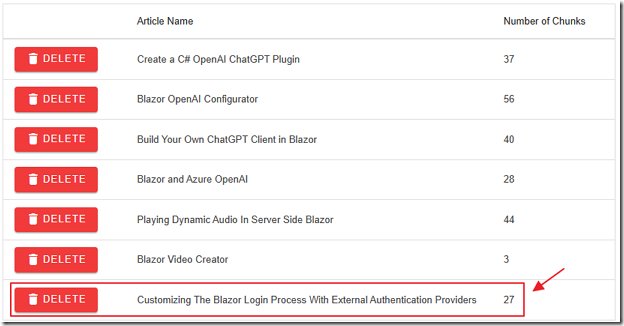

The Article will display in the Article list.

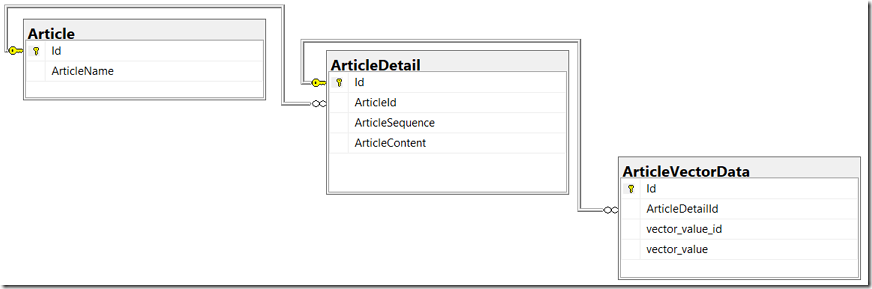

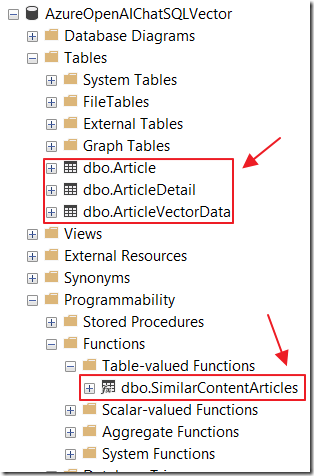

The structure of the database is that the Article table has associated records stored in the ArticleDetail table and they have associated vectors stored in the ArticleVectorData table.

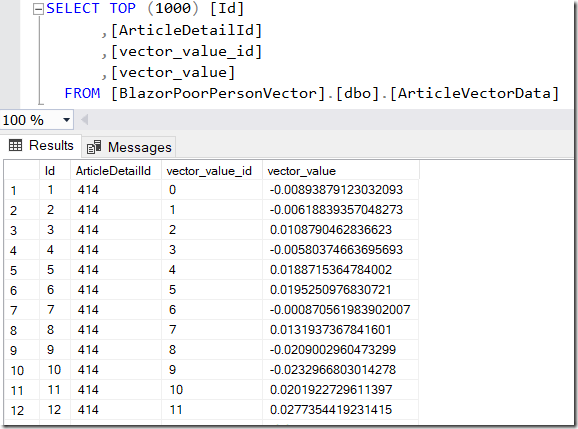

Each ArticleDetail contains an array of 1536 vectors, each stored in a separate row in the ArticleVectorData table.

Each vector for a ArticleDetail has a vector_value_id that is the sequential position of the vector in the array of vectors returned by the embedding for an ArticleDetail.

To compare the vectors (stored in the vector_value field) that we get from the search request (in the next step), we will use a cosign similarity calculation.

We will match each vector using its vector_value_id for each vector.

Navigate to the Home page.

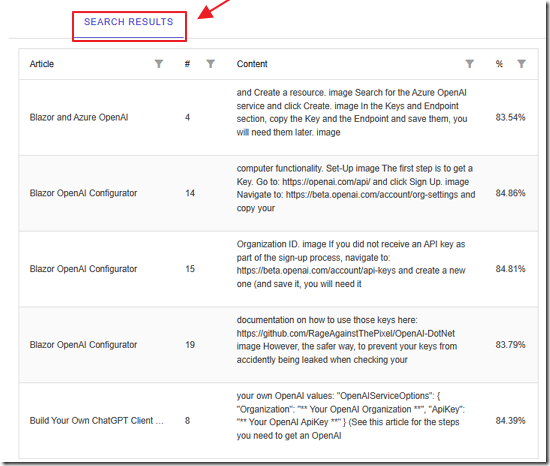

When we enter a search request and press the SEARCH button, we can click on the SEARCH RESULTS tab to see the top 10 results retrieved from the vector search.

The results will indicate the Article and the content from the chunk.

The match percentage will be shown, and the chunks in each Article will be listed in the order that they appear in the Article.

This is important because we need to feed the chunks to the prompt that will be sent to the OpenAI completion API in order so that the information makes sense to the OpenAI language model.

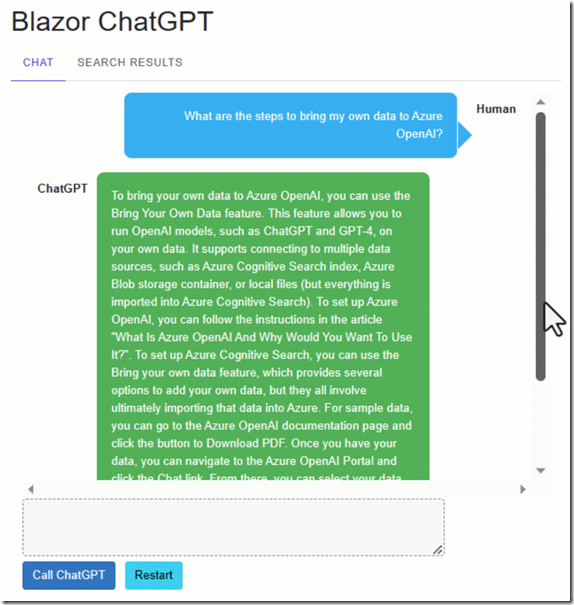

Clicking on the Chat tab will display the response from the model after the results of the vector search have been passed to the completion prompt.

The RAG Pattern

As described in the article: Bring Your Own Data to Azure OpenAI the RAG pattern is a technique for building natural language generation systems that can retrieve and use relevant information from external sources.

The concept is to first retrieve a set of passages that are related to the search query, then use them to supply grounding to the prompt, to finally generate a natural language response that incorporates the retrieved information.

Getting Embeddings

To obtain the vectors needed for the vector search, we process the text by calling an OpenAI model that produces embeddings that consist of an array of floats.

An embedding is a mathematical representation of a word or phrase that captures its meaning in a high-dimensional space.

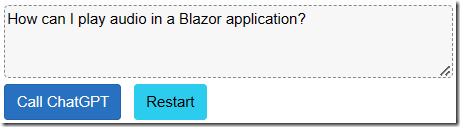

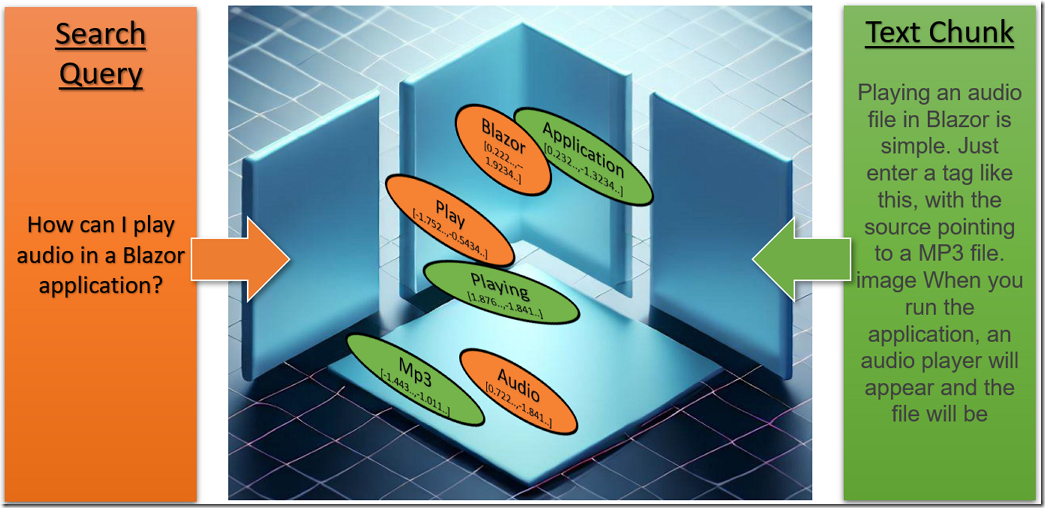

For example, if a query is entered regarding playing audio in a Blazor application.

The query is turned into embeddings and cosign similarity is used to calculate its vectors against the vectors in the text chunks stored in the database.

The text chunks, associated with the closest matching vectors, is then provided to the prompt, as grounding, that is then passed to the Azure OpenAI service.

Setting Up Azure OpenAI

See the article: What Is Azure OpenAI And Why Would You Want To Use It? for instructions on gaining access and setting up Azure OpenAI.

Follow the Deploy A Model section in that article for instructions on how to deploy a model.

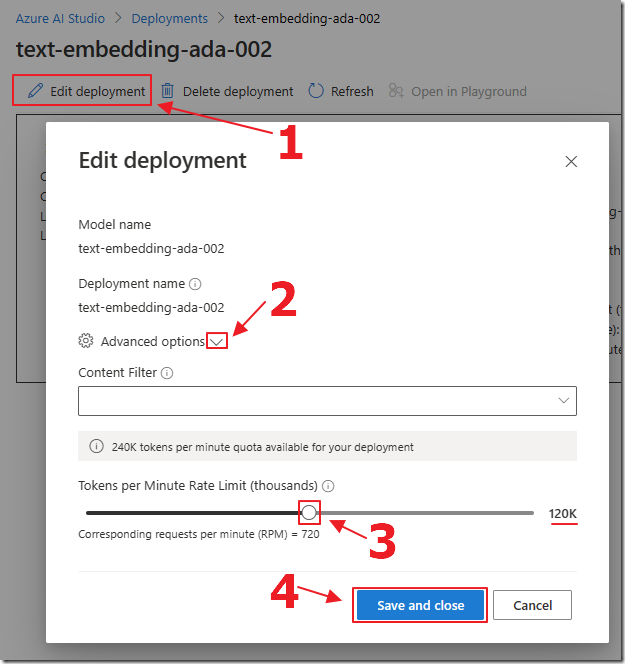

In this case, select the text-embedding-ada-002 model and give it the Deployment name of text-embedding-ada-002.

This model will be used to obtain the embeddings that will provide the vector data we will then store in the database.

Select the model deployment, select Edit deployment, expand Advanced options, and set the Tokens per Minute to at least 120K.

Create The Application

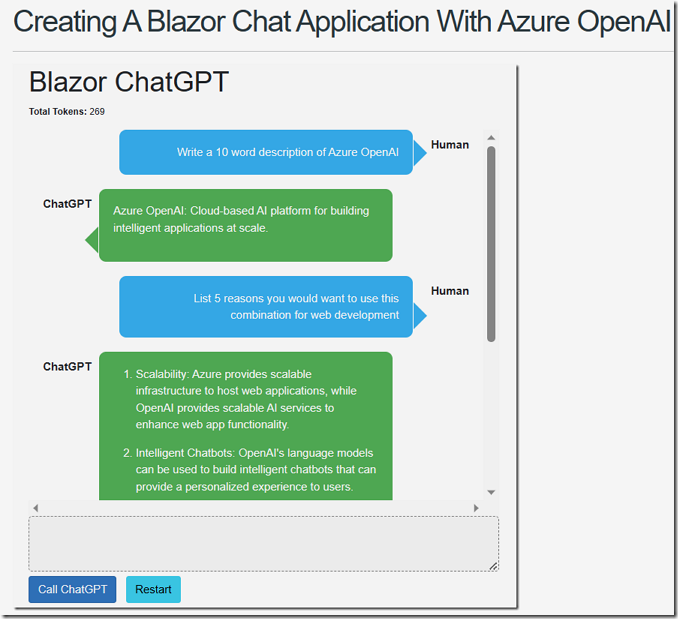

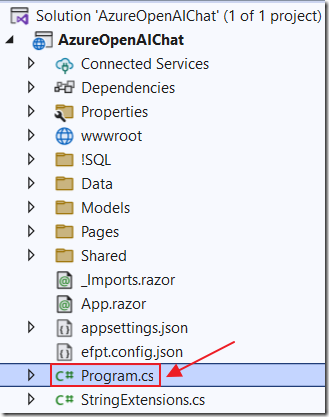

We will start with the code for the project AzureOpenAIChat created in the article Creating A Blazor Chat Application With Azure OpenAI (you can download the code from the Downloads page on this site).

Open it in Visual Studio 2022 (or higher).

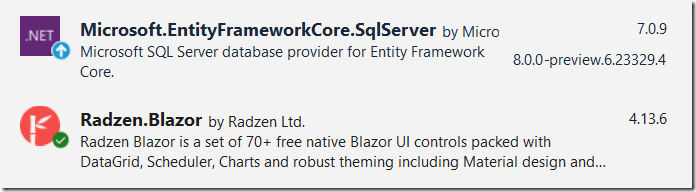

Install the following Nuget packages:

Note: Radzen requires additional set-up. Follow the directions here: https://blazor.radzen.com/get-started

Ensure you configure Dialog, Notification, ContextMenu and Tooltip components.

Also, install EF Core Power Tools in your Visual Studio. This will be used to create the files needed to implement the DataContext.

Create The Database

You will need access to a SQL Server.

You can download the free SQL Server 2022 Developer edition at the following link: https://www.microsoft.com/en-us/sql-server/sql-server-downloads.

In the SQL Server, create a new database called: AzureOpenAIChatSQLVector.

Add a user with db owner permissions. Note the account username and password, you will need it later.

Run the following script to create the required tables, that will store the article contents and the associated vector data, and the function used to perform searches on the vector data:

SET ANSI_NULLS ONGOSET QUOTED_IDENTIFIER ONGOIF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[Article]') AND type in (N'U'))BEGINCREATE TABLE [dbo].[Article]([Id] [int] IDENTITY(1,1) NOT NULL,[ArticleName] [nvarchar](250) NOT NULL,CONSTRAINT [PK_Article] PRIMARY KEY CLUSTERED([Id] ASC)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]) ON [PRIMARY]ENDGOSET ANSI_NULLS ONGOSET QUOTED_IDENTIFIER ONGOIF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[ArticleDetail]') AND type in (N'U'))BEGINCREATE TABLE [dbo].[ArticleDetail]([Id] [int] IDENTITY(1,1) NOT NULL,[ArticleId] [int] NOT NULL,[ArticleSequence] [int] NOT NULL,[ArticleContent] [nvarchar](max) NULL,CONSTRAINT [PK_ArticleDetail] PRIMARY KEY CLUSTERED([Id] ASC)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]ENDGOSET ANSI_NULLS ONGOSET QUOTED_IDENTIFIER ONGOIF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[ArticleVectorData]') AND type in (N'U'))BEGINCREATE TABLE [dbo].[ArticleVectorData]([Id] [int] IDENTITY(1,1) NOT NULL,[ArticleDetailId] [int] NOT NULL,[vector_value_id] [int] NOT NULL,[vector_value] [float] NOT NULL,CONSTRAINT [PK_ArticleVectorData] PRIMARY KEY CLUSTERED([Id] ASC)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]) ON [PRIMARY]ENDGOSET ANSI_NULLS ONGOSET QUOTED_IDENTIFIER ONGOIF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[SimilarContentArticles]')

BEGINexecute dbo.sp_executesql @statement = N'/*From GitHub project: Azure-Samples/azure-sql-db-openai*/CREATE function [dbo].[SimilarContentArticles](@vector nvarchar(max))returns tableasreturn with cteVector as(selectcast([key] as int) as [vector_value_id],cast([value] as float) as [vector_value]fromopenjson(@vector)),cteSimilar as(select top (10)v2.ArticleDetailId,sum(v1.[vector_value] * v2.[vector_value]) /(sqrt(sum(v1.[vector_value] * v1.[vector_value]))*sqrt(sum(v2.[vector_value] * v2.[vector_value]))) as cosine_distancefromcteVector v1inner joindbo.ArticleVectorData v2 on v1.vector_value_id = v2.vector_value_idgroup byv2.ArticleDetailIdorder bycosine_distance desc)select(select [ArticleName] from [Article] where id = a.ArticleId) as ArticleName,a.ArticleContent,a.ArticleSequence,r.cosine_distancefromcteSimilar rinner joindbo.[ArticleDetail] a on r.ArticleDetailId = a.id'ENDGOIF NOT EXISTS (SELECT * FROM sys.indexes WHERE object_id = OBJECT_ID(N'[dbo].[ArticleVectorData]') AND name = N'ArticleDetailsIdClusteredColumnStoreIndex')CREATE NONCLUSTERED COLUMNSTORE INDEX [ArticleDetailsIdClusteredColumnStoreIndex] ON [dbo].[ArticleVectorData]([ArticleDetailId])WITH (DROP_EXISTING = OFF, COMPRESSION_DELAY = 0) ON [PRIMARY]GOIF NOT EXISTS (SELECT * FROM sys.foreign_keys WHERE object_id = OBJECT_ID(N'[dbo].[FK_ArticleDetail_Article]') AND parent_object_id = OBJECT_ID(N'[dbo].[ArticleDetail]'))ALTER TABLE [dbo].[ArticleDetail] WITH CHECK ADD CONSTRAINT [FK_ArticleDetail_Article] FOREIGN KEY([ArticleId])REFERENCES [dbo].[Article] ([Id])ON DELETE CASCADEGOIF EXISTS (SELECT * FROM sys.foreign_keys WHERE object_id = OBJECT_ID(N'[dbo].[FK_ArticleDetail_Article]') AND parent_object_id = OBJECT_ID(N'[dbo].[ArticleDetail]'))ALTER TABLE [dbo].[ArticleDetail] CHECK CONSTRAINT [FK_ArticleDetail_Article]GOIF NOT EXISTS (SELECT * FROM sys.foreign_keys WHERE object_id = OBJECT_ID(N'[dbo].[FK_ArticleVectorData_ArticleDetail]') AND parent_object_id = OBJECT_ID(N'[dbo].[ArticleVectorData]'))ALTER TABLE [dbo].[ArticleVectorData] WITH CHECK ADD CONSTRAINT [FK_ArticleVectorData_ArticleDetail] FOREIGN KEY([ArticleDetailId])REFERENCES [dbo].[ArticleDetail] ([Id])ON DELETE CASCADEGOIF EXISTS (SELECT * FROM sys.foreign_keys WHERE object_id = OBJECT_ID(N'[dbo].[FK_ArticleVectorData_ArticleDetail]') AND parent_object_id = OBJECT_ID(N'[dbo].[ArticleVectorData]'))ALTER TABLE [dbo].[ArticleVectorData] CHECK CONSTRAINT [FK_ArticleVectorData_ArticleDetail]GO

A key to this solution is that the function, used to search the vector data, runs fast because we created the following columstore index when we created the database table.

(See: Vector Similarity Search with Azure SQL database and OpenAI for more information):

CREATE NONCLUSTERED COLUMNSTORE INDEX[ArticleDetailsIdClusteredColumnStoreIndex] ON[dbo].[ArticleVectorData]([ArticleDetailId])WITH (DROP_EXISTING = OFF, COMPRESSION_DELAY = 0) ON [PRIMARY]

Create The DataContext

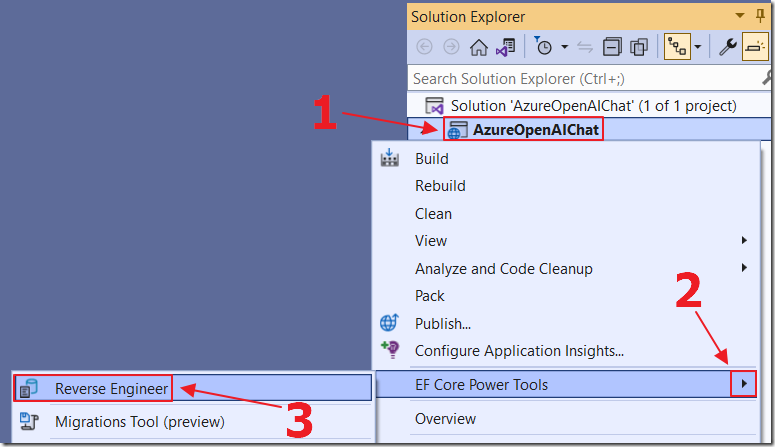

Right-click on the Project node, then select EF Core Power Tools, then Reverse Engineer.

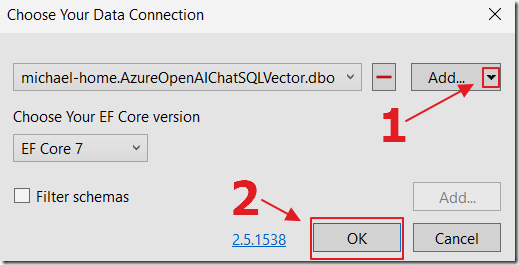

Click Add to open the dialog to add a connection to the database.

Once the database connection is selected, click OK.

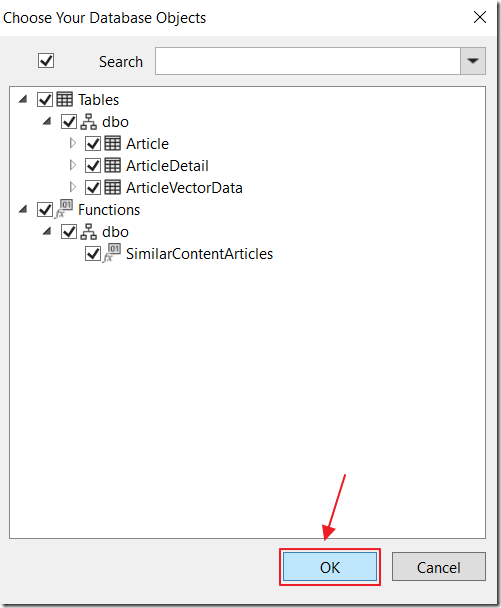

Select all the database objects then click OK.

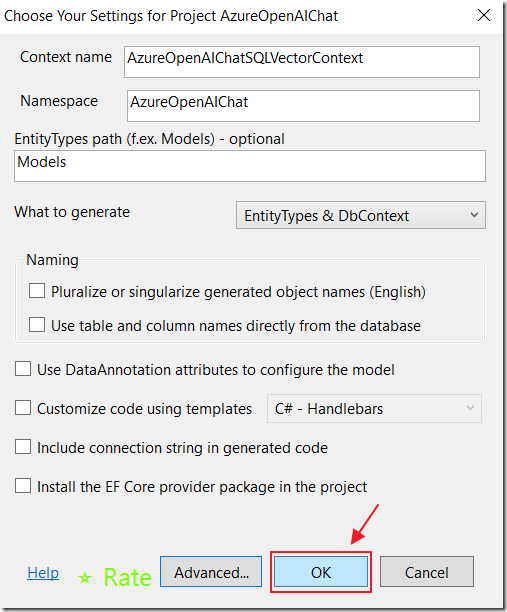

Keep the default values and click OK.

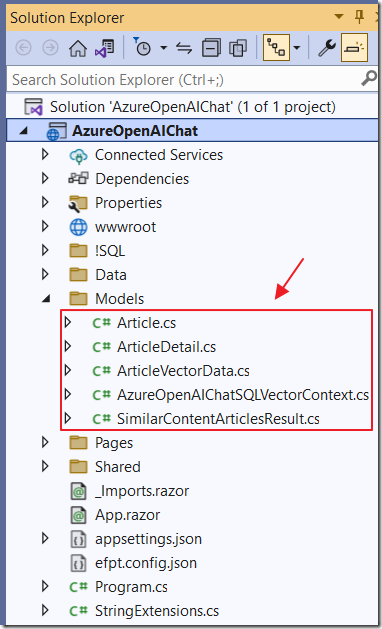

The DataContext and the associated class files will be created.

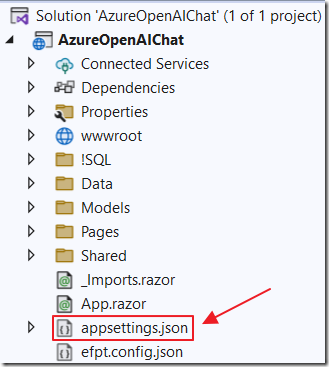

Open the appsettings.json file and add a connection string like the following (replacing the values with values that will connect to your database):

"ConnectionStrings": {"DefaultConnection": "Data Source=(local);initial catalog=AzureOpenAIChatSQLVector;TrustServerCertificate=True;persist security info=True;user id={your database user};password={your password};"}

Finally, add the following lines to the Program.cs file to instantiate the DataContext and to pass it the database connection from appsettings.json:

// Database connectionbuilder.Services.AddDbContext<AzureOpenAIChatSQLVectorContext>(options =>options.UseSqlServer(builder.Configuration.GetConnectionString("DefaultConnection")));

Create The Service

Next we will add the data service and its supporting classes that will be called by the code in the .razor pages.

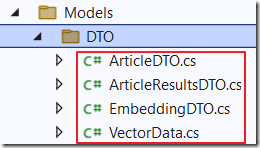

In the Models folder, add a folder called DTO with the following classes:

ArticleDTO.cs

#nullable disablenamespace AzureOpenAIChat.Models.DTO{public class ArticleDTO{public string Title { get; set; }public string Contents { get; set; }}}

ArticleResultsDTO.cs

#nullable disablenamespace AzureOpenAIChat.Models.DTO{public class ArticleResultsDTO{public string Article { get; set; }public int Sequence { get; set; }public string Contents { get; set; }public double Match { get; set; }}}

EmbeddingDTO.cs

#nullable disableusing AzureOpenAIChat;namespace AzureOpenAIChat.Models.DTO{public class EmbeddingDTO{public string Text { get; set; }public float[] Values { get; set; }}}

VectorData.cs

namespace AzureOpenAIChat.Models.DTO;public class VectorData{public double VectorValue { get; set; }}

In the Models folder add a file called AzureOpenAIChatSQLVectorService.cs using the following code:

#nullable disableusing Azure.AI.OpenAI;using AzureOpenAIChat.Models;using Microsoft.EntityFrameworkCore;namespace AzureOpenAIChat.Data{public class AzureOpenAIChatSQLVectorService{private readonly AzureOpenAIChatSQLVectorContext _context;public AzureOpenAIChatSQLVectorService(AzureOpenAIChatSQLVectorContext context){_context = context;}public async Task<List<Article>>GetArticlesAsync(){// Get Articles including ArticleDetailreturn await _context.Article.Include(a => a.ArticleDetail).AsNoTracking().ToListAsync();}public async Task<Article> GetArticleByIdAsync(int id){// Get Article including ArticleDetailreturn await _context.Article.Include(a => a.ArticleDetail).AsNoTracking().FirstOrDefaultAsync(m => m.Id == id);}// Add Articlepublic async Task<Article> AddArticleAsync(Article article){_context.Article.Add(article);await _context.SaveChangesAsync();return article;}// Add ArticleDetailpublic async Task<ArticleDetail> AddArticleDetailAsync(ArticleDetail articleDetail, Embeddings embeddings){_context.ArticleDetail.Add(articleDetail);await _context.SaveChangesAsync();// Get Embedding Vectorsvar EmbeddingVectors =embeddings.Data[0].Embedding.Select(d => (float)d).ToArray();// Instert all Embedding Vectorsfor (int i = 0; i < EmbeddingVectors.Length; i++){var embeddingVector = new ArticleVectorData{ArticleDetailId = articleDetail.Id,VectorValueId = i,VectorValue = EmbeddingVectors[i]};_context.ArticleVectorData.Add(embeddingVector);}await _context.SaveChangesAsync();return articleDetail;}// Delete Articlepublic async Task<Article> DeleteArticleAsync(int id){var article = await _context.Article.FindAsync(id);_context.Article.Remove(article);await _context.SaveChangesAsync();return article;}// GetSimilarContentArticlespublic List<SimilarContentArticlesResult>GetSimilarContentArticles(string vector){return _context.SimilarContentArticles(vector).ToList();}}}

Finally, add the following line to the Program.cs file to register the service:

// Servicebuilder.Services.AddScoped<AzureOpenAIChatSQLVectorService>();

The Data Page

We now want to create the page that will allow data to be added to the database (and the vectors created).

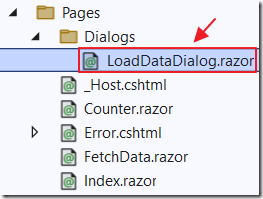

In the Pages folder, add a folder called Dialogs and a page called LoadDataDialog.razor using the following code:

@using AzureOpenAIChat.Models.DTO@inject DialogService DialogService<div class="row"><div class="col-md-12"><RadzenTextBox Placeholder="Article Title" @bind-Value="@article.Title"Style="width:100%" /></div></div><br /><div class="row"><div class="col-md-12"><RadzenTextArea Placeholder="Article Contents" @bind-Value="@article.Contents"Style="height: 300px; width:100%" /></div></div><div class="row"><div class="col-md-12"><RadzenButton Text="Submit" ButtonStyle="ButtonStyle.Success" Click="@Submit" /></div></div>

@code {ArticleDTO article = new ArticleDTO();void Submit(){// Pass the article back to the page that opened this dialogDialogService.Close(article);}}

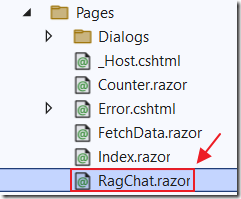

Next, add a page called RagChat.razor using the following code:

@page "/ragchat"@using Azure.AI.OpenAI;@using Azure;@using AzureOpenAIChat.Data;@using AzureOpenAIChat.Models;@using AzureOpenAIChat.Models.DTO;@using AzureOpenAIChat.Pages.Dialogs;@using System.Text.RegularExpressions;@inject IConfiguration _configuration@inject DialogService dialogService@*Using OwningComponentBase ensures that the service and related servicesthat share its scope are disposed with the component.Otherwise DbContext in ForecastService will live for the life of theconnection, which may be problematic if clients stayconnected for a long time.We access WeatherForecastService using @Service*@@inherits OwningComponentBase<AzureOpenAIChatSQLVectorService><PageTitle>RagChat</PageTitle><h1>Data</h1><RadzenButton Text="Load Data" ButtonStyle="ButtonStyle.Success"Click=@OpenLoadDataDialog /><br />@if (Status != ""){<br /><p style="color:red">@Status</p>}<br /><RadzenGrid Data="@articles" TItem="Article"><Columns><RadzenGridColumn TItem="Article"Title=""Width="20%"><Template Context="article"><RadzenButton Text="Delete" Icon="delete"ButtonStyle="ButtonStyle.Danger"Click="@(() => Delete(article))" /></Template></RadzenGridColumn><RadzenGridColumn TItem="Article"Property="ArticleName"Title="Article Name" /><RadzenGridColumn TItem="Article"Property="ArticleDetail.Count"Title="Number of Chunks"Width="20%" /></Columns></RadzenGrid>@code {#nullable disablestring Status = "";private List<Article> articles;string Endpoint = "";string DeploymentOrModelName = "";string Key = "";}

Add the following methods to the page…

This method will retrieve the Azure OpenAI key and model from the appsettings.json file:

// Initialize the component by setting the organization and API keyprotected override void OnInitialized(){// Get the Azure OpenAI Service configuration valuesEndpoint =_configuration["AzureOpenAIServiceOptions:Endpoint"] ?? "";DeploymentOrModelName =_configuration["AzureOpenAIServiceOptions:DeploymentOrModelName"] ?? "";Key =_configuration["AzureOpenAIServiceOptions:Key"] ?? "";}

This method will call the service to get the list of Articles that have been loaded into the database:

protected override async Task OnInitializedAsync(){// Get the articles from the databasearticles = await @Service.GetArticlesAsync();}

This method will open the Dialog (created earlier) and split the contents into chunks of 200 words each and pass the data to Azure OpenAI to get the vectors and store the data in the database:

public async Task OpenLoadDataDialog(){Status = "";try{// Show the Load Data dialogvar result = await dialogService.OpenAsync<LoadDataDialog>($"Load Data",null,new DialogOptions(){Width = "700px",Height = "512px",Resizable = true,Draggable = true});// Get the article from the dialogvar article = (ArticleDTO)result;// Check that article contains dataif ((article.Title.Trim() != "") && (article.Contents.Trim() != "")){// Split the article into chunksvar ArticleChuks = SplitTextIntoChunks(article.Contents.Trim(), 200);// Create Article objectvar objNewArticle = new Article();objNewArticle.ArticleName = article.Title;// Add article to databasevar InsertArticle = await @Service.AddArticleAsync(objNewArticle);// Create a new OpenAIClient object// with the provided API key and EndpointOpenAIClient openAIClient = new OpenAIClient(new Uri(Endpoint),new AzureKeyCredential(Key));// Add article details to databaseforeach (var chunk in ArticleChuks){// Update the statusStatus =$"Creating chunk {ArticleChuks.IndexOf(chunk) + 1} of {ArticleChuks.Count}";StateHasChanged();// Get embeddings for the chunkvar embeddings =openAIClient.GetEmbeddings("text-embedding-ada-002",new EmbeddingsOptions(chunk));// Create ArticleDetail objectvar objNewArticleDetail = new ArticleDetail();objNewArticleDetail.Article = InsertArticle;objNewArticleDetail.ArticleContent = chunk;objNewArticleDetail.ArticleSequence = ArticleChuks.IndexOf(chunk) + 1;// Add article detail to databasevar InsertArticleDetail =await @Service.AddArticleDetailAsync(objNewArticleDetail, embeddings);}// Refresh the gridStatus = "";StateHasChanged();// Get the articles from the databasearticles = await @Service.GetArticlesAsync();// Show a success dialogawait dialogService.Alert("Article added to database","Success",new AlertOptions() { OkButtonText = "Ok" });}}catch (Exception ex){Status = ex.Message;}}

Add the following two methods that will be called by the preceding code:

// The method that splits the text into chunks of a given sizepublic static List<string> SplitTextIntoChunks(string text, int chunkSize){// Initialize an empty list to store the chunksList<string> chunks = new List<string>();// Fix up textvar NormalizedText = NormalizeText(text);// Split the text by whitespace charactersstring[] words = NormalizedText.Split();// Loop through the words and add them to the current chunkstring currentChunk = "";foreach (string word in words){// If adding the word would exceed the chunk size,// add the current chunk to the list and start a new oneif (currentChunk.Length + word.Length + 1 > chunkSize){chunks.Add(currentChunk);currentChunk = "";}// Add the word to the current chunk with a spacecurrentChunk += word + " ";}// Add the last chunk to the list if it is not emptyif (currentChunk != ""){chunks.Add(currentChunk);}// Return the list of chunksreturn chunks;}public static string NormalizeText(string s, string sepToken = " \n "){// Replace multiple whitespace with single space and trims = Regex.Replace(s, @"\s+", " ").Trim();// Remove all instances of ". ,"s = Regex.Replace(s, @". ,", "");// Remove all instances of multiple dotss = s.Replace("..", ".");s = s.Replace(". .", ".");// Remove all instances of multiple commass = s.Replace("\n", "");s = s.Trim();return s;}

Finally, add the following method to allow an article to be deleted from the database:

public async Task Delete(Article article){// Show a custom dialog before deletingvar result = await dialogService.OpenAsync("Delete article", ds =>@<RadzenCard Style="padding: 20px;"><p Style="margin-bottom: 10px;">Are you sure you want to delete this article?</p><div class="row"><div class="col-md-12"><RadzenButton Text="Yes"Click="() => ds.Close(true)"ButtonStyle="ButtonStyle.Danger"Style="margin-bottom: 10px; width: 150px" /><RadzenButton Text="No"Click="() => ds.Close(false)"ButtonStyle="ButtonStyle.Secondary"Style="margin-bottom: 10px; width: 150px" /></div></div></RadzenCard>,new DialogOptions() { Width = "600px", Height = "220px" });if (result){// Delete the article from the databasevar deleteresult = await @Service.DeleteArticleAsync(article.Id);articles = await @Service.GetArticlesAsync();}}

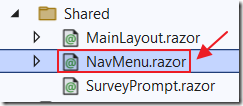

In the Shared folder, open the NavMenu.razor page and use the following code to add a link to the RagChat.razor page:

<div class="nav-item px-3"><NavLink class="nav-link" href="ragchat"><span class="oi oi-briefcase" aria-hidden="true"></span> Data</NavLink></div>

The Index Page

We will now create the page that will be used to chat with the data loaded into the database.

Replace all the code in the Index.razor page with the following code, that will add the using statements and inject the services:

@using Azure;@using Markdig;@using AzureOpenAIChat.Data;@using AzureOpenAIChat.Models;@using AzureOpenAIChat.Models.DTO;@using AzureOpenAIChat.Pages.Dialogs;@using System.Text.RegularExpressions;@inject IJSRuntime _jsRuntime@inject IConfiguration _configuration@inject NotificationService NotificationService@inject DialogService dialogService@*Using OwningComponentBase ensures that the service and related servicesthat share its scope are disposed with the component.Otherwise DbContext in ForecastService will live for the life of theconnection, which may be problematic if clients stayconnected for a long time.We access WeatherForecastService using @Service*@@inherits OwningComponentBase<AzureOpenAIChatSQLVectorService>

Add the following code that will create the UI:

<PageTitle>Index</PageTitle><style>textarea {border: 1px dashed #888;border-radius: 5px;width: 80%;overflow: auto;background: #f7f7f7}/* improved CSS for speech bubbles */.assistant, .user {position: relative;font-family: arial;font-size: 1.1em;border-radius: 10px;padding: 20px;margin-bottom: 20px;}.assistant:after, .user:after {content: '';border: 20px solid transparent;position: absolute;margin-top: -30px;}.user {background: #03a9f4;color: #fff;margin-left: 20%;margin-right: 100px;top: 30%;text-align: right;}.assistant {background: #4CAF50;color: #fff;margin-left: 100px;margin-right: 20%;}.user:after {border-left-color: #03a9f4;border-right: 0;right: -20px;}.assistant:after {border-right-color: #4CAF50;border-left: 0;left: -20px;}.msg {font-size: medium;}</style><h1>Blazor ChatGPT</h1><RadzenTabs RenderMode="TabRenderMode.Client"><Tabs><RadzenTabsItem Text="Chat"><div id="chatcontainer" style="height:550px; width:100%; overflow: scroll;">@foreach (var item in ChatMessages){<div>@if (item.Role == ChatRole.User){<div style="float: right; margin-right: 20px; margin-top: 10px"><b>Human</b></div><div class="@item.Role"><div class="msg">@item.Content</div></div>}@if (item.Role == ChatRole.Assistant){<div style="float: left; margin-left: 20px; margin-top: 10px"><b>ChatGPT </b></div><div class="@item.Role"><div class="msg">@if (item.Content != null){@((MarkupString)item.Content.ToHtml())}</div></div>}</div>}</div>@if (!Processing){<textarea rows="3" @bind="prompt" /><br /><button class="btn btn-primary"@onclick="CallSearchData">Call ChatGPT</button><span> </span><button class="btn btn-info"@onclick="RestartChatGPT">Restart</button>}else{<br><h4>Processing...</h4>}</RadzenTabsItem><RadzenTabsItem Text="Search Results"><RadzenDataGrid Data="@similarities" TItem="ArticleResultsDTO"AllowFiltering="true" AllowSorting="true" AllowPaging="true"><Columns><RadzenDataGridColumn TItem="ArticleResultsDTO"Property="Article" Title="Article" Width="30%"></RadzenDataGridColumn><RadzenDataGridColumn TItem="ArticleResultsDTO"Property="Sequence" Title="#" Width="10%"></RadzenDataGridColumn><RadzenDataGridColumn TItem="ArticleResultsDTO"Property="Contents" Title="Content"><Template Context="data"><p style="white-space:pre-wrap">@data.Contents</p></Template></RadzenDataGridColumn><RadzenDataGridColumn TItem="ArticleResultsDTO"Property="Match" Title="%"FormatString="{0:P}" Width="10%"></RadzenDataGridColumn></Columns></RadzenDataGrid></RadzenTabsItem></Tabs></RadzenTabs><br /><p style="color:red">@ErrorMessage</p>

Add the code block to hold the procedural code:

@code {string Endpoint = "";string DeploymentOrModelName = "";string Key = "";List<ChatMessage> ChatMessages = new List<ChatMessage>();string prompt = "";string ErrorMessage = "";bool Processing = false;// Declare an embedding collection and a list to store similaritiesList<ArticleResultsDTO> similarities = new List<ArticleResultsDTO>();}

Add these remaining methods inside the procedural code block.

Add the following method that will retrieve the Azure OpenAI key and model from the appsettings.json file:

protected override void OnInitialized(){// Get the Azure OpenAI Service configuration valuesEndpoint =_configuration["AzureOpenAIServiceOptions:Endpoint"] ?? "";DeploymentOrModelName =_configuration["AzureOpenAIServiceOptions:DeploymentOrModelName"] ?? "";Key =_configuration["AzureOpenAIServiceOptions:Key"] ?? "";RestartChatGPT();}

Next add the OnAfterRenderAsync method that will call the ScrollToBottom JavaScript method that is contained on the _Host.cshtml page:

protected override async TaskOnAfterRenderAsync(bool firstRender){try{await _jsRuntime.InvokeAsync<string>("ScrollToBottom", "chatcontainer");}catch{// do nothing if this fails}}

Add the following method that will be raised when the Restart button is clicked:

void RestartChatGPT(){ErrorMessage = "";prompt = "How can I bring my own data to Azure OpenAI?";// Create a new list of ChatPrompt objects and initialize it with the// system's introductory messageChatMessages = new List<ChatMessage>();string SystemMessage = "You are helpful Assistant.";SystemMessage += "You will always reply with a Markdown formatted response.";ChatMessages.Add(new ChatMessage(ChatRole.System,SystemMessage));StateHasChanged();}

Add the method that will create and embedding of the search prompt and then compare its vectors against the vectors stored in the database:

async Task CallSearchData(){// Set the in-progress flag to trueProcessing = true;// Notify the framework that the state has changed and the UI should be updatedStateHasChanged();try{similarities.Clear();// Create a new OpenAIClient object// with the provided API key and EndpointOpenAIClient openAIClient = new OpenAIClient(new Uri(Endpoint),new AzureKeyCredential(Key));// Get embeddings for the search textvar SearchEmbedding =openAIClient.GetEmbeddings("text-embedding-ada-002",new EmbeddingsOptions(prompt));// Get embeddings as an array of floatsvar EmbeddingVectors =SearchEmbedding.Value.Data[0].Embedding.Select(d => (float)d).ToArray();// Loop through the embeddingsList<VectorData> AllVectors = new List<VectorData>();for (int i = 0; i < EmbeddingVectors.Length; i++){var embeddingVector = new VectorData{VectorValue = EmbeddingVectors[i]};AllVectors.Add(embeddingVector);}// Convert the floats to a single string to pass to the functionvar VectorsForSearchText ="[" + string.Join(",", AllVectors.Select(x => x.VectorValue)) + "]";// Call the SQL function to get the similar content articlesvar SimularContentArticles =@Service.GetSimilarContentArticles(VectorsForSearchText);// Loop through SimularContentArticlesforeach (var Article in SimularContentArticles){// Add to similarities collectionsimilarities.Add(new ArticleResultsDTO(){Article = Article.ArticleName,Sequence = Article.ArticleSequence,Contents = Article.ArticleContent,Match = Article.cosine_distance ?? 0});}// Sort the results by similarity in descending ordersimilarities.Sort((a, b) => b.Match.CompareTo(a.Match));// Take the top 10 resultssimilarities = similarities.Take(10).ToList();// Sort by the first colum then the second columnsimilarities.Sort((a, b) => a.Sequence.CompareTo(b.Sequence));similarities.Sort((a, b) => a.Article.CompareTo(b.Article));// Call Azure OpenAI APIawait CallChatGPT();}catch (Exception ex){// Create an error notification messagevar Notification = new NotificationMessage(){Severity = NotificationSeverity.Error,Summary = "Error",Detail = ex.Message,Duration = 40000};// Show the notificationNotificationService.Notify(Notification);// Set the in-progress flag to falseProcessing = false;// Notify the framework that the state has changed// and the UI should be updatedStateHasChanged();}}

The preceding method calls the following method to call the Azure OpenAI service:

async Task CallChatGPT(){// Set the in-progress flag to trueProcessing = true;// Notify the framework that the state has changed and the UI should be updatedStateHasChanged();try{// Concatonate colum 3 from similarities collection into Knowledgestring ExistingKnowledge = "";foreach (var item in similarities){ExistingKnowledge +=$"#Article: {item.Article} #Article Contents: {item.Contents}";}// This model's maximum context length is 8192 tokens// Remove old chat messages to avoid exceeding the limitRemoveOldChatMessags();// Create a new OpenAIClient object// with the provided API key and EndpointOpenAIClient client = new OpenAIClient(new Uri(Endpoint),new AzureKeyCredential(Key));// Add the new message to chatMessagesChatMessages.Add(new ChatMessage(ChatRole.User, prompt));// Add the existing knowledge to the chatMessages listChatMessages.Add(new ChatMessage(ChatRole.System, GetInstruction(ExistingKnowledge)));// Create a new ChatCompletionsOptions objectvar chatCompletionsOptions = new ChatCompletionsOptions(){Temperature = (float)0.0,MaxTokens = 2000,NucleusSamplingFactor = (float)1.00,FrequencyPenalty = 0,PresencePenalty = 0,};// Add the Chat messages to the chatCompletionsOptions objectforeach (var message in ChatMessages){chatCompletionsOptions.Messages.Add(message);}// Call the GetChatCompletionsAsync methodResponse<ChatCompletions> responseWithoutStream =await client.GetChatCompletionsAsync(DeploymentOrModelName,chatCompletionsOptions);// Get the ChatCompletions object from the responseChatCompletions result = responseWithoutStream.Value;// Create a new Message object with the response and other details// and add it to the messages listvar choice = result.Choices.FirstOrDefault();if (choice != null){if (choice.Message != null){ChatMessages.Add(choice.Message);}}}catch (Exception ex){// Create an error notification messagevar Notification = new NotificationMessage(){Severity = NotificationSeverity.Error,Summary = "Error",Detail = ex.Message,Duration = 40000};// Show the notificationNotificationService.Notify(Notification);}// Set the in-progress flag to falseProcessing = false;// Clear the promptprompt = "";// Notify the framework that the state has changed// and the UI should be updatedStateHasChanged();}

The CallChatGPT method calls the following method to remove older chat messages so that we don’t exceed the number of tokens that the model allows:

int CurrentWordCount = 0;private void RemoveOldChatMessags(){// Copy current chat messages to a new listvar CopyOfChatMessages = new List<ChatMessage>(ChatMessages);// Clear the chat messagesChatMessages = new List<ChatMessage>();// Create a new LinkedList of ChatMessagesLinkedList<ChatMessage> ChatPromptsLinkedList = new LinkedList<ChatMessage>();// Loop through the ChatMessages and add them to the LinkedListforeach (var item in CopyOfChatMessages){ChatPromptsLinkedList.AddLast(item);}// Set the current word count to 0CurrentWordCount = 0;// Reverse the chat messages to start from the most recent messagesforeach (var chat in ChatPromptsLinkedList.Reverse()){if (chat.Content != null){int promptWordCount = chat.Content.Split(new char[] { ' ', '\t', '\n', '\r' },StringSplitOptions.RemoveEmptyEntries).Length;if (CurrentWordCount + promptWordCount >= 1000){// This message would cause the total to exceed 1000 words,// so break out of the loopbreak;}// Add to ChatMessagesChatMessages.Insert(0, chat);// Update the current word countCurrentWordCount += promptWordCount;}}}

The Prompt

The key to the RAG pattern is constructing a prompt that takes in the output from the vector search and uses it as grounding.

When constructing any prompt, the following is recommended:

- Explain what you want

- Explain what you don't want

- Provide examples

This is the code, called by the CallChatGPT method, that will take in the grounding information retrieved from the database (using a vector), and construct the prompt sent to the Azure OpenAI service:

public string GetInstruction(string Knowledge){string instruction = $@"Answer questions using the given knowledge ONLY.For tabular information return it as an HTML table.Always return markdown format.Each knowledge has a #Article: source name and an #Article Contents: with the actual informationDo not return the ##Knowledge: section only return the ##Answer: sectionAlways include the source name for each knowledge you use in the answer.Don't cite knowledge that is not available in the knowledge list.If you cannot answer using the knowledge list only, say you don't know.You have this knowledge available: {Knowledge}### EXAMPLE 1Question: 'What Microsoft Blazor?'##Knowledge:#Article: Blazor One #Article Contents: Blazor allows you to build web apps.#Article: Blazor One #Article Contents: Both client and server code is written in C#, allowing you to share code and libraries.#Article: Blazor Two #Article Contents: It uses C# instead of JavaScript allowing you to share code and libraries.##Answer:Blazor apps are composed of reusable web UI components implemented using C#, HTML, and CSS.Both client and server code is written in C#, allowing you to share code and libraries.References: [Blazor One], [Blazor Two]### EXAMPLE 2Question: 'What happens in a performance review'##Knowledge:##Answer:I don't know###";return instruction.Trim();}

Links

What Is Azure OpenAI And Why Would You Want To Use It?

Bring Your Own Data to Azure OpenAI

Creating A Blazor Chat Application With Azure OpenAI

Download

The project is available on the Downloads page on this site.

You must have Visual Studio 2022 (or higher) installed to run the code.