7/3/2023 Admin

What Is Azure OpenAI And Why Would You Want To Use It?

Would you like to create an application that can understand natural human language and respond in kind? Generative AI models can provide this ability. They are artificial intelligence models that generate a variety of outputs, such as text, code, and images, using deep learning neural network architectures trained on massive datasets. OpenAI, an AI research company, creates generative AI models that produce this kind of content from natural language prompts.

Azure OpenAI is a service provided by Microsoft that allows businesses and developers to integrate OpenAI’s language and computer vision models into their own cloud apps. The service provides high-performance AI models at production scale with industry-leading uptime.

Azure OpenAI powers several Microsoft apps and experiences, including GitHub Copilot, Power BI, and Microsoft Designer. The service is available through REST APIs and through SDKs for C#, JavaScript, Java, and Python. Azure OpenAI also offers private networking, regional availability, and responsible AI content filtering.

Exploring Azure OpenAI

In this article, we will show you how to get started with Azure OpenAI by applying for access and setting it up. Next, we will explore Azure OpenAI Studio and show you how to deploy a model. We will also try out completions and chat in the playground. Finally, we will show you how to get the endpoint and key you need to build your own applications with Azure OpenAI.

Set Up Azure OpenAI

The first requirement is to have a Microsoft Azure account. If you don’t already have one, you can get one at: https://azure.microsoft.com/

(Note: this also requires a Microsoft account. If you don’t have one, get one here: https://signup.live.com/)

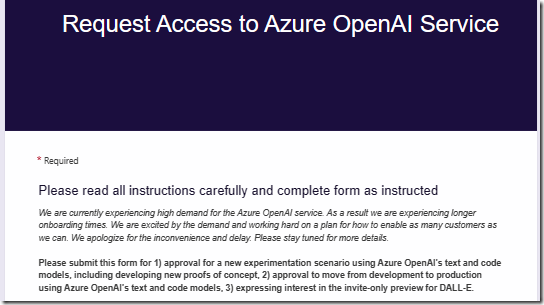

After you have created a Microsoft Azure account, you can apply for access to Azure OpenAI Service at: https://aka.ms/oai/access.


Once you are approved (you will receive an email), go to portal.azure.com and select Create a resource.

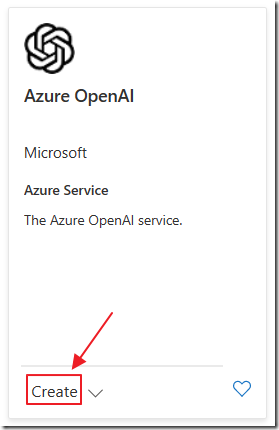

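Search for the Azure OpenAI service and click Create. Fill in the required details (subscription, resource group, region, name, and pricing tier) and complete the wizard to create the resource.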

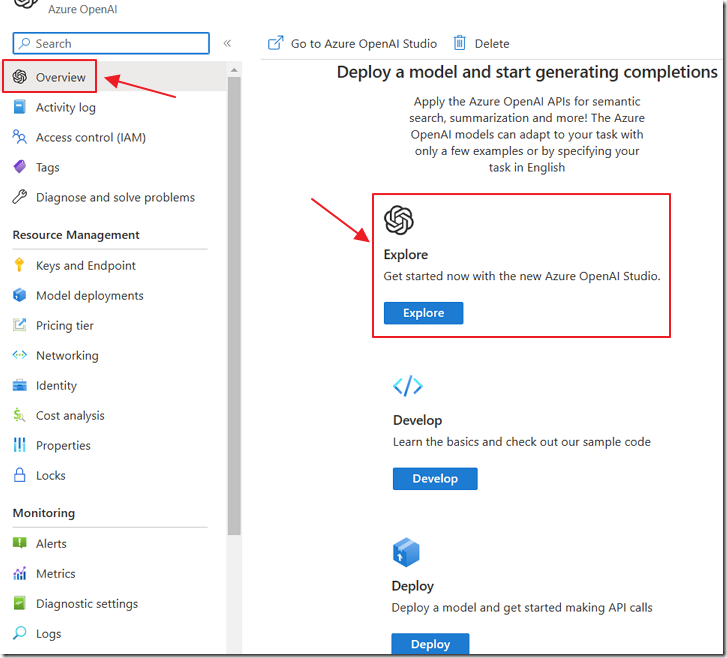

After the resource is created, open it and, in the Overview section, click the Explore button.

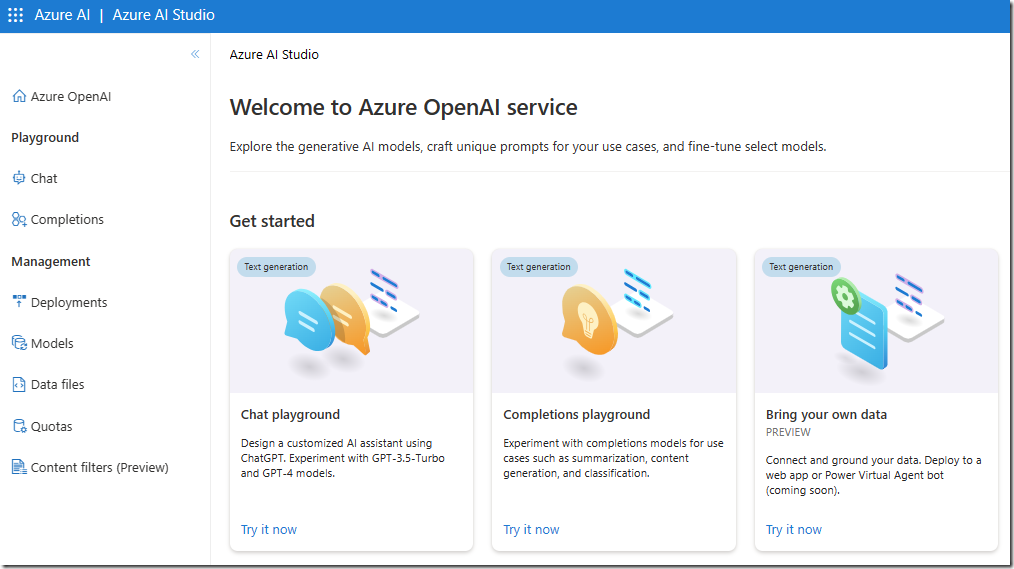

This will take you to the landing page of Azure OpenAI Studio, which provides documentation and code examples.

Note: You can also access Azure OpenAI Studio directly at: https://oai.azure.com.

When you go directly to the studio, you may be asked to select your Azure directory, subscription, and Azure OpenAI resource.

Deploy a Model

To begin using Azure OpenAI, you need to choose an OpenAI model and deploy it. An OpenAI model is a pre-trained machine learning model that can perform specific tasks such as natural language processing or image recognition. Microsoft provides these OpenAI base models, as well as the option to create customized models based on them.

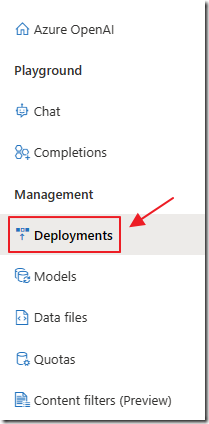

In the Management section, select Deployments.


Select Create new deployment.

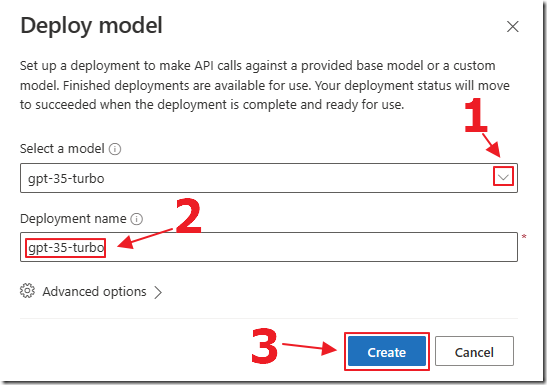

For this tutorial, select the gpt-35-turbo model from the Select a model drop-down.

Enter a deployment name to help you identify the model. Note that the deployment name will be used in your code to call the model.

For your first deployment, leave the Advanced options set to their defaults. Later, you can assign a content filter to your deployment and adjust its Tokens per Minute (TPM) quota.

Completions

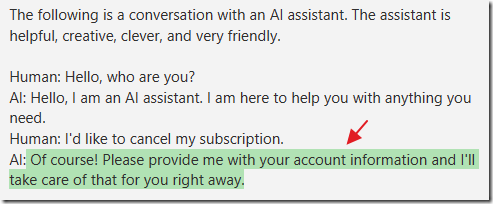

Completions allow you to generate text using the selected machine learning model. You provide a prompt (the start of the text) and the model generates a completion (the rest of the text). You can use this for a variety of tasks, such as generating product descriptions, writing emails, or even creating entire articles.
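To make the prompt/completion split concrete, here is a minimal Python sketch of the body a completions request sends and of how the generated text comes back. It assumes the REST field names `prompt` and `max_tokens` and the response shape `choices[0].text`; the helper names are hypothetical, and the response is hand-written for illustration, not real model output.

```python
import json


def build_completion_body(prompt: str, max_tokens: int = 60) -> dict:
    """Request body for a completions call (assumed REST field names)."""
    # "prompt" is the start of the text; "max_tokens" caps how much the model adds.
    return {"prompt": prompt, "max_tokens": max_tokens}


def extract_completion(response_json: str) -> str:
    """Pull the generated text out of a completions response."""
    response = json.loads(response_json)
    return response["choices"][0]["text"]


prompt = "Write a one-line product description for a steel water bottle:"
body = build_completion_body(prompt)
print(json.dumps(body))

# Hand-written stand-in for a service response (not real model output).
fake_response = json.dumps(
    {"choices": [{"index": 0, "text": " Keeps drinks cold for 24 hours.",
                  "finish_reason": "stop"}]}
)
completion = extract_completion(fake_response)
print(prompt + completion)  # the prompt followed by the model's continuation
```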

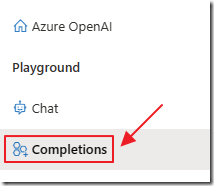

In the Playground section, select Completions.

This will open the Completions playground.

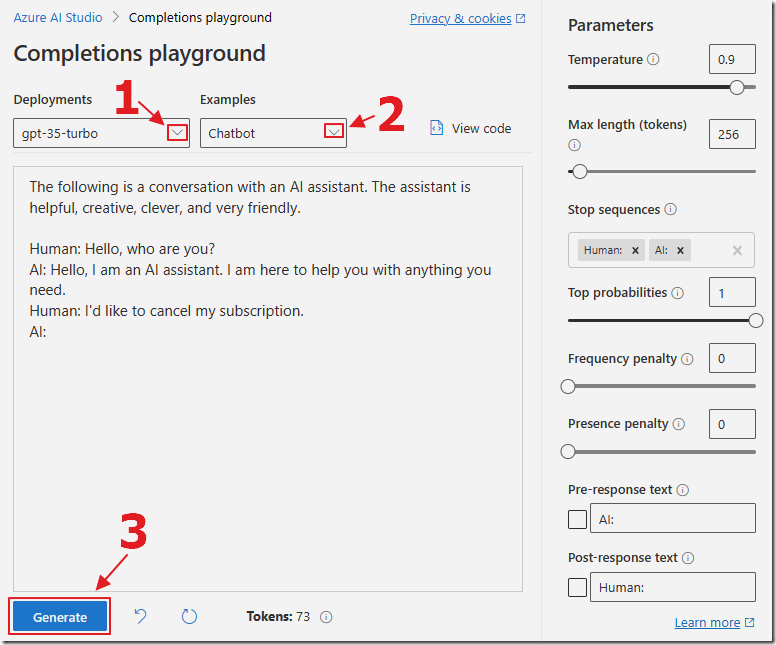

- In the Deployments dropdown, select your gpt-35-turbo deployment.

- In the Examples dropdown, select Chatbot.

This fills in a sample prompt that will be sent to the model.

The prompt is like a question you ask the model. It helps the model understand what you want it to do.

Press the Generate button.

Note: Ignore any warning that may appear about using or deploying another model.

The model will complete the prompt based on the instructions and the sample text.

Anatomy of a Prompt

When constructing any prompt, the following is recommended:

- Explain what you want

- Explain what you don't want

- Provide examples
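As a minimal sketch, the three recommendations above can be assembled into a single prompt string. The `build_prompt` helper, the Q:/A: layout, and the return-policy content are all hypothetical, chosen only for illustration.

```python
def build_prompt(want: str, dont_want: str,
                 examples: list[tuple[str, str]], query: str) -> str:
    """Combine instructions, restrictions, and examples into one prompt."""
    lines = [want, dont_want, ""]
    for question, answer in examples:
        # Each example shows the model the exact format we expect back.
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
        lines.append("")
    lines.append(f"Q: {query}")
    lines.append("A:")  # leave the answer open for the model to complete
    return "\n".join(lines)


prompt = build_prompt(
    want="Answer questions about our store's return policy in one short sentence.",
    dont_want="Do not mention prices and do not promise refunds.",
    examples=[("Can I return opened items?",
               "Yes, within 30 days if you have the receipt.")],
    query="Can I return a gift?",
)
print(prompt)
```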

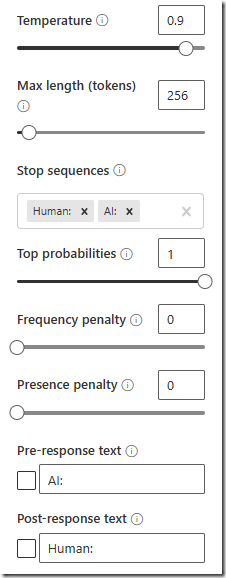

Model Parameters

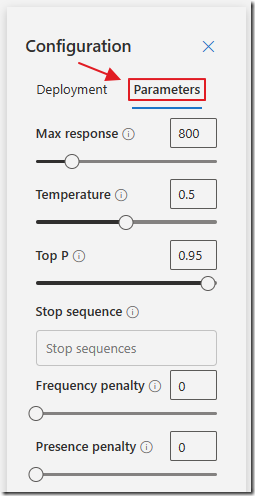

When passing a prompt to the model, there are several parameters that you can adjust:

- Temperature – Controls how random, or “creative,” the model’s output is. If you set it high, the model is more likely to come up with unusual ideas. If you set it low, the model sticks to more predictable, common ideas.

- Max length (tokens) – Limits how much the model can write. It’s like telling someone to write a story in 16 sentences or less. If the response reaches the limit, it is cut off, so the model might not finish its thought. The limit is expressed in tokens. A token can be a whole word or just a chunk of characters. For example, the word “hamburger” gets broken up into the tokens “ham”, “bur” and “ger”, while a short and common word like “pear” is a single token.

- Stop sequences – Lets you specify text that makes the model stop writing as soon as it produces that text in its response.

- Top probabilities – This is another way of controlling how creative the model is allowed to be. The model only chooses from the most likely next tokens whose combined probability adds up to this value. It’s like telling someone to only use the words that make up the top 10% of what they would most likely say next. If you set it high, the model might use more unusual words. If you set it low, the model sticks to the most likely words. It is generally recommended to adjust either Temperature or Top probabilities, but not both.

- Frequency penalty – Tells the model how much it should avoid repeating itself. The more often a token has already appeared, the more its chance of appearing again is reduced. If the value is high, the model is less likely to repeat the same words. If the value is low, the model is more likely to repeat itself.

- Presence penalty – Reduces the chance of repeating any token that has already appeared in the text at all, no matter how often. If the value is high, the model is more likely to move on to new topics. If the value is low, the model is more likely to stay on topics it has already covered.

The following additional options were not enabled in the example:

- Pre-response text – Inserts text after the user’s input and before the model’s response. This can help prepare the model for a response.

- Post-response text – Inserts text after the model’s response. This can encourage further user input, as when modeling a conversation.
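Here is a minimal sketch of how pre-response and post-response text fit around a conversation, assuming the playground simply concatenates them into the prompt text. The helper names and the `Human:`/`AI:` labels are hypothetical, and the model reply is hand-written.

```python
def build_turn_prompt(history: str, user_input: str, pre_response: str) -> str:
    """Pre-response text goes after the user's input, where the model starts writing."""
    return history + user_input + pre_response


def record_response(turn_prompt: str, model_response: str, post_response: str) -> str:
    """Post-response text goes after the model's reply, cueing the next user turn."""
    return turn_prompt + model_response + post_response


history = "The following is a conversation with an AI assistant.\nHuman: "
pre_response = "\nAI:"
post_response = "\nHuman: "

turn = build_turn_prompt(history, "Hello, who are you?", pre_response)
# Hand-written stand-in for the model's reply (not real model output).
history = record_response(turn, " I am an AI assistant. How can I help?", post_response)
print(history)
```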

More on Tokens

Azure OpenAI charges you based on tokens: both the tokens you pass to the model in the prompt and the tokens the model generates in its response. The price per token depends on the model you use.

You can see the pricing here: https://azure.microsoft.com/en-us/pricing/details/cognitive-services/openai-service/
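Because billing is per token, you can estimate the cost of a request with simple arithmetic. This sketch assumes prices quoted per 1,000 tokens, with separate rates for prompt and completion tokens; the prices below are placeholders, not current Azure prices.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  prompt_price_per_1k: float,
                  completion_price_per_1k: float) -> float:
    """Estimated charge for one request, given per-1,000-token prices."""
    prompt_cost = (prompt_tokens / 1000) * prompt_price_per_1k
    completion_cost = (completion_tokens / 1000) * completion_price_per_1k
    return prompt_cost + completion_cost


# Placeholder prices only: look up the real ones on the pricing page.
cost = estimate_cost(1500, 500, prompt_price_per_1k=0.002,
                     completion_price_per_1k=0.002)
print(f"Estimated cost: ${cost:.4f}")
```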

Tokens are the basic units that OpenAI models use to understand and work with text. In the context of language models, each token is mapped to a vector (an array of numbers), often called an embedding, which the model learns during training to capture the token’s meaning and its relationships with other tokens. These vectors are numerical representations of the tokens.

When the model processes text, it converts the tokens into these numerical vectors. This allows the model to perform mathematical calculations, such as comparing the relationships between words. To generate a response, the model predicts the next token one step at a time, and those tokens are then converted back into human-readable text.
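The toy sketch below illustrates the two ideas in this section: splitting text into tokens and comparing tokens as vectors. The vocabulary and the 3-dimensional vectors are made up, and the greedy longest-match tokenizer is a simplified stand-in; real OpenAI models use a byte-pair encoding tokenizer and learned vectors with far more dimensions.

```python
import math

# Made-up vocabulary for illustration only.
VOCAB = {"ham", "bur", "ger", "pear", "apple"}

# Made-up 3-dimensional vectors; real models learn much larger ones.
EMBEDDINGS = {
    "pear": [0.9, 0.1, 0.0],
    "apple": [0.8, 0.2, 0.1],
    "ham": [0.1, 0.9, 0.2],
}


def tokenize(word: str) -> list[str]:
    """Greedy longest-match split of a word into vocabulary pieces."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest remaining piece first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in VOCAB:
                tokens.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no token matches {word[i:]!r}")
    return tokens


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means the vectors point the same way; near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(tokenize("hamburger"))  # ['ham', 'bur', 'ger']
print(tokenize("pear"))       # ['pear']
print(cosine_similarity(EMBEDDINGS["pear"], EMBEDDINGS["apple"]))
print(cosine_similarity(EMBEDDINGS["pear"], EMBEDDINGS["ham"]))
```

With these made-up vectors, “pear” comes out far more similar to “apple” than to “ham”, which is the kind of relationship a trained model encodes.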

Chat

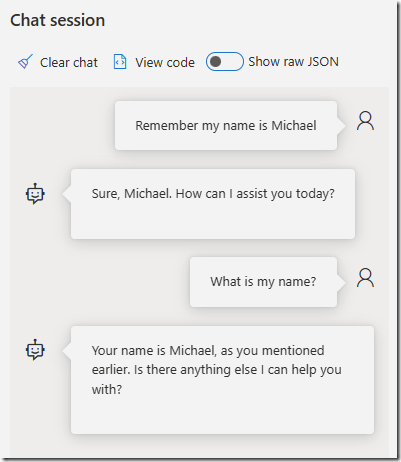

In a normal human conversation, things discussed earlier are remembered, so there is no need to repeat them. Azure OpenAI has a chat mode that mimics this.

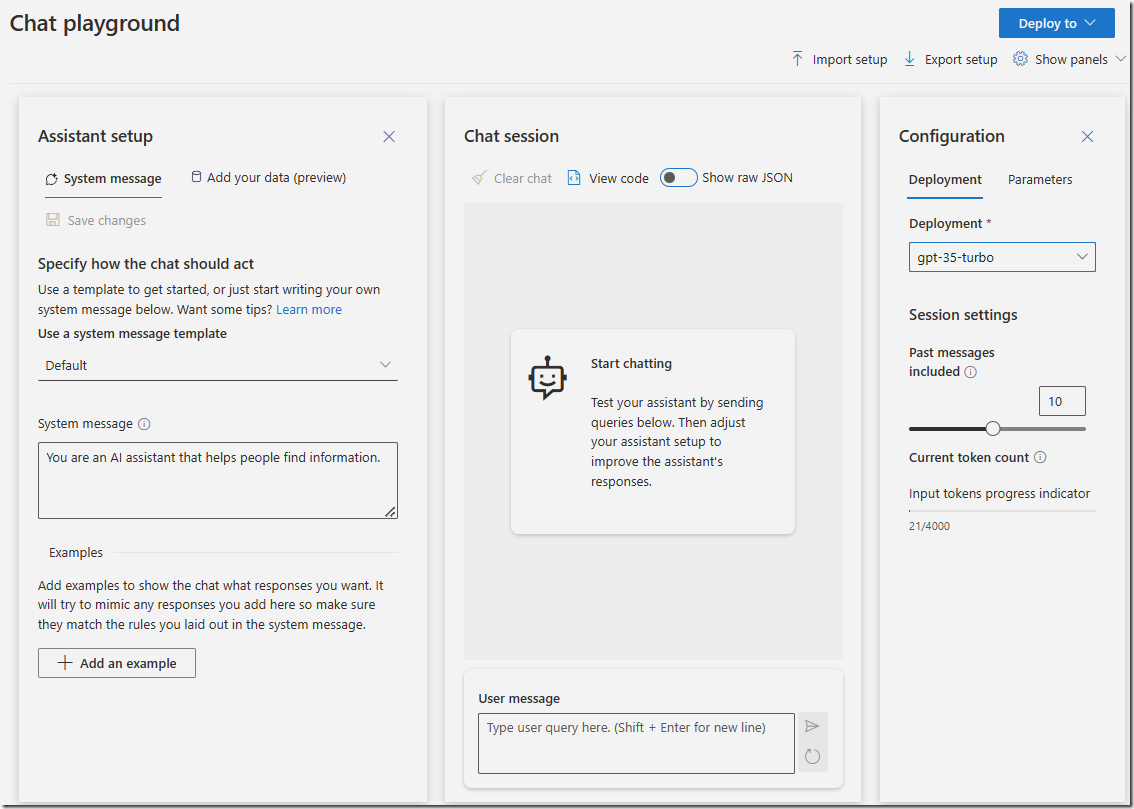

In the Playground section, select Chat.

In the Chat playground, you can set a System message that defines the chat settings and context. The assistant will try to follow the tone and any rules you give in your system message.

You can also click the Add an example button to add some few-shot examples to help the assistant learn what to do. Few-shot means providing a few examples to illustrate how the user and the assistant should talk. This is different from zero-shot, which means giving no examples at all.
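In API terms, the system message and few-shot examples become the first entries of a `messages` list. This sketch assumes the chat completions message format, where each message has a `role` (`system`, `user`, or `assistant`) and `content`; the `build_messages` helper and the sample text are hypothetical.

```python
def build_messages(system_message: str,
                   examples: list[tuple[str, str]],
                   user_prompt: str) -> list[dict]:
    """Assemble chat messages: system message, few-shot pairs, then the new prompt."""
    messages = [{"role": "system", "content": system_message}]
    for user_text, assistant_text in examples:
        # Each few-shot example is a user turn followed by the ideal reply.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages(
    system_message="You are a friendly assistant that answers in one sentence.",
    examples=[("What is Azure?", "Azure is Microsoft's cloud computing platform.")],
    user_prompt="What is Azure OpenAI?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Passing an empty `examples` list produces a zero-shot request: just the system message and the user’s prompt.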

You can then enter prompts as you did with completions. However, in chat mode, the earlier messages in the conversation are sent to the model along with each new prompt, so the model can use that information in its responses.

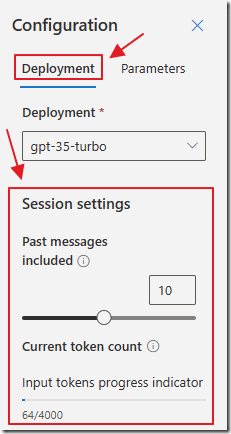

However, this memory is limited: as the conversation continues, the model will eventually forget earlier information. The amount of memory is measured in tokens. You can control the number of past messages retained and view the current token count in the Configuration section on the Deployment tab.
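The limited memory can be sketched as trimming the message list before each request. This sketch assumes the first message is the system message, keeps only the most recent past messages, and then drops the oldest ones until an estimated token count fits a budget. The four-characters-per-token estimate is only a rough rule of thumb for English text; a real application would count tokens with the model’s tokenizer. The function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], max_past_messages: int,
                 token_budget: int) -> list[dict]:
    """Keep the system message plus as much recent history as fits."""
    system, history = messages[0], messages[1:]
    # Keep only the most recent N messages (like a "past messages" setting).
    history = history[-max_past_messages:] if max_past_messages > 0 else []

    def total_tokens(msgs: list[dict]) -> int:
        return sum(estimate_tokens(m["content"]) for m in msgs)

    # Forget the oldest messages first until the estimate fits the budget.
    while history and total_tokens([system] + history) > token_budget:
        history.pop(0)
    return [system] + history


conversation = [{"role": "system", "content": "s" * 40}] + [
    {"role": "user" if i % 2 == 0 else "assistant", "content": "x" * 40}
    for i in range(6)
]
trimmed = trim_history(conversation, max_past_messages=4, token_budget=30)
print(len(trimmed))  # 3: the system message plus the two most recent messages
```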

You can also set the prompt parameters, as you could in the completions section, by selecting Parameters in the Configuration section.

Note: Chat mode only works with models whose names begin with gpt-.

Creating Your Own Applications

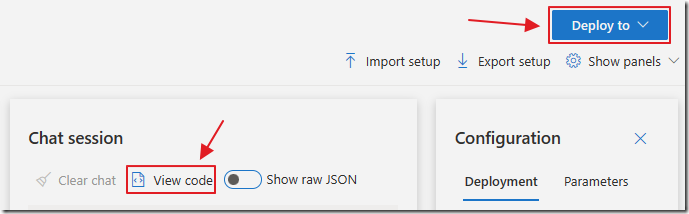

You can create your own applications that call the Azure OpenAI service through the REST API or the SDKs. To get started, either select the Deploy to dropdown, which opens a deployment wizard, or click the View code button.

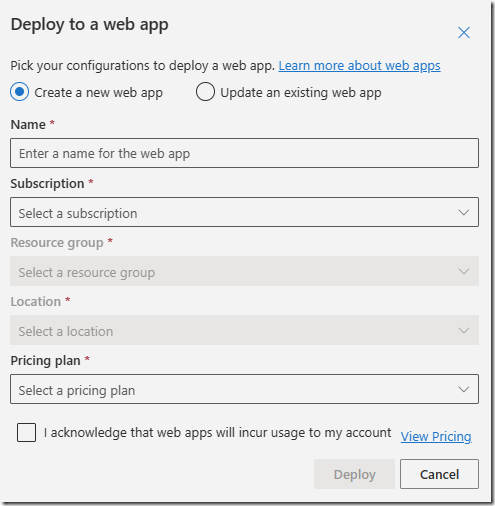

If you select the Deploy to dropdown, a dialog appears that lets you specify the settings for deploying to an Azure web app.

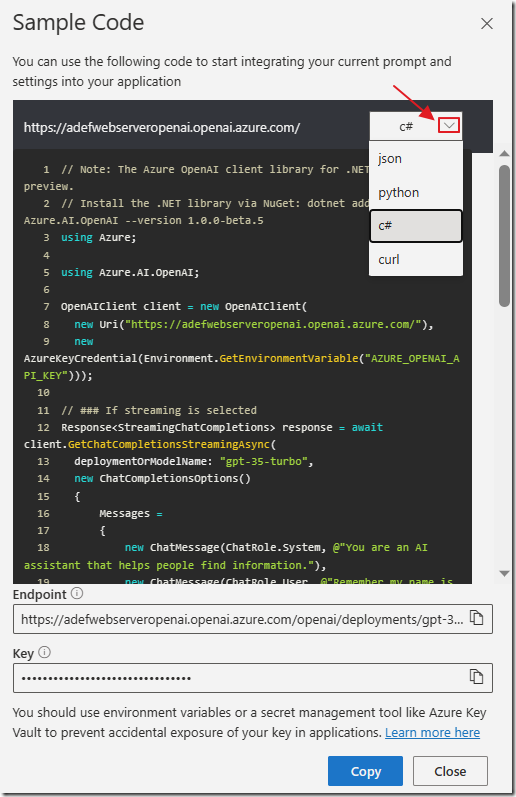

If you click the View code button, a dialog opens that lets you select a language. After you select a language, sample code appears along with instructions on how to use it. The endpoint and key that the code requires appear at the bottom of the dialog.
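Once you have the endpoint and key from the View code dialog, a call to a chat deployment can be sketched with only the Python standard library. This assumes the REST endpoint format `{endpoint}/openai/deployments/{deployment}/chat/completions?api-version=...`, an `api-key` header, and the `2023-05-15` API version. The environment variable names `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_KEY` and the deployment name `my-gpt35` are hypothetical. Treat the code that the View code dialog generates as the authoritative version.

```python
import json
import os
import urllib.request

# Assumption: use whichever api-version the View code dialog shows.
API_VERSION = "2023-05-15"


def build_chat_request(endpoint: str, api_key: str, deployment: str,
                       messages: list[dict], **params) -> urllib.request.Request:
    """Build (but do not send) a POST request to a chat deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={API_VERSION}")
    body = json.dumps({"messages": messages, **params}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    endpoint = os.environ.get("AZURE_OPENAI_ENDPOINT")  # hypothetical variable name
    api_key = os.environ.get("AZURE_OPENAI_KEY")        # hypothetical variable name
    if endpoint and api_key:
        request = build_chat_request(
            endpoint, api_key, "my-gpt35",  # hypothetical deployment name
            [{"role": "user", "content": "Hello!"}],
            temperature=0.7, max_tokens=100,
        )
        print(send(request))
    else:
        print("Set AZURE_OPENAI_ENDPOINT and AZURE_OPENAI_KEY to send a real request.")
```

The script only sends a request when both environment variables are set. Keeping the key in an environment variable rather than in source code also avoids accidentally committing it.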

Links

Get started with Azure OpenAI Service

Build natural language solutions with Azure OpenAI Service

Apply prompt engineering with Azure OpenAI service