6/25/2023 Admin

OpenAI Functions Calling the Poor Developer's Vector Database

ChatGPT is a state-of-the-art conversational AI that can handle a wide variety of tasks, but its knowledge is limited to the data it was trained on. To access external sources of information, ChatGPT requires a plug-in, and plug-ins are not available when you call ChatGPT through the API from your own application.

This poses a challenge if you want ChatGPT to answer questions using your own private data. How can you make ChatGPT respond with information that is relevant to your specific needs?

The solution is to use Functions.

RAG Pattern vs. Functions

As described in the article Use a Poor Developers Vector Database to Implement The RAG Pattern, the Retrieval Augmented Generation (RAG) pattern is a technique for building natural language generation systems that can retrieve and use relevant information from external sources.

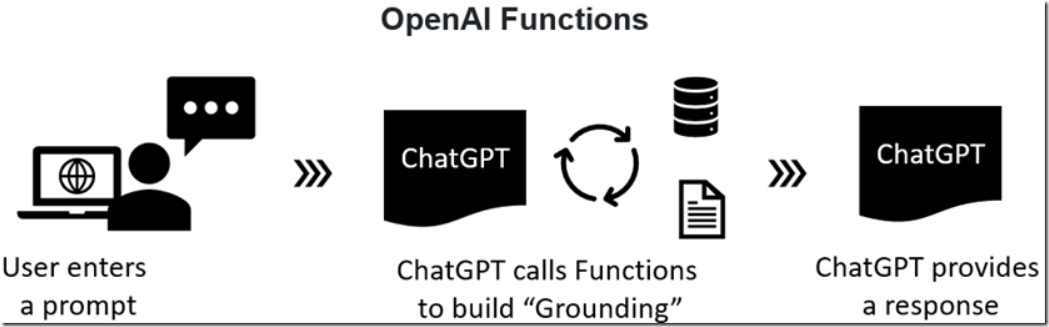

The concept is to first retrieve a set of passages related to the search query, then use them to ground the prompt, and finally generate a natural language response that incorporates the retrieved information.

OpenAI Functions can achieve the same results, but rather than your code pushing the grounding into the prompt, ChatGPT calls functions to pull in the grounding information it needs.
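To make the "push" side of RAG concrete, here is a minimal sketch of how retrieved passages might be combined with the user's question into a single grounded prompt. The class and method names (`RagPromptSketch`, `BuildGroundedPrompt`) and the prompt wording are hypothetical illustrations, not code from the sample application.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class RagPromptSketch
{
    // Hypothetical helper: combines retrieved passages with the user's
    // question into one grounded prompt (the "push" approach of RAG).
    public static string BuildGroundedPrompt(string question, IReadOnlyList<string> passages)
    {
        var sb = new StringBuilder();
        sb.AppendLine("Answer the question using only the information below.");
        sb.AppendLine();
        for (int i = 0; i < passages.Count; i++)
        {
            // Number each passage so the model can tell them apart.
            sb.AppendLine($"[{i + 1}] {passages[i]}");
        }
        sb.AppendLine();
        sb.Append("Question: ").Append(question);
        return sb.ToString();
    }
}
```

With function calling, the application no longer builds this prompt up front; the model asks for the passages when it decides it needs them.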

In addition, ChatGPT can call these functions to perform external actions such as updating a database, sending an email, or triggering a service to turn off a light bulb.

ChatGPT can make multiple recursive function calls to obtain information while remaining in control of the overall orchestration. This can eliminate the need for LangChain or other AI agent frameworks.

Functions are covered in more depth in the article Implementing Recursive ChatGPT Function Calling in Blazor.

The Sample Application

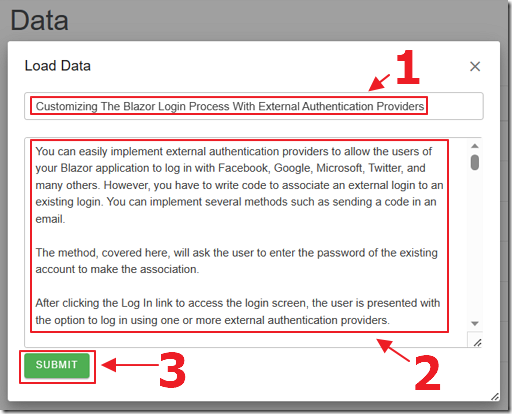

When you set up and run the sample application (available on the Downloads page of this site), first navigate to the Data page and click the LOAD DATA button.

Enter a title for the Article, paste in its contents, and click the SUBMIT button.

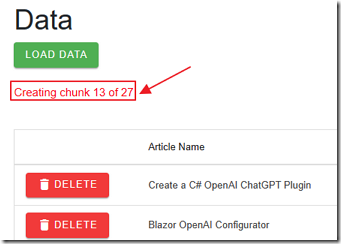

The contents of the Article will be split into 200-word chunks, and each chunk will be passed to OpenAI to create an embedding.

Each embedding is an array of floating-point values, and it will be stored in the SQL Server database.
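The chunking step can be sketched as follows. This assumes words are separated by whitespace; the sample application's exact splitting rules may differ, and the names `ArticleChunker` and `SplitIntoChunks` are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class ArticleChunker
{
    // Splits text into chunks of at most `wordsPerChunk` words.
    // Assumption: words are separated by whitespace.
    public static List<string> SplitIntoChunks(string text, int wordsPerChunk = 200)
    {
        if (wordsPerChunk <= 0)
            throw new ArgumentOutOfRangeException(nameof(wordsPerChunk));

        // An empty separator array means "split on any whitespace".
        string[] words = text.Split(Array.Empty<char>(), StringSplitOptions.RemoveEmptyEntries);

        var chunks = new List<string>();
        for (int i = 0; i < words.Length; i += wordsPerChunk)
        {
            // The last chunk may contain fewer than wordsPerChunk words.
            int count = Math.Min(wordsPerChunk, words.Length - i);
            chunks.Add(string.Join(" ", words, i, count));
        }
        return chunks;
    }
}
```

A 450-word article would produce three chunks of 200, 200, and 50 words, and each chunk would then be sent to the embeddings endpoint.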

A popup will indicate when the process is complete.

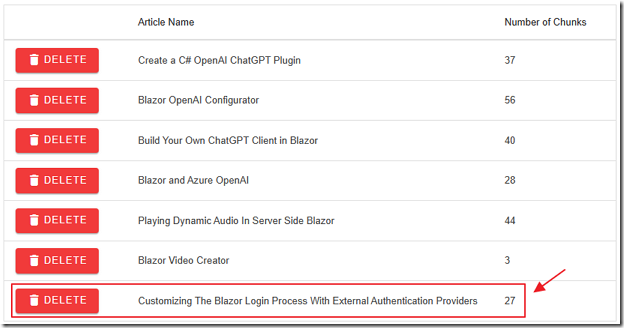

The Article will display in the Article list.

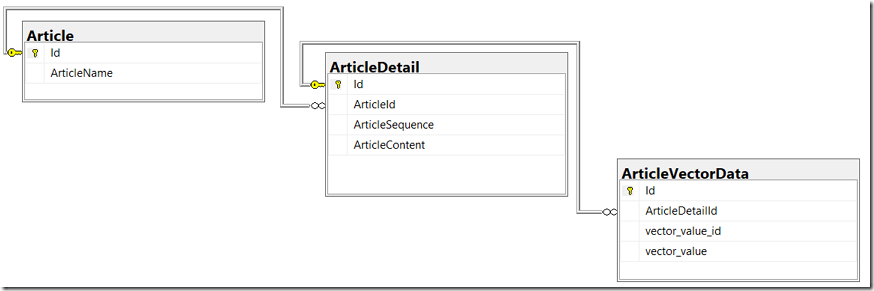

In the database, each record in the Article table has associated records (one per chunk) in the ArticleDetail table, and each ArticleDetail record has associated values stored in the ArticleVectorData table.

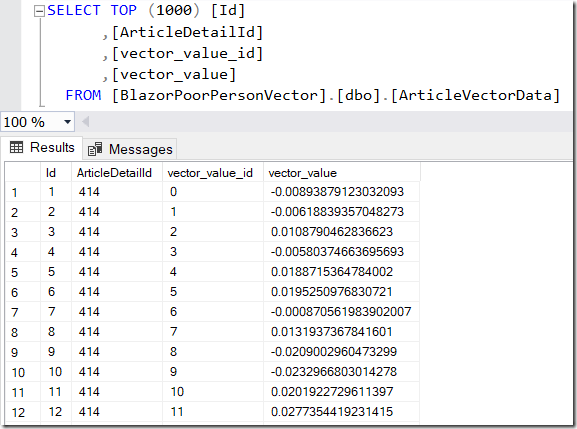

Each ArticleDetail has an embedding of 1536 values, and each value is stored in a separate row in the ArticleVectorData table.

Each of these rows has a vector_value_id that records the value's sequential position in the embedding array returned for that ArticleDetail.
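A minimal sketch of turning one embedding into rows for a table shaped like ArticleVectorData. The record `ArticleVectorRow` and the `EmbeddingRows.ToRows` helper are hypothetical; only the three column names come from the SQL function shown later. The ids are zero-based because the SQL function derives vector_value_id from the `OPENJSON` key, which is zero-based for JSON arrays.

```csharp
using System.Collections.Generic;

// Hypothetical row type mirroring the ArticleVectorData columns used by the
// SQL function (ArticleDetailId, vector_value_id, vector_value).
public record ArticleVectorRow(int ArticleDetailId, int VectorValueId, double VectorValue);

public static class EmbeddingRows
{
    // Produces one row per embedding value; the array index becomes
    // vector_value_id so values can later be matched by position.
    public static List<ArticleVectorRow> ToRows(int articleDetailId, IReadOnlyList<float> embedding)
    {
        var rows = new List<ArticleVectorRow>(embedding.Count);
        for (int i = 0; i < embedding.Count; i++)
        {
            rows.Add(new ArticleVectorRow(articleDetailId, i, embedding[i]));
        }
        return rows;
    }
}
```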

To compare these stored values (in the vector_value field) with the embedding we get for the search request (in the next step), we will use a cosine similarity calculation.

Values are matched on their vector_value_id, so each value in the search embedding is paired with the value at the same position in each stored embedding.
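Cosine similarity is the dot product of two vectors divided by the product of their lengths. The sketch below computes it in C#, pairing values by index the same way the SQL function pairs rows on vector_value_id. The `VectorMath` class is a hypothetical illustration. According to OpenAI's documentation, its embeddings are normalized to length 1, so the dot product alone would produce the same ranking.

```csharp
using System;
using System.Collections.Generic;

public static class VectorMath
{
    // Cosine similarity: dot(a, b) / (|a| * |b|).
    // Returns a value in [-1, 1]; higher means more similar.
    public static double CosineSimilarity(IReadOnlyList<double> a, IReadOnlyList<double> b)
    {
        if (a.Count != b.Count)
            throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Count; i++)
        {
            // Values at the same position are paired (like a join on vector_value_id).
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }

        // Undefined for zero vectors; treat as no similarity.
        if (normA == 0 || normB == 0)
            return 0;

        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```

Identical directions score 1.0 and perpendicular vectors score 0.0.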

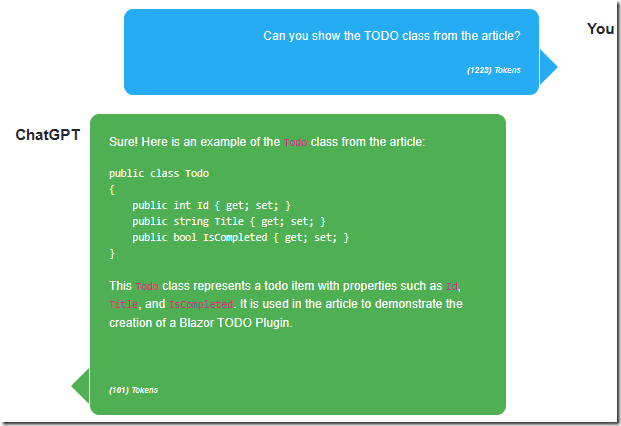

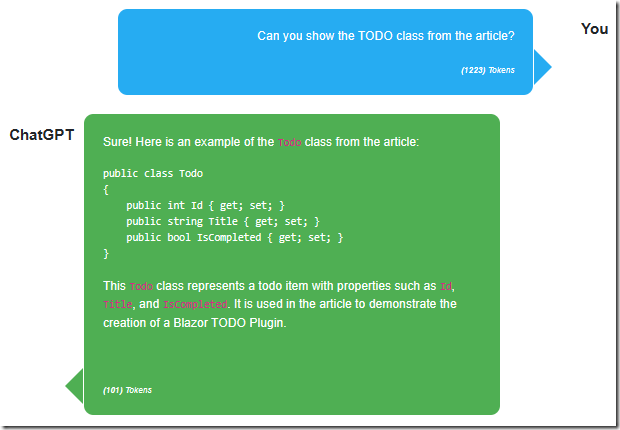

Navigate to the Chat page.

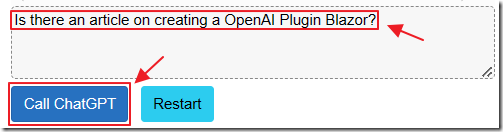

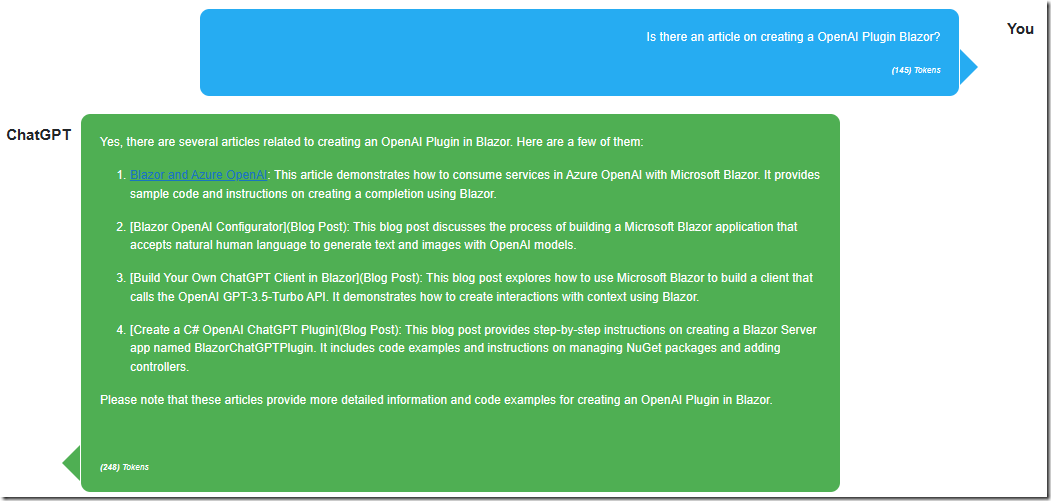

We can enter a search request and press the Call ChatGPT button.

The Chat window will display the response from the OpenAI chat completion API, which is generated after the results of the vector search have been passed back to it in the prompt.

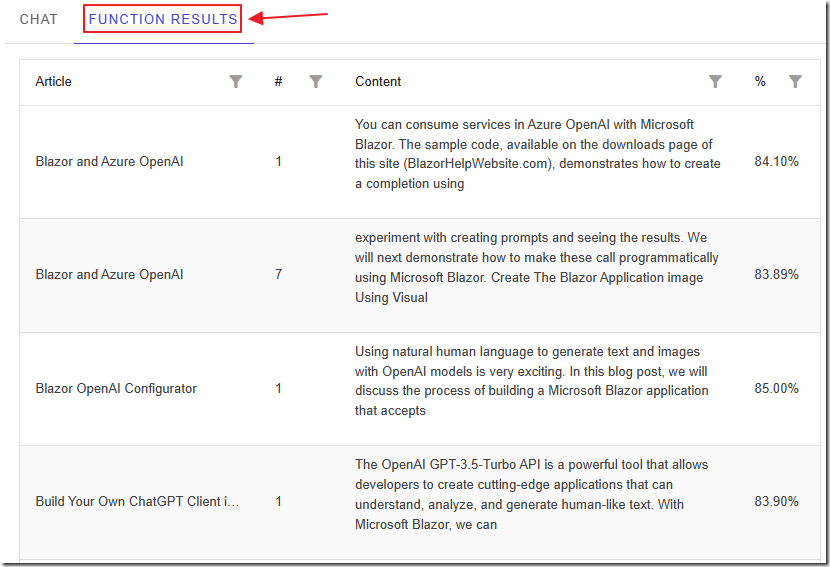

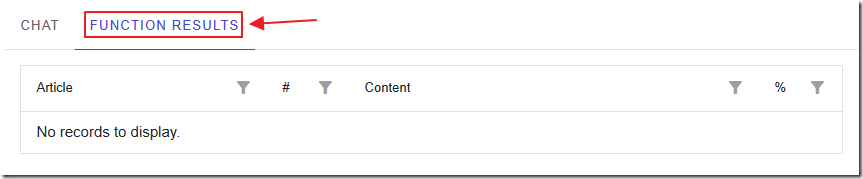

We can click on the FUNCTION RESULTS tab to see the top 10 results retrieved from the vector search.

Each result shows the Article name and the content of the chunk.

The match percentage is shown, and the chunks for each Article are listed in the order in which they appear in the Article.

This is important because the chunks must be fed, in order, into the prompt sent to the OpenAI chat completion API so that the information makes sense to the language model.

We can switch back to the Chat window and continue the conversation.

If we switch to the FUNCTION RESULTS tab again, we can see that for some queries ChatGPT does not call the function at all; instead, it answers using information it has already retrieved or that is part of its training data.

The key is that ChatGPT decides when it needs to perform a vector search to retrieve grounding.

The UI Code

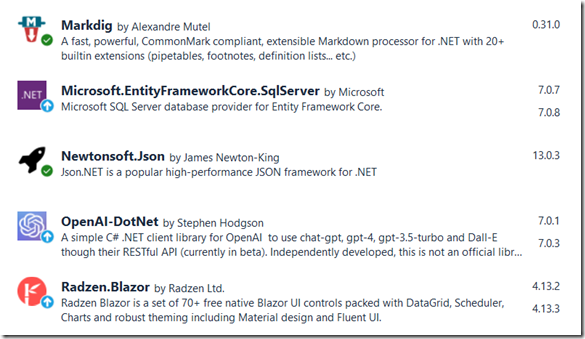

The application uses the following NuGet packages:

- Microsoft.EntityFrameworkCore.SqlServer

- Newtonsoft.Json

- Radzen.Blazor – Blazor UI

- OpenAI-DotNet – Connects to OpenAI

- Markdig – Displays nicely formatted responses from OpenAI in the chat window

The Chat.razor page uses the following markup to display the chat messages:

```razor
<p style="font-size:small"><b>Total Tokens:</b> @TotalTokens <b>Current Word Count:</b> @CurrentWordCount</p>
<div id="chatcontainer" style="height:550px; width:80%; overflow: scroll;">
    @foreach (var item in ChatMessages)
    {
        <div>
            @if (item.Role == Role.User)
            {
                <div style="float: right; margin-right: 20px; margin-top: 10px"><b>You</b></div>
                <div class="user">
                    <div class="msg">
                        @item.Prompt
                        <br /><br />
                        <div style="font-size:xx-small;"><i><b>(@item.Tokens)</b> Tokens</i></div>
                    </div>
                </div>
            }
            else if (item.Role == Role.Assistant)
            {
                <div style="float: left; margin-left: 20px; margin-top: 10px"><b>ChatGPT </b></div>
                <div class="assistant">
                    <div class="msg">
                        @if (item.Prompt != null)
                        {
                            @((MarkupString)item.Prompt.ToHtml())
                        }
                        <br /><br />
                        <div style="font-size:xx-small;"><i><b>(@item.Tokens)</b> Tokens</i></div>
                    </div>
                </div>
            }
        </div>
    }
</div>
```

To use Markdig to display nicely formatted results from OpenAI, two things must be implemented:

#1 – The system message sent to OpenAI must instruct the model to always reply in Markdown:

```csharp
// Add the first message to the chat prompts to indicate the System message
chatPrompts.Insert(0,
    new Message(
        Role.System,
        @"You are a helpful Assistant.
You will always reply with a Markdown formatted response.
You never include links to articles or blog posts, only the name."
    ));
```

#2 – The following StringExtensions class by David Pine, which uses Markdig to convert Markdown to HTML:

```csharp
// Copyright (c) David Pine. All rights reserved.
// Licensed under the MIT License.

using Markdig;

namespace Azure.OpenAI.Client.Extensions;

public static class StringExtensions
{
    private static readonly MarkdownPipeline s_pipeline = new MarkdownPipelineBuilder()
        .ConfigureNewLine("\n")
        .UseAdvancedExtensions()
        .UseEmojiAndSmiley()
        .UseSoftlineBreakAsHardlineBreak()
        .Build();

    public static string ToHtml(this string markdown) =>
        string.IsNullOrWhiteSpace(markdown) is false
            ? Markdown.ToHtml(markdown, s_pipeline)
            : "";
}
```

This allows the following line to work:

@((MarkupString)item.Prompt.ToHtml())

Calling OpenAI

When the end user enters a prompt and clicks the Call ChatGPT button, the following code runs:

```csharp
// Set Processing to true to indicate that the method is processing
Processing = true;

// Call StateHasChanged to refresh the UI
StateHasChanged();

// Clear any previous error messages
ErrorMessage = "";

// Clear similarities
similarities = new List<ArticleResultsDTO>();

// Create a new OpenAIClient object
// with the provided API key and organization
var api = new OpenAIClient(new OpenAIAuthentication(ApiKey, Organization));

// Create a collection of chatPrompts
List<Message> chatPrompts = new List<Message>();

// Add the existing Chat messages to chatPrompts
chatPrompts = AddExistingChatMessags(chatPrompts);

// Add the new message to chatPrompts
chatPrompts.Add(new Message(Role.User, prompt));
```

This calls the AddExistingChatMessags method, which retrieves the previously saved messages in the conversation, starting with the most recent, and drops the oldest ones so that the prompt stays within the context length the ChatGPT model allows (approximated here as 1,000 words):

```csharp
private List<Message> AddExistingChatMessags(List<Message> chatPrompts)
{
    // Create a new LinkedList of ChatMessages
    LinkedList<ChatMessage> ChatPromptsLinkedList = new LinkedList<ChatMessage>();

    // Loop through the ChatMessages and add them to the LinkedList
    foreach (var item in ChatMessages)
    {
        ChatPromptsLinkedList.AddLast(item);
    }

    // Set the current word count to 0
    CurrentWordCount = 0;

    // Reverse the chat messages to start from the most recent messages
    foreach (var item in ChatPromptsLinkedList.Reverse())
    {
        if (item.Prompt != null)
        {
            int promptWordCount = item.Prompt.Split(
                new char[] { ' ', '\t', '\n', '\r' },
                StringSplitOptions.RemoveEmptyEntries).Length;

            if (CurrentWordCount + promptWordCount >= 1000)
            {
                // This message would cause the total to exceed 1000 words,
                // so break out of the loop
                break;
            }

            // Add the message to the beginning of the chat prompts
            chatPrompts.Insert(0, new Message(item.Role, item.Prompt, item.FunctionName));
            CurrentWordCount += promptWordCount;
        }
    }

    // Return the trimmed list of chat prompts
    return chatPrompts;
}
```

If we don't do this, after a few back-and-forth chat messages we would get an error like this:

```json
{
  "error": {
    "message": "This model's maximum context length is 4097 tokens. However, your messages resulted in 4344 tokens (4252 in the messages, 92 in the functions). Please reduce the length of the messages or functions.",
    "type": "invalid_request_error",
    "param": "messages",
    "code": "context_length_exceeded"
  }
}
```
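The limit in this error is expressed in tokens, while the sample trims history by word count, and the function definitions also consume part of the budget (92 tokens in the error above). As an alternative, here is a minimal sketch that trims by an estimated token count, assuming OpenAI's rule of thumb of roughly four characters per token for English text. The names `HistoryTrimmer`, `EstimateTokens`, and `TrimToBudget` are hypothetical, and an exact count would require a tokenizer.

```csharp
using System;
using System.Collections.Generic;

public static class HistoryTrimmer
{
    // Rough heuristic: about 4 characters per token for English text (rounded up).
    public static int EstimateTokens(string text) => (text.Length + 3) / 4;

    // Keeps the most recent messages whose estimated total stays within
    // tokenBudget, preserving their original oldest-first order.
    public static List<string> TrimToBudget(IReadOnlyList<string> messages, int tokenBudget)
    {
        var kept = new List<string>();
        int used = 0;
        for (int i = messages.Count - 1; i >= 0; i--)
        {
            int cost = EstimateTokens(messages[i]);
            if (used + cost > tokenBudget)
                break; // Adding this older message would exceed the budget.
            kept.Insert(0, messages[i]);
            used += cost;
        }
        return kept;
    }
}
```

The budget passed in should leave room for the system message, the function definitions, and the model's reply.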

Defining The Function

The function, available to ChatGPT, is defined using the following code:

```csharp
var DefinedFunctions = new List<Function>
{
    new Function(
        "VectorDatabaseSearch",
        @"Retrieves content from a vector database on articles related to Blazor.
Use this function to answer any user question that mentions the word Blazor.".Trim(),
        new JsonObject
        {
            ["type"] = "object",
            ["properties"] = new JsonObject
            {
                ["prompt"] = new JsonObject
                {
                    ["type"] = "string",
                    ["description"] = @"A question related to Blazor,
e.g. how can I use Blazor to play Audio?
Use only the information returned in your response.".Trim()
                }
            },
            ["required"] = new JsonArray { "prompt" }
        })
};
```
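In OpenAI's function-calling API, each entry in the request's `functions` array has a `name`, a `description`, and a JSON Schema `parameters` object. The sketch below uses `System.Text.Json.Nodes` to build an object of that shape for VectorDatabaseSearch (descriptions shortened). It is an illustration of the wire format, not the exact output of the OpenAI-DotNet `Function` class; the `FunctionSchemaSketch` name is hypothetical.

```csharp
using System.Text.Json.Nodes;

public static class FunctionSchemaSketch
{
    // Builds a name/description/parameters object like the ones in the
    // Chat Completions "functions" array.
    public static JsonObject Build()
    {
        // JSON Schema describing the single required "prompt" argument.
        var parameters = new JsonObject
        {
            ["type"] = "object",
            ["properties"] = new JsonObject
            {
                ["prompt"] = new JsonObject
                {
                    ["type"] = "string",
                    ["description"] = "A question related to Blazor, e.g. how can I use Blazor to play Audio?"
                }
            },
            ["required"] = new JsonArray { "prompt" }
        };

        return new JsonObject
        {
            ["name"] = "VectorDatabaseSearch",
            ["description"] = "Retrieves content from a vector database on articles related to Blazor.",
            ["parameters"] = parameters
        };
    }
}
```

Calling `FunctionSchemaSketch.Build().ToJsonString()` shows the compact JSON, which is useful for checking what the model will see.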

The following code calls ChatGPT. Notice that it passes DefinedFunctions to the functions parameter. The functionCall parameter is set to "auto", which means ChatGPT decides whether to call a function in response to this prompt. It can also be set to force ChatGPT to call a specific defined function, or to "none" so that ChatGPT does not call any functions at all:

```csharp
// Call ChatGPT
// Create a new ChatRequest object with the chat prompts and pass
// it to the API's GetCompletionAsync method
var chatRequest = new ChatRequest(
    chatPrompts,
    functions: DefinedFunctions,
    functionCall: "auto",
    model: "gpt-3.5-turbo-0613", // Must use this model or higher
    temperature: 0.0,
    topP: 1,
    frequencyPenalty: 0,
    presencePenalty: 0);

var result = await api.ChatEndpoint.GetCompletionAsync(chatRequest);
```

The following code examines the result of the call to ChatGPT and determines if ChatGPT wants to call the defined function.

If ChatGPT wants to call a function, we call the ExecuteFunction method.

We do this in a while loop because ChatGPT may want to call the function multiple times:

```csharp
// See if, as a response, ChatGPT wants to call a function
if (result.FirstChoice.FinishReason == "function_call")
{
    // ChatGPT wants to call a function.
    // To allow ChatGPT to call multiple functions,
    // we need to start a while loop
    bool FunctionCallingComplete = false;

    while (!FunctionCallingComplete)
    {
        // Call the function
        chatPrompts = await ExecuteFunction(result, chatPrompts);

        // Get a response from ChatGPT (now that it has the results of the function)
        chatRequest = new ChatRequest(
            chatPrompts,
            functions: DefinedFunctions,
            functionCall: "auto",
            model: "gpt-3.5-turbo-0613", // Must use this model or higher
            temperature: 0.0,
            topP: 1,
            frequencyPenalty: 0,
            presencePenalty: 0);

        result = await api.ChatEndpoint.GetCompletionAsync(chatRequest);

        if (result.FirstChoice.FinishReason == "function_call")
        {
            // Keep looping
            FunctionCallingComplete = false;
        }
        else
        {
            // Break out of the loop
            FunctionCallingComplete = true;
        }
    }
}
else
{
    // ChatGPT did not want to call a function
}
```
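The while loop above has no upper bound, so a model that kept returning "function_call" would keep the application calling it. A minimal sketch of the same control flow with a cap follows. It assumes two hypothetical delegates: `getCompletion` returns the finish reason of the latest completion, and `executeFunction` runs the requested function and appends its result to the conversation. The `FunctionCallLoop` name and the default limit of 5 are illustrative choices.

```csharp
using System;
using System.Threading.Tasks;

public static class FunctionCallLoop
{
    // Repeats "execute function, ask again" while the model requests a
    // function call, up to maxFunctionCalls times. Returns the number of
    // function calls performed.
    public static async Task<int> RunAsync(
        Func<Task<string>> getCompletion,
        Func<Task> executeFunction,
        int maxFunctionCalls = 5)
    {
        int calls = 0;
        string finishReason = await getCompletion();

        while (finishReason == "function_call")
        {
            // Guard against a model that never stops requesting functions.
            if (calls >= maxFunctionCalls)
                throw new InvalidOperationException("Too many consecutive function calls.");

            await executeFunction();
            calls++;
            finishReason = await getCompletion();
        }
        return calls;
    }
}
```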

Performing the Vector Database Search

The following code shows the ExecuteFunction method that is called inside the while loop shown earlier:

```csharp
private async Task<List<Message>> ExecuteFunction(
    ChatResponse ChatResponseResult, List<Message> ParamChatPrompts)
{
    // Get the arguments
    var functionArgs =
        ChatResponseResult.FirstChoice.Message.Function.Arguments.ToString();

    // Get the function name
    var functionName = ChatResponseResult.FirstChoice.Message.Function.Name;

    // Variable to hold the function result
    string functionResult = "";

    // Call the function
    await PerformVectorDatabaseSearch(functionArgs);

    // Serialize the search results
    functionResult = JsonSerializer.Serialize<List<ArticleResultsDTO>>(similarities);

    // Save the function result to the chat messages
    ChatMessages.Add(new ChatMessage
    {
        Prompt = functionResult,
        Role = Role.Function,
        FunctionName = functionName,
        Tokens = ChatResponseResult.Usage.PromptTokens ?? 0
    });

    // Add the function result to the prompts so that ChatGPT
    // can use it when it is called again in the while loop
    ParamChatPrompts.Add(new Message(Role.Function, functionResult, functionName));

    return ParamChatPrompts;
}
```
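In ExecuteFunction, functionArgs is the raw arguments string, a JSON object such as `{"prompt":"How do I play audio in Blazor?"}`, and it is passed unchanged to PerformVectorDatabaseSearch, so the embedding is computed for the JSON text rather than for the question alone. A minimal sketch that extracts just the `prompt` value with `System.Text.Json` follows; the `FunctionArguments.GetPrompt` helper is hypothetical. It falls back to the raw string because OpenAI notes the model can produce invalid JSON.

```csharp
using System.Text.Json;

public static class FunctionArguments
{
    // Returns the "prompt" property from the function-call arguments JSON,
    // or the raw string if the JSON is malformed or has no string "prompt".
    public static string GetPrompt(string argumentsJson)
    {
        try
        {
            using JsonDocument doc = JsonDocument.Parse(argumentsJson);
            JsonElement root = doc.RootElement;

            // TryGetProperty is only valid on JSON objects, so check the kind first.
            if (root.ValueKind == JsonValueKind.Object &&
                root.TryGetProperty("prompt", out JsonElement prompt) &&
                prompt.ValueKind == JsonValueKind.String)
            {
                return prompt.GetString() ?? argumentsJson;
            }
        }
        catch (JsonException)
        {
            // Not valid JSON; fall through and use the raw text.
        }
        return argumentsJson;
    }
}
```

With this helper, the call would become `await PerformVectorDatabaseSearch(FunctionArguments.GetPrompt(functionArgs));`.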

ExecuteFunction calls the following method, which actually performs the vector database search:

```csharp
async Task PerformVectorDatabaseSearch(string InputPrompt)
{
    // Create a new instance of OpenAIClient using the ApiKey and Organization
    var api = new OpenAIClient(new OpenAIAuthentication(ApiKey, Organization));

    // Get the model details
    var model = await api.ModelsEndpoint.GetModelDetailsAsync("text-embedding-ada-002");

    // Get embeddings for the search text
    var SearchEmbedding = await api.EmbeddingsEndpoint.CreateEmbeddingAsync(InputPrompt, model);

    // Get embeddings as an array of floats
    var EmbeddingVectors = SearchEmbedding.Data[0].Embedding.Select(d => (float)d).ToArray();

    // Loop through the embeddings
    List<VectorData> AllVectors = new List<VectorData>();
    for (int i = 0; i < EmbeddingVectors.Length; i++)
    {
        var embeddingVector = new VectorData
        {
            VectorValue = EmbeddingVectors[i]
        };
        AllVectors.Add(embeddingVector);
    }

    // Convert the floats to a single JSON array string to pass to the SQL function.
    // Use the invariant culture so the decimal separator is always "."
    // (cultures that use "," would otherwise produce a malformed array).
    var VectorsForSearchText = "[" + string.Join(",", AllVectors.Select(x =>
        Convert.ToString(x.VectorValue, System.Globalization.CultureInfo.InvariantCulture))) + "]";

    // Call the SQL function to get the similar content articles
    var SimilarContentArticles = @Service.GetSimilarContentArticles(VectorsForSearchText);

    // Loop through SimilarContentArticles
    foreach (var Article in SimilarContentArticles)
    {
        // Add to similarities collection
        similarities.Add(new ArticleResultsDTO()
        {
            Article = Article.ArticleName,
            Sequence = Article.ArticleSequence,
            Contents = Article.ArticleContent,
            Match = Article.cosine_distance ?? 0
        });
    }

    // Sort the results by similarity in descending order and take the top 10
    similarities = similarities
        .OrderByDescending(a => a.Match)
        .Take(10)
        .ToList();

    // Order by Article, then by Sequence within each Article
    // (List.Sort is not stable, so two successive Sort calls
    // would not reliably keep the Sequence order)
    similarities = similarities
        .OrderBy(a => a.Article)
        .ThenBy(a => a.Sequence)
        .ToList();
}
```

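The GetSimilarContentArticles call in the preceding code runs the following SQL table-valued function. Note that the column named cosine_distance actually holds the cosine similarity: higher values indicate a closer match, which is why the results are ordered in descending order.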

```sql
/*
From GitHub project: Azure-Samples/azure-sql-db-openai
*/
CREATE function [dbo].[SimilarContentArticles](@vector nvarchar(max))
returns table
as
return with cteVector as
(
    select
        cast([key] as int) as [vector_value_id],
        cast([value] as float) as [vector_value]
    from
        openjson(@vector)
),
cteSimilar as
(
    select top (10)
        v2.ArticleDetailId,
        sum(v1.[vector_value] * v2.[vector_value]) /
        (
            sqrt(sum(v1.[vector_value] * v1.[vector_value]))
            *
            sqrt(sum(v2.[vector_value] * v2.[vector_value]))
        ) as cosine_distance
    from
        cteVector v1
    inner join
        dbo.ArticleVectorData v2 on v1.vector_value_id = v2.vector_value_id
    group by
        v2.ArticleDetailId
    order by
        cosine_distance desc
)
select
    (select [ArticleName] from [Article] where id = a.ArticleId) as ArticleName,
    a.ArticleContent,
    a.ArticleSequence,
    r.cosine_distance
from
    cteSimilar r
inner join
    dbo.[ArticleDetail] a on r.ArticleDetailId = a.id
GO
```
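The same ranking logic can be mirrored in memory, which may be handy for unit-testing results without a database. This is a hypothetical sketch: rows are tuples shaped like dbo.ArticleVectorData, the query embedding is indexed by position (as `OPENJSON` keys are), and, like the SQL, both norms are summed only over the joined rows. One difference: this version returns 0 for a zero-length vector, where SQL Server would typically raise a divide-by-zero error.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class InMemorySimilarity
{
    // Hypothetical in-memory mirror of SimilarContentArticles: groups rows by
    // ArticleDetailId, computes cosine similarity against the query embedding,
    // and returns the top N matches in descending order.
    public static List<(int ArticleDetailId, double Score)> TopMatches(
        IEnumerable<(int ArticleDetailId, int VectorValueId, double VectorValue)> rows,
        IReadOnlyList<double> query,
        int top = 10)
    {
        return rows
            // Equivalent of the inner join on vector_value_id.
            .Where(r => r.VectorValueId >= 0 && r.VectorValueId < query.Count)
            .GroupBy(r => r.ArticleDetailId)
            .Select(g =>
            {
                double dot = 0, qNorm = 0, vNorm = 0;
                foreach (var r in g)
                {
                    double q = query[r.VectorValueId];
                    dot += q * r.VectorValue;
                    qNorm += q * q;
                    vNorm += r.VectorValue * r.VectorValue;
                }
                double denom = Math.Sqrt(qNorm) * Math.Sqrt(vNorm);
                return (ArticleDetailId: g.Key, Score: denom == 0 ? 0 : dot / denom);
            })
            // Equivalent of TOP (10) ... ORDER BY cosine_distance DESC.
            .OrderByDescending(x => x.Score)
            .Take(top)
            .ToList();
    }
}
```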

A key to this solution is that the SimilarContentArticles function runs fast because we created the following columnstore index on the ArticleVectorData table when we created the database.

(See Vector Similarity Search with Azure SQL database and OpenAI for more information.)

```sql
CREATE NONCLUSTERED COLUMNSTORE INDEX [ArticleDetailsIdClusteredColumnStoreIndex]
ON [dbo].[ArticleVectorData] ([ArticleDetailId])
WITH (DROP_EXISTING = OFF, COMPRESSION_DELAY = 0) ON [PRIMARY]
```

For more details, see Use a Poor Developers Vector Database to Implement The RAG Pattern.

Links

Function calling and other API updates

openai-cookbook/How_to_call_functions_for_knowledge_retrieval.ipynb

openai-cookbook/How_to_call_functions_for_knowledge_retrieval

RageAgainstThePixel/OpenAI-DotNet

Implementing Recursive ChatGPT Function Calling in Blazor

Use a Poor Developers Vector Database to Implement The RAG Pattern

Calling OpenAI GPT-3 From Microsoft Blazor

Build Your Own ChatGPT Client in Blazor

Download

The project is available on the Downloads page on this site.

You must have Visual Studio 2022 (or higher) installed to run the code.