6/11/2023 Admin

Use a Poor Developers Vector Database to Implement The RAG Pattern

OpenAI completions is a powerful natural language generation service that can create engaging and realistic text. However, sometimes you may want to customize your text with your own business data, such as your company name, products, services, etc.

In this article, we will show you how to use a vector database to store and retrieve your business data and use it to enhance your OpenAI completions text. You will learn how to create a vector database, how to query it using natural language, and how to integrate it with OpenAI completions.

Most importantly we will show how to use a normal SQL server database to save hundreds, even thousands of dollars than if you used a normal vector database.

One of the advantages of using a vector database is that you can feed the granular search results to an OpenAI prompt to produce completions, which can provide answers based on the facts from your data.

This is similar to what Bing Chat and Google Bard are doing, but with your own private data. Moreover, if you decide to use the Microsoft OpenAI service, your data stays private and secure.

YouTube Video

Watch the YouTube video on this blog post at: https://www.youtube.com/watch?v=gscoZt_miFI

The Sample Application

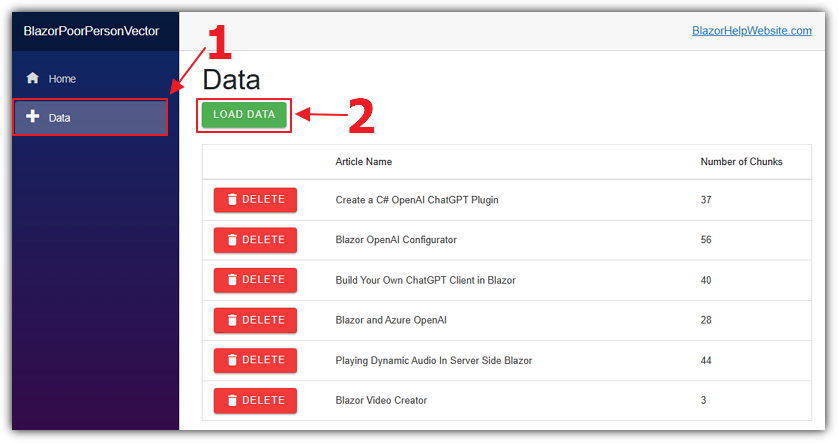

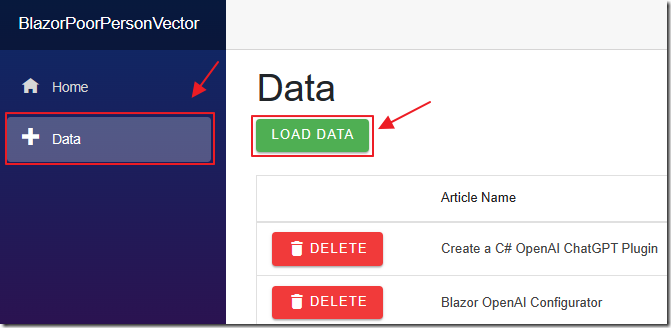

When you set-up and run the sample application (located on the Downloads page of this site), the first step is to navigate to the Data page and click the LOAD DATA button.

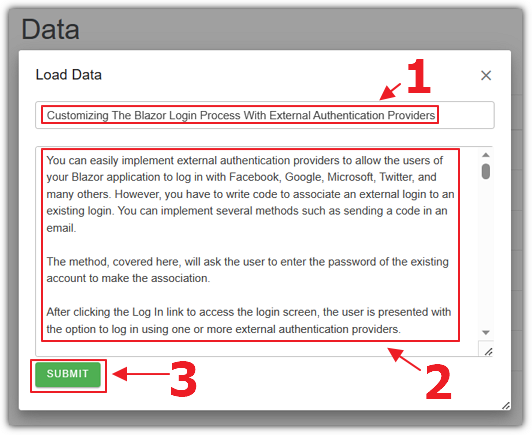

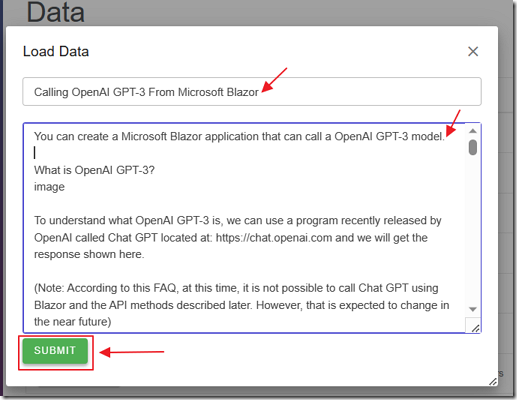

Enter a title for the Article and paste in the contents for the Article and click the SUBMIT button.

The contents of the Article will be split up into chunks of 200 word segments and will be passed to OpenAI to create embeddings.

The embeddings will consist of an array of vectors that will be stored in the SQL server database.

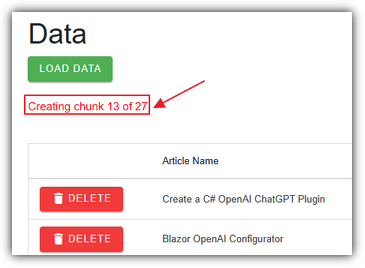

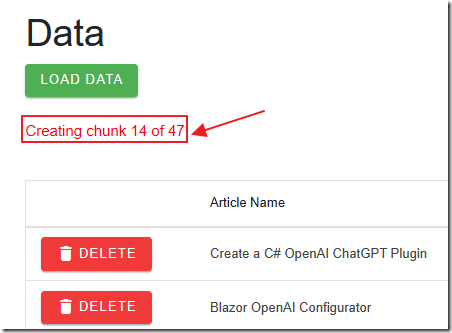

A popup will indicate when the process is complete.

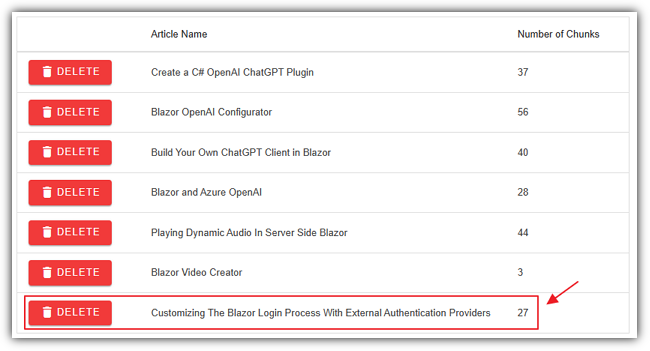

The Article will display in the Article list.

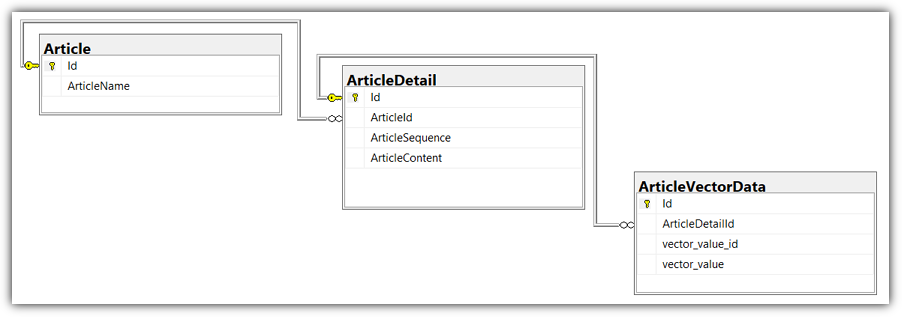

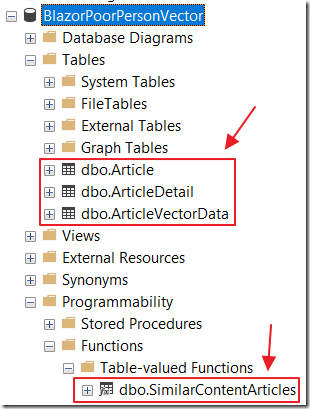

The structure of the database is that the Article table has associated records stored in the ArticleDetail table and they have associated vectors stored in the ArticleVectorData table.

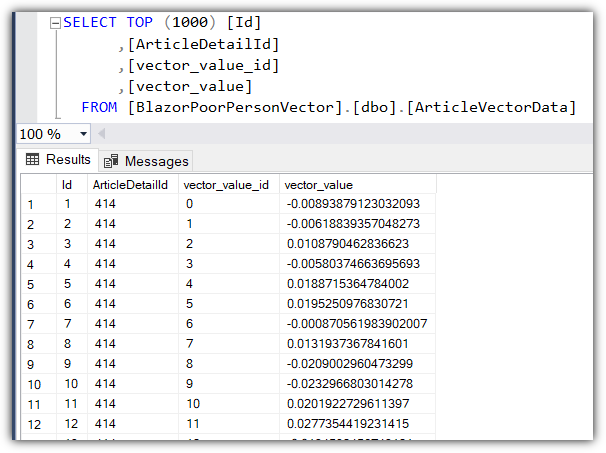

Each ArticleDetail contains an array of 1536 vectors, each stored in a separate row in the ArticleVectorData table.

Each vector for a ArticleDetail has a vector_value_id that is the sequential position of the vector in the array of vectors returned by the embedding for an ArticleDetail.

To compare the vectors (stored in the vector_value field) that we get from the search request (in the next step), we will use a cosign similarity calculation.

We will match each vector using its vector_value_id for each vector.

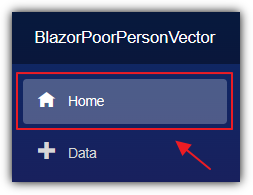

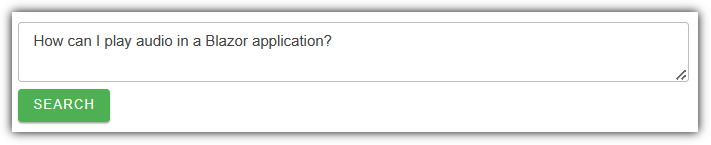

Navigate to the Home page.

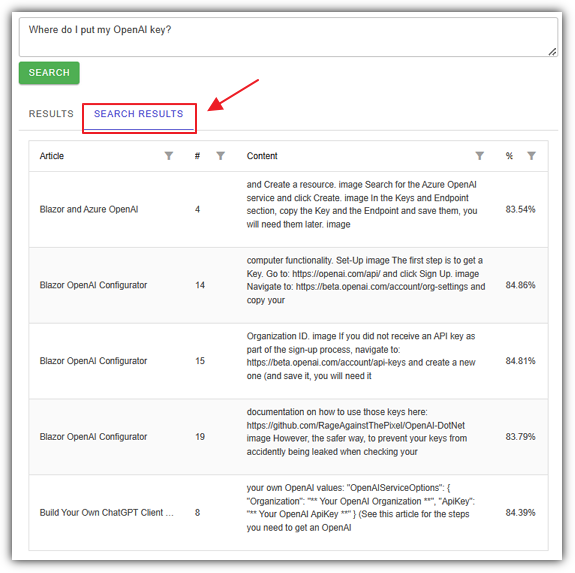

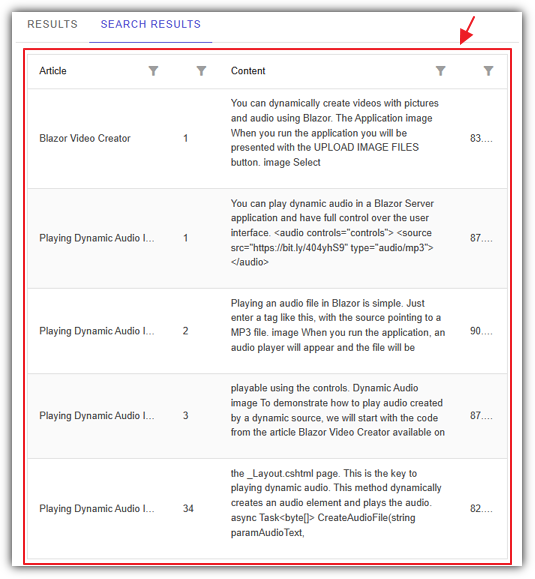

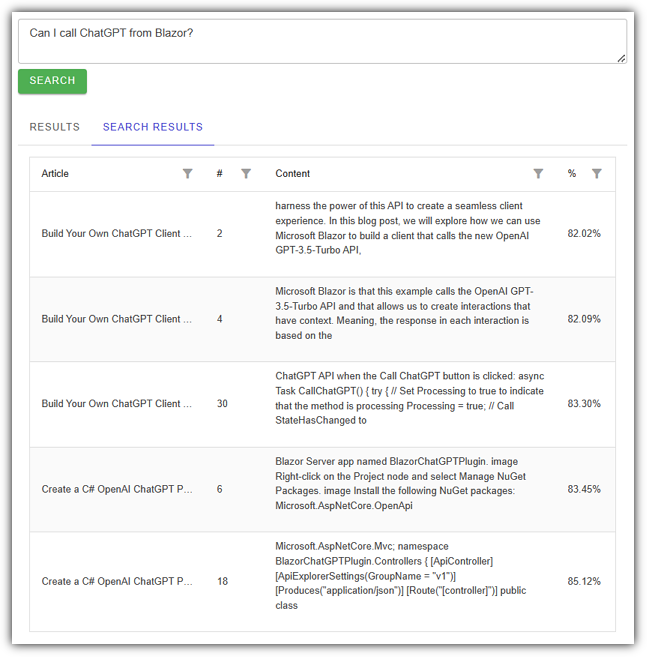

When we enter a search request and press the SEARCH button, we can click on the SEARCH RESULTS tab to see the top 10 results retrieved from the vector search.

The results will indicate the Article and the content from the chunk.

The match percentage will be shown, and the chunks in each Article will be listed in the order that they appear in the Article.

This is important because we need to feed the chunks to the prompt that will be sent to the OpenAI completion API in order so that the information makes sense to the OpenAI language model.

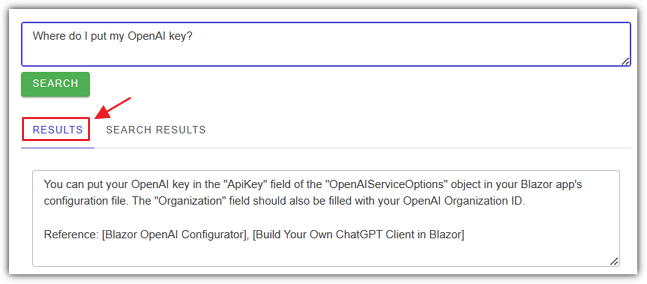

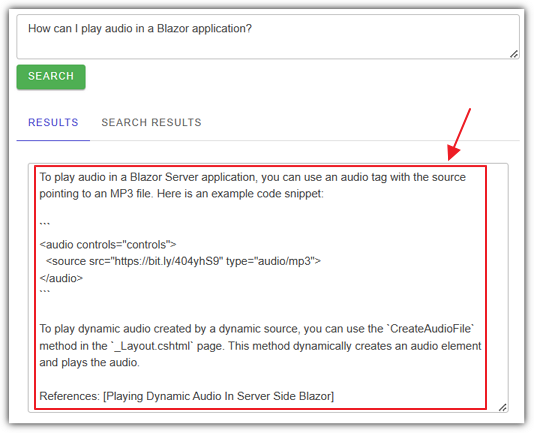

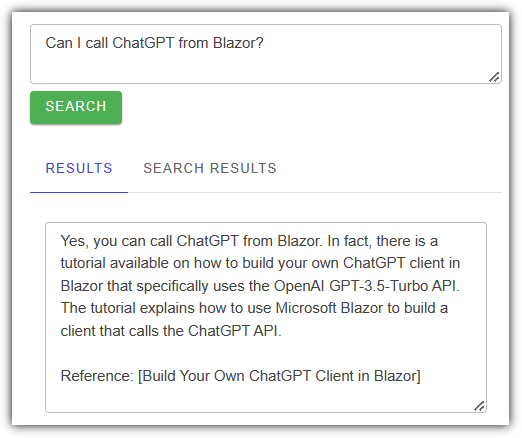

Clicking on the RESULTS tab will display the response from OpenAI completions after the results of the vector search have been passed to the completion prompt.

What is a Large Language Model (LLM)?

A Large Language Model (LLM) is a type of artificial intelligence system that can generate natural language texts based on a given input. It is trained on a large amount of text data, such as books, articles, websites, and social media posts, and learns to predict the next word or phrase based on the previous ones.

A LLM can complete various kinds of prompts, such as questions, sentences, paragraphs, or stories. For example, if the prompt is “The sky is”, a LLM might complete it with “blue”, “cloudy”, or “full of stars”. If the prompt is “Once upon a time”, a LLM might complete it with “there was a princess who lived in a castle”, “there was a boy who had a magic bean”, or “there was a dragon who guarded a treasure”.

What is the RAG Pattern?

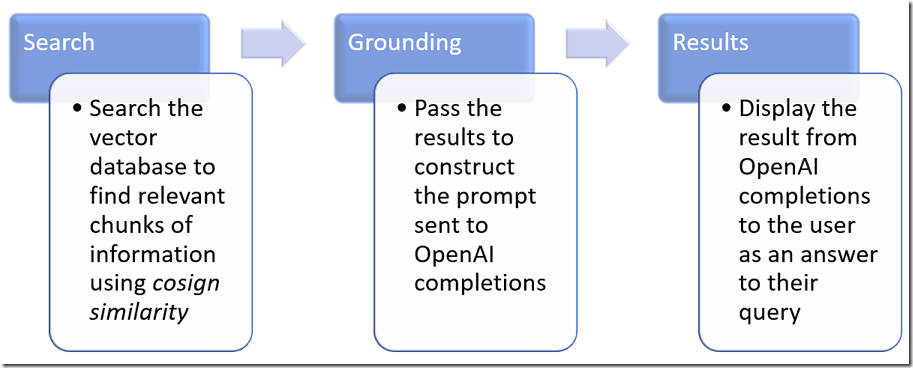

Retrieval Augmented Generation (RAG) pattern is a technique for building natural language generation systems that can retrieve and use relevant information from external sources.

The concept is to first retrieve a set of passages that are related to the search query, then use them to supply grounding to the prompt, to finally generate a natural language response that incorporates the retrieved information.

To ground a model means to provide it with some factual or contextual information that can help it produce more accurate and coherent outputs.

For example, if the prompt is “Who is the president of France?”, the model needs to know some facts about the current political situation in France.

If the prompt is “How are you feeling today?”, the model needs to know some context about the previous conversation or the user’s mood.

One way to ground a model is to use the RAG pattern and retrieve relevant information from external sources, and supply that information to the prompt.

Why You Need A Vector Database

The RAG pattern requires granular chunks of information and we want them to be as accurate as possible. However, we have to limited how many of these chunks of information we use because OpenAI completions are constrained by the size of the prompt (expressed in tokens with a token being about 1.5 words). The input plus the output of a completion cannot exceed the token limitation of the OpenAI model being used.

A vector search, as opposed to a usual keyword search, is able to return results, that are semantically related to the search query, to properly ground the model and allow it to produce a relevant and coherent response to the user.

This requires a vector database and vector databases can be complex to set up and expensive to operate.

Using SQL server avoids that. This article explains how to use SQL server for vector searches: Vector Similarity Search with Azure SQL database and OpenAI.

Get Your Embeddings Here

To obtain the vectors needed for the vector search, we process the text by calling an OpenAI model that produces embeddings that consist of an array of floats.

An embedding is a mathematical representation of a word or phrase that captures its meaning in a high-dimensional space.

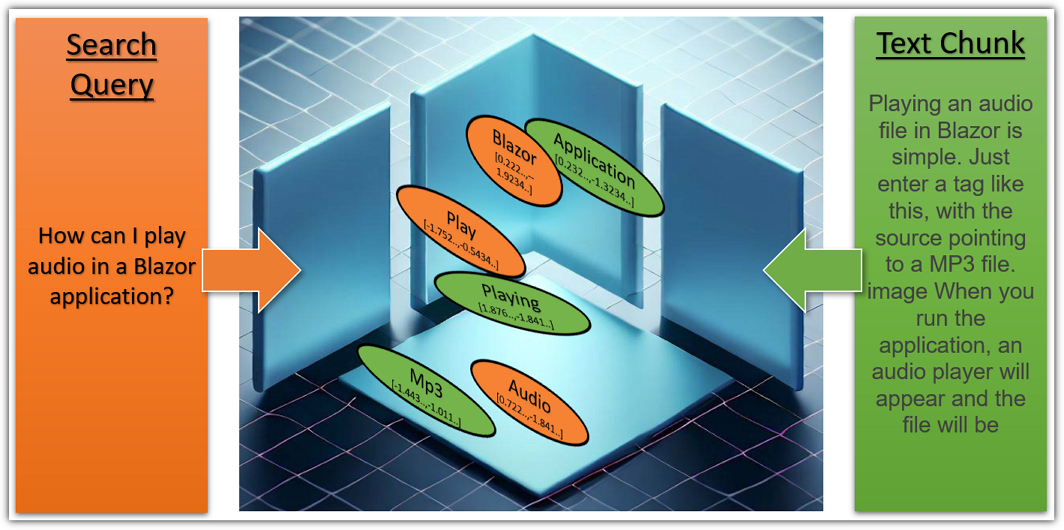

For example, a search query is entered regarding playing audio in a Blazor application.

The search query is turned into embeddings and cosign similarity is used to calculate its vectors against the vectors in the text chunks stored in the database.

The top 10 results are returned and the text chunks are used as grounding for the model, and passed in the prompt that is sent to the OpenAI completion API.

The user receives a well grounded coherent response.

Download and Set-Up The Sample Application

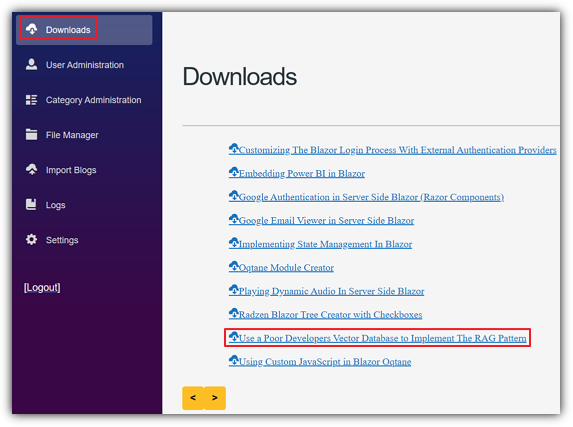

To run the application, first download it from the Downloads page: https://blazorhelpwebsite.com/filedownloads

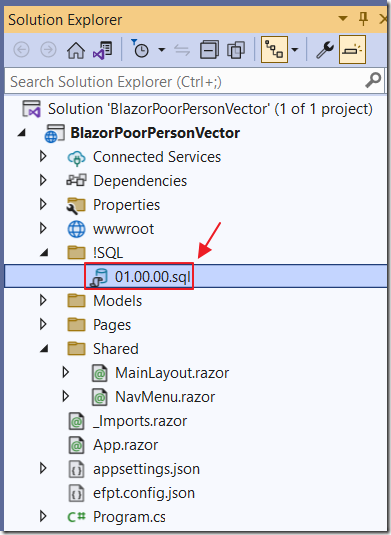

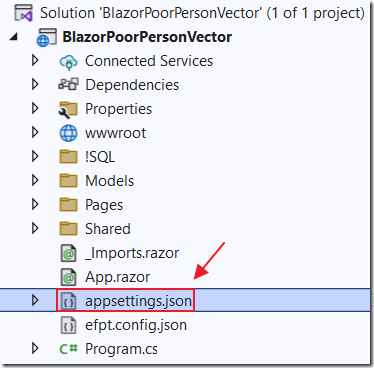

Unzip it and open it in Visual Studio 2022 or higher.

Open the 01.00.00.sql script in the !SQL directory.

Create a database in Microsoft SQL Server called BlazorPoorPersonVector and run the script.

This will create the required database objects.

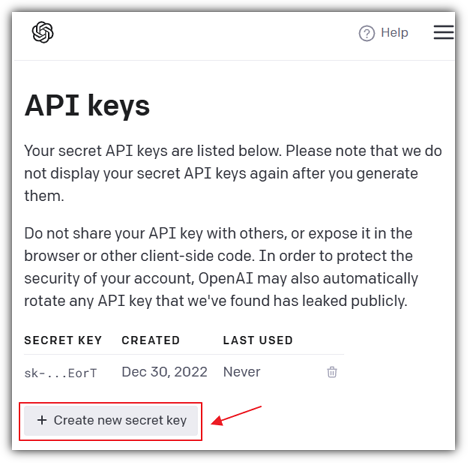

If you don’t already have a OpenAI API key, go to: https://openai.com/api/ and click Sign Up.

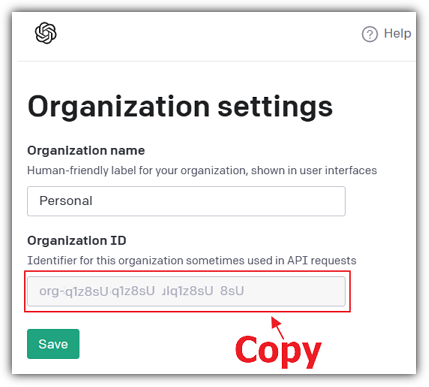

Navigate to: https://beta.openai.com/account/org-settings and copy your Organization ID.

If you did not receive an API key as part of the sign-up process, navigate to: https://beta.openai.com/account/api-keys

and create a new one (and save it, you will need it later).

Open the appsettings.json file and enter your OpenAI API key and Organization ID.

Explore The Code

The first step is to navigate to the Data page and click the LOAD DATA button to open the dialog.

This code opens the dialog:

// Show the Load Data dialogvar result = await dialogService.OpenAsync<LoadDataDialog>($"Load Data",null,new DialogOptions(){Width = "700px",Height = "512px",Resizable = true,Draggable = true});

Enter a title and article contents and click the SUBMIT button.

This code creates chunks of 200 words each, calls OpenAI to get the embeddings for each chunk, and inserts the vectors from the embeddings into the database:

// Get the article from the dialogvar article = (ArticleDTO)result;// Check that article contains dataif ((article.Title.Trim() != "") && (article.Contents.Trim() != "")){// Split the article into chunksvar ArticleChuks = SplitTextIntoChunks(article.Contents.Trim(), 200);// Create Article objectvar objNewArticle = new Article();objNewArticle.ArticleName = article.Title;// Add article to databasevar InsertArticle = await @Service.AddArticleAsync(objNewArticle);// Create an instance of the OpenAI clientvar api = new OpenAIClient(new OpenAIAuthentication(ApiKey, Organization));// Get the model detailsvar model =await api.ModelsEndpoint.GetModelDetailsAsync("text-embedding-ada-002");// Add article details to databaseforeach (var chunk in ArticleChuks){// Update the statusStatus =$"Creating chunk {ArticleChuks.IndexOf(chunk) + 1} of {ArticleChuks.Count}";StateHasChanged();// Get embeddings for the chunkvar embeddings =await api.EmbeddingsEndpoint.CreateEmbeddingAsync(chunk, model);// Create ArticleDetail objectvar objNewArticleDetail = new ArticleDetail();objNewArticleDetail.Article = InsertArticle;objNewArticleDetail.ArticleContent = chunk;objNewArticleDetail.ArticleSequence = ArticleChuks.IndexOf(chunk) + 1;// Add article detail to databasevar InsertArticleDetail =await @Service.AddArticleDetailAsync(objNewArticleDetail, embeddings);}// Refresh the gridStatus = "";StateHasChanged();// Get the articles from the databasearticles = await @Service.GetArticlesAsync();// Show a success dialogawait dialogService.Alert("Article added to database","Success",new AlertOptions() { OkButtonText = "Ok" });

The screen will show the progress.

These are the methods, called by the previous code, that create the chunks and clean up the text:

// The method that splits the text into chunks of a given sizepublic static List<string> SplitTextIntoChunks(string text, int chunkSize){// Initialize an empty list to store the chunksList<string> chunks = new List<string>();// Fix up textvar NormalizedText = NormalizeText(text);// Split the text by whitespace charactersstring[] words = NormalizedText.Split();// Loop through the words and add them to the current chunkstring currentChunk = "";foreach (string word in words){// If adding the word would exceed the chunk size,// add the current chunk to the list and start a new oneif (currentChunk.Length + word.Length + 1 > chunkSize){chunks.Add(currentChunk);currentChunk = "";}// Add the word to the current chunk with a spacecurrentChunk += word + " ";}// Add the last chunk to the list if it is not emptyif (currentChunk != ""){chunks.Add(currentChunk);}// Return the list of chunksreturn chunks;}public static string NormalizeText(string s, string sepToken = " \n "){// Replace multiple whitespace with single space and trims = Regex.Replace(s, @"\s+", " ").Trim();// Remove all instances of ". ,"s = Regex.Replace(s, @". ,", "");// Remove all instances of multiple dotss = s.Replace("..", ".");s = s.Replace(". .", ".");// Remove all instances of multiple commass = s.Replace("\n", "");s = s.Trim();return s;}

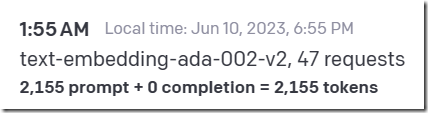

If we log into OpenAI and look at the usage (https://platform.openai.com/account/usage)

We can see that the cost to create 47 embeddings of 200 words each uses 2,155 tokens.

The current cost for embeddings is $0.0004 per 1K tokens.

Perform A Vector Search

On the Home page a user can enter a search query.

When they click the SEARCH button the following code executes to create an embedding of the search query and call the SQL function to calculate the cosign similarity with the other documents in the database:

// Clear the similarities collectionsimilarities.Clear();// Create a new instance of OpenAIClient using the ApiKey and Organizationvar api = new OpenAIClient(new OpenAIAuthentication(ApiKey, Organization));// Get the model detailsvar model =await api.ModelsEndpoint.GetModelDetailsAsync("text-embedding-ada-002");// Get embeddings for the search textvar SearchEmbedding =await api.EmbeddingsEndpoint.CreateEmbeddingAsync(InputText, model);// Get embeddings as an array of floatsvar EmbeddingVectors =SearchEmbedding.Data[0].Embedding.Select(d => (float)d).ToArray();// Loop through the embeddingsList<VectorData> AllVectors = new List<VectorData>();for (int i = 0; i < EmbeddingVectors.Length; i++){var embeddingVector = new VectorData{VectorValue = EmbeddingVectors[i]};AllVectors.Add(embeddingVector);}// Convert the floats to a single string to pass to the functionvar VectorsForSearchText ="[" + string.Join(",", AllVectors.Select(x => x.VectorValue)) + "]";// Call the SQL function to get the similar content articlesvar SimularContentArticles =@Service.GetSimilarContentArticles(VectorsForSearchText);

This is the function that is called:

/*From GitHub project: Azure-Samples/azure-sql-db-openai*/CREATE function [dbo].[SimilarContentArticles](@vector nvarchar(max))returns tableasreturn with cteVector as(selectcast([key] as int) as [vector_value_id],cast([value] as float) as [vector_value]fromopenjson(@vector)),cteSimilar as(select top (10)v2.ArticleDetailId,sum(v1.[vector_value] * v2.[vector_value]) /(sqrt(sum(v1.[vector_value] * v1.[vector_value]))*sqrt(sum(v2.[vector_value] * v2.[vector_value]))) as cosine_distancefromcteVector v1inner joindbo.ArticleVectorData v2 on v1.vector_value_id = v2.vector_value_idgroup byv2.ArticleDetailIdorder bycosine_distance desc)select(select [ArticleName] from [Article] where id = a.ArticleId) as ArticleName,a.ArticleContent,a.ArticleSequence,r.cosine_distancefromcteSimilar rinner joindbo.[ArticleDetail] a on r.ArticleDetailId = a.idGO

A key to this solution is that this function runs fast because we created the following columstore index when we created the database table.

(See: Vector Similarity Search with Azure SQL database and OpenAI for more information):

CREATE NONCLUSTERED COLUMNSTORE INDEX[ArticleDetailsIdClusteredColumnStoreIndex] ON[dbo].[ArticleVectorData]([ArticleDetailId])WITH (DROP_EXISTING = OFF, COMPRESSION_DELAY = 0) ON [PRIMARY]

The following code parses and sorts the result:

// Loop through SimularContentArticlesforeach (var Article in SimularContentArticles){// Add to similarities collectionsimilarities.Add(new ArticleResultsDTO(){Article = Article.ArticleName,Sequence = Article.ArticleSequence,Contents = Article.ArticleContent,Match = Article.cosine_distance ?? 0});}// Sort the results by similarity in descending ordersimilarities.Sort((a, b) => b.Match.CompareTo(a.Match));// Take the top 10 resultssimilarities = similarities.Take(10).ToList();// Sort by the first colum then the second columnsimilarities.Sort((a, b) => a.Sequence.CompareTo(b.Sequence));similarities.Sort((a, b) => a.Article.CompareTo(b.Article));

The results are available on the SEARCH RESULTS tab:

Finally, the following code passes the results from the vector search to a prompt (by calling GetInstruction(knowledge) shown later) and passing that result as a Role.System message.

It then adds a Role.User message that contains the original search query by the user, and passes that to OpenAI to get a completion:

// concatenate column 3 from similarities collection into Knowledgestring ExistingKnowledge = "";foreach (var item in similarities){ExistingKnowledge +=$"#Article: {item.Article} #Article Contents: {item.Contents}";}// Create a new instance of OpenAIClient using the ApiKey and Organizationvar api = new OpenAIClient(new OpenAIAuthentication(ApiKey, Organization));// Create a new list of chatMessages objectsvar chatMessages = new List<Message>();// Add the existing knowledge to the chatMessages listchatMessages.Add(new Message(Role.System, GetInstruction(ExistingKnowledge)));// Add the user input to the chatMessages listchatMessages.Add(new Message(Role.User, InputText));// Call ChatGPT// Create a new ChatRequest object with the chat prompts and pass// it to the API's GetCompletionAsync method// temperature is set to 0.0, which controls the randomness// Lower values produce more deterministic outputs.var chatRequest = new ChatRequest(chatMessages,temperature: 0.0,topP: 1,frequencyPenalty: 0,presencePenalty: 0,model: Model.GPT3_5_Turbo);var result = await api.ChatEndpoint.GetCompletionAsync(chatRequest);// Output resultAIOutput = result.FirstChoice;// If "##Answer:" is in the output then remove everything before itif (AIOutput.Contains("##Answer:")){AIOutput = AIOutput.Substring((AIOutput.IndexOf("##Answer:") + 9));}// Clear InputTextInputText = "";

(Note: We are actually using the OpenAI ChatGPT endpoint to get the completion, not the normal OpenAI completion endpoint because the ChatGPT endpoint is faster, cheaper, and produces better results).

The user is then shown the results:

The Prompt

The key to the RAG pattern is constructing a prompt that takes in the output from the vector search and uses it as grounding.

When constructing any prompt, the following is recommended:

- Explain what you want

- Explain what you don't want

- Provide examples

This is the prompt that is used:

public string GetInstruction(string Knowledge){string instruction = $@"Answer questions using the given knowledge ONLY.For tabular information return it as an HTML table.Do not return markdown format.Each knowledge has a #Article: source name and an #Article Contents: with the actual informationDo not return the ##Knowledge: section only return the ##Answer: sectionAlways include the source name for each knowledge you use in the answer.Don't cite knowledge that is not available in the knowledge list.If you cannot answer using the knowledge list only, say you don't know.You have this knowledge available: {Knowledge}### EXAMPLE 1Question: 'What Microsoft Blazor?'##Knowledge:#Article: Blazor One #Article Contents: Blazor allows you to build web apps.#Article: Blazor One #Article Contents: Both client and server code is written in C#, allowing you to share code and libraries.#Article: Blazor Two #Article Contents: It uses C# instead of JavaScript allowing you to share code and libraries.##Answer:Blazor apps are composed of reusable web UI components implemented using C#, HTML, and CSS.Both client and server code is written in C#, allowing you to share code and libraries.References: [Blazor One], [Blazor Two]### EXAMPLE 2Question: 'What happens in a performance review'##Knowledge:##Answer:I don't know###";return instruction.Trim();}

Download

The project is available on the Downloads page on this site.

You must have Visual Studio 2022 (or higher) installed to run the code.

Links

- Vector Similarity Search with Azure SQL database and OpenAI

- OpenAI Embeddings API - Searching Financial Documents

- Revolutionize your Enterprise Data with ChatGPT: Next-gen Apps w/ Azure OpenAI and Cognitive Search

- Riding the AI Wave with Vector Databases: How they work (and why VCs love them)

- How to use the Azure OpenAI Embedding model to find the most relevant documents

- Grounding LLMs

- What are Columnstore Indexes? - Simple Talk (red-gate.com)