6/28/2025 Admin

Creating a Blazor Aspire Local AI Chat Bot

Local Internal AI Chat Bot is a Blazor web application that brings AI-driven chat and knowledge retrieval to your local environment. Using a phi3.5 model hosted in Ollama together with vector search, it lets users interact with a chat bot that answers questions from custom, user-provided data.

Documents are chunked, embedded, and stored for fast semantic search, ensuring relevant and accurate responses. The interface, built with Radzen components, allows for easy data management and real-time chat experiences.

Best of all, the entire project runs on your own computer, so you do not need to subscribe to an external AI service such as OpenAI.

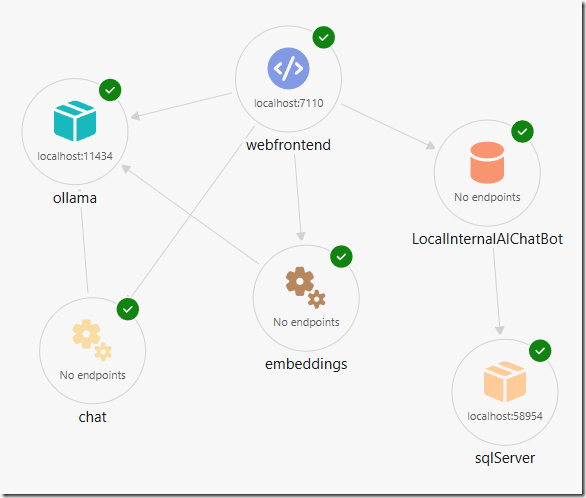

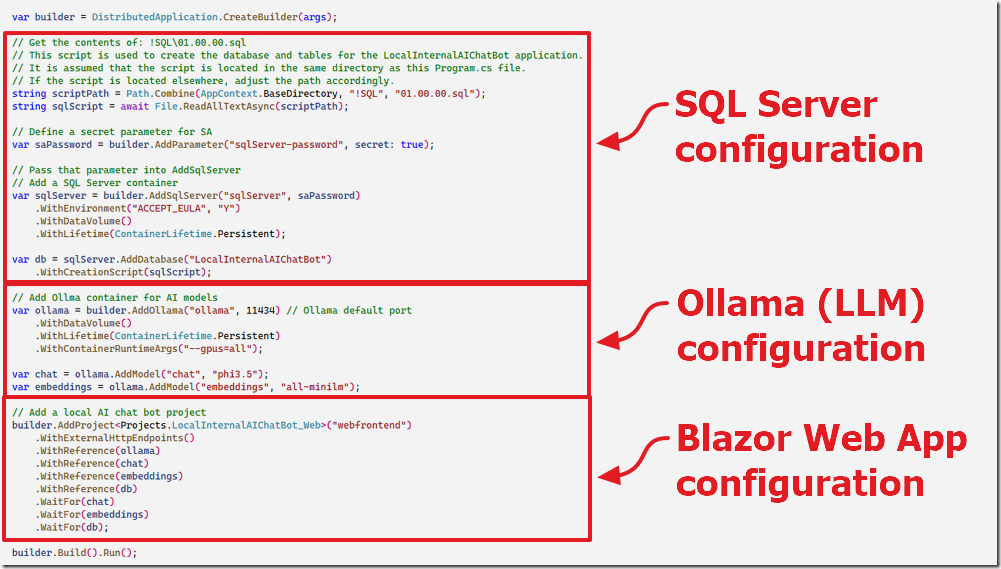

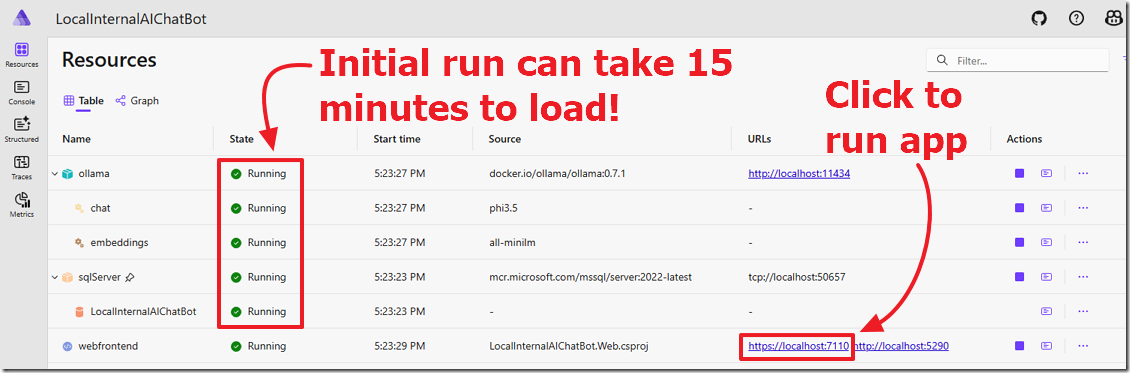

The Aspire solution is composed of the following components:

- webfrontend – Blazor web app

- ollama (container)

- chat – AI chat service using the phi3.5 model

- embeddings – AI vector embedding service

- sqlServer (container)

- LocalInternalAIChatBot – SQL database

The Project Code

To run the Microsoft Aspire-based Local Internal AI Chat Bot project, you'll need a development environment equipped with Visual Studio 2022 or later, which supports the .NET Aspire workload and provides seamless integration for distributed applications.

Additionally, containerization is essential for running services like Ollama and SQL Server, so either Docker Desktop or Podman Desktop must be installed and running on your system.

These tools enable Aspire to orchestrate containers for AI models, databases, and supporting services as part of its distributed application model. Make sure WSL 2 is enabled on Windows if you're using Docker, and confirm your machine supports virtualization features required by these container platforms.

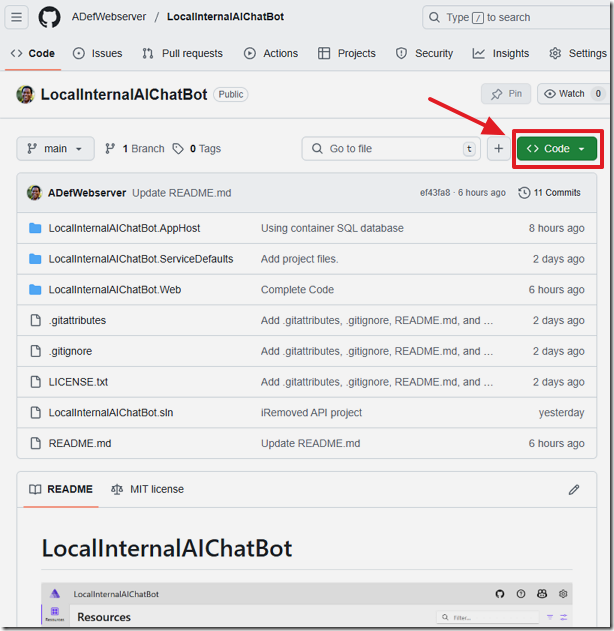

Download the code from the GitHub repo at: https://github.com/ADefWebserver/LocalInternalAIChatBot.

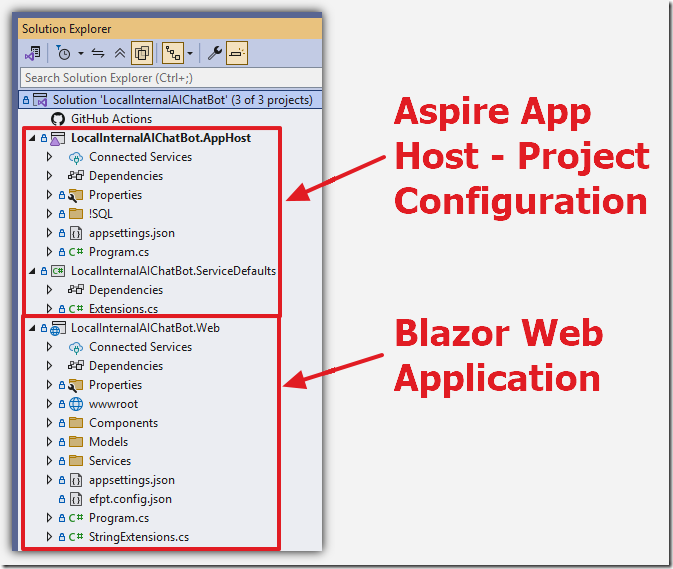

When you open the project in Visual Studio, you will see that the Aspire App Host provides orchestration, configuration, and observability for your distributed solution. The Blazor web application delivers the interactive user experience and AI-powered features, using the shared service defaults and infrastructure that Aspire provides.

Aspire App Host

The Aspire App Host project is configured in the Program.cs file in the AppHost project.

The App Host project, together with the Service Defaults project, is designed to orchestrate, configure, and run multiple services as a single application.

It provides:

• Service Discovery: Automatically registers and discovers services within the solution. This configures and launches the SQL Server container and Ollama container.

• Resilience & Health Checks: Adds health endpoints and resilience policies for robust microservice communication.

• OpenTelemetry Integration: Enables distributed tracing and metrics collection out-of-the-box.

• Centralized Configuration: Manages configuration and environment variables for all services.

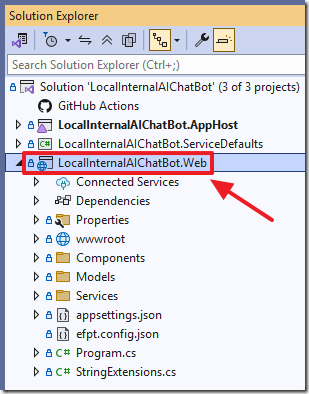

Blazor Web Application

The Blazor web application is contained in the Web project.

It is built with Blazor Server and targets .NET 9. Its structure includes:

• Pages/Components: Razor files (e.g., Home.razor, RagChat.razor) define the UI and user interactions.

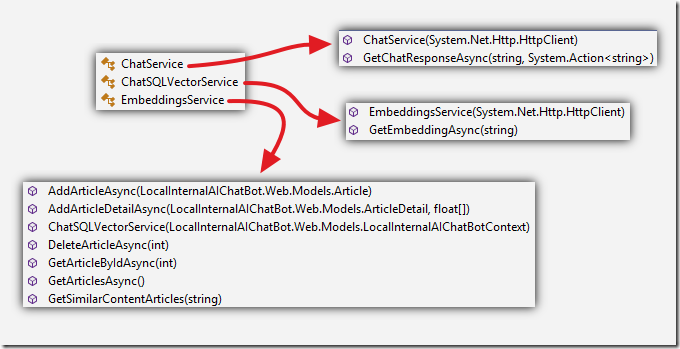

• Services: C# classes (e.g., ChatService, EmbeddingsService, ChatSQLVectorService) handle business logic, API calls, and AI integration.

• DTOs/Models: Data transfer objects and models represent chat messages, articles, and embeddings.

• Dependency Injection: Services are injected into components for clean separation of concerns.

• Radzen Components: UI is built using Radzen Blazor components for grids, dialogs, and forms.

• AI Integration: The app communicates with AI endpoints (e.g. Ollama) for chat and embeddings, using HTTP APIs and streaming responses.

Run The Project

In Visual Studio, run the solution.

This will open the Aspire dashboard in your web browser.

Note: On the first run, downloading the Ollama and SQL Server container images can take 15 minutes. This is a one-time operation, and the project will take seconds to load on subsequent runs.

When the WebFrontEnd (Blazor app) is in the Running state, click the https: link to run the application.

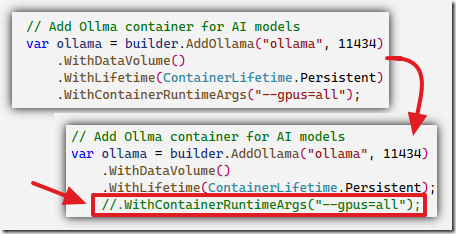

If the Ollama container fails to load, open the Program.cs file in the AppHost project and comment out the line:

    .WithContainerRuntimeArgs("--gpus=all");

Note: If the application times out after making this change, you will need to run it on hardware that allows you to enable GPUs.

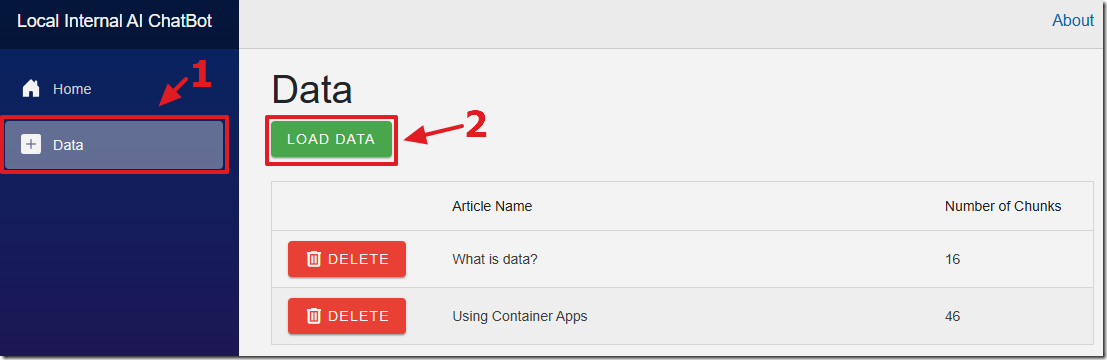

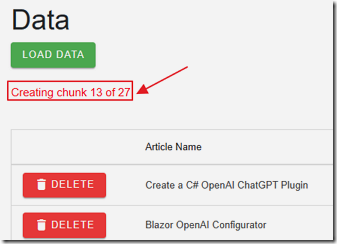

The first step is to navigate to the Data page and click the LOAD DATA button.

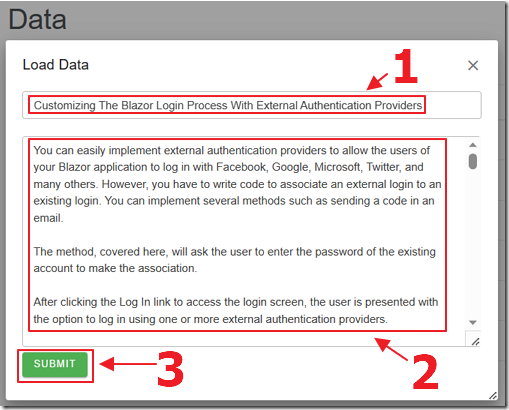

Enter a title for the Article, paste in its contents, and click the SUBMIT button.

The contents of the Article will be split into 200-word chunks, and each chunk will be passed to Ollama to create an embedding.

Each embedding is an array of floating-point values (referred to as vectors in this article) that is stored in the SQL Server database.

A popup will indicate when the process is complete.

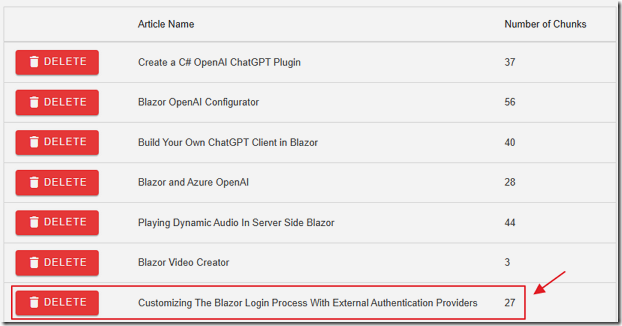

The Article will display in the Article list.

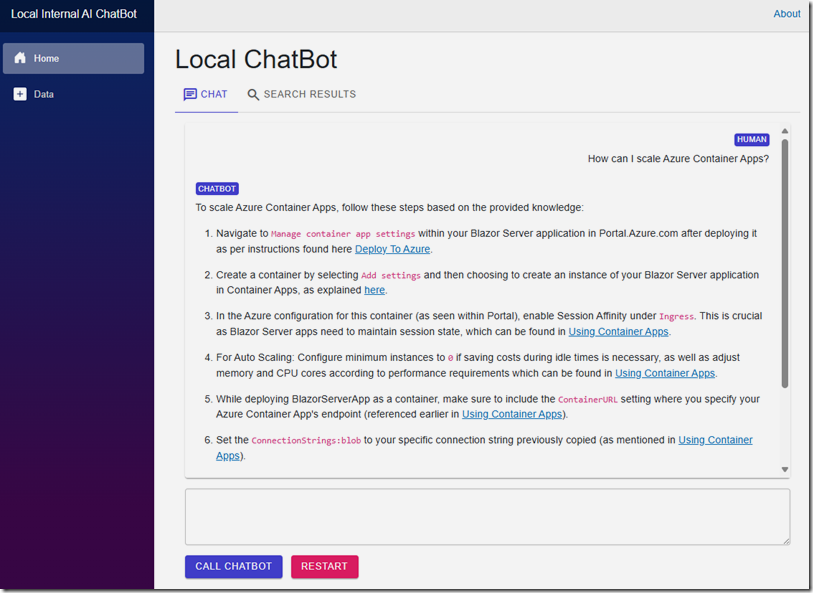

The Chat Page

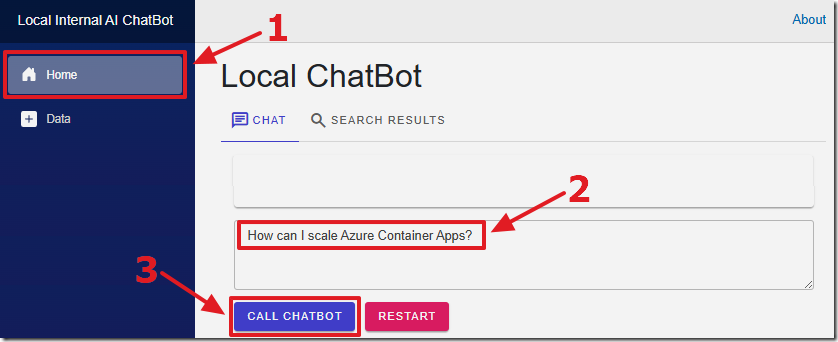

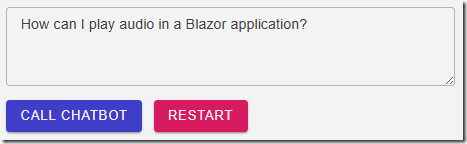

Navigate to the Home page.

Enter a search request and press the CALL CHATBOT button.

The response will display.

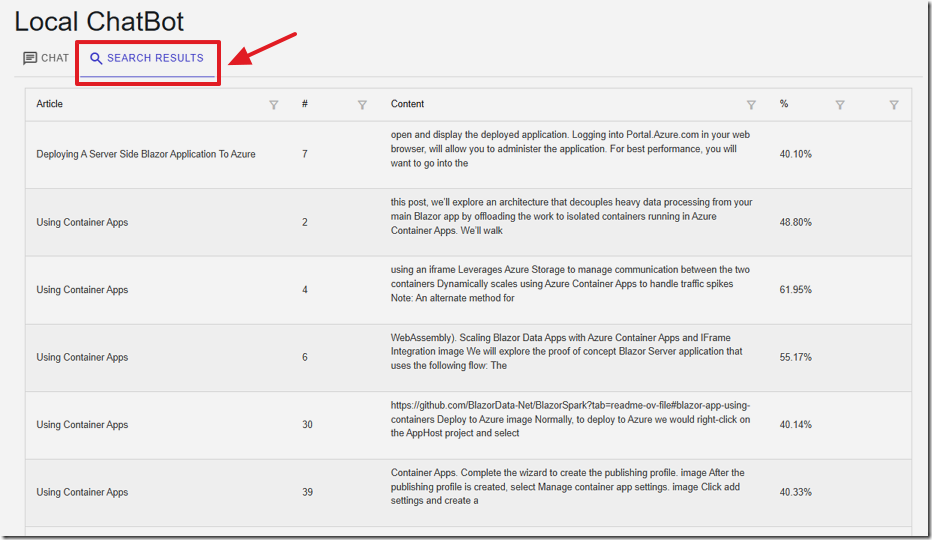

Search Results

We can click on the SEARCH RESULTS tab to see the top results retrieved from the vector search.

The results will indicate the Article and the content from the chunk.

The match percentage will be shown, and the chunks in each Article will be listed.

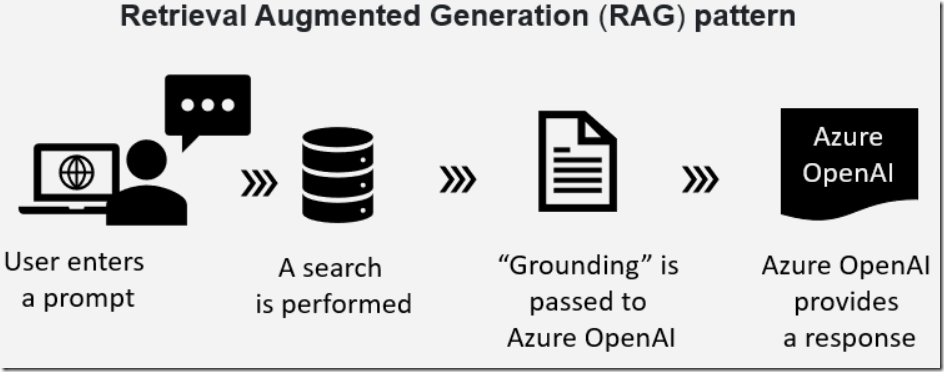

How It Works – The RAG Pattern

This application implements the Retrieval Augmented Generation (RAG) pattern.

As described in the article Bring Your Own Data to Azure OpenAI, the RAG pattern is a technique for building natural language generation systems that can retrieve and use relevant information from external sources.

The concept is to first retrieve a set of passages related to the search query, then use them to ground the prompt, and finally generate a natural language response that incorporates the retrieved information.
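The three steps can be sketched in a few lines of C#. This is a minimal illustration and not the project's code: `RagSketch`, `BuildGroundedPrompt`, and the `embed` and `similarity` delegates are hypothetical names, and the prompt layout is simplified.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RagSketch
{
    // Retrieve the best-matching passages for a question and wrap them,
    // together with the question, into a single grounded prompt.
    public static string BuildGroundedPrompt(
        string question,
        Func<string, float[]> embed,                 // text -> embedding (assumed supplied by caller)
        Func<float[], float[], double> similarity,   // higher value = closer match
        IReadOnlyList<(string Text, float[] Vector)> passages,
        int top)
    {
        // 1. Retrieve: embed the question and rank the stored passages.
        float[] queryVector = embed(question);
        var grounding = passages
            .OrderByDescending(p => similarity(queryVector, p.Vector))
            .Take(top)
            .Select(p => p.Text);

        // 2. Augment: place the retrieved text in the prompt as grounding.
        // 3. Generate: the caller sends the returned prompt to the language model.
        return "Knowledge:\n" + string.Join("\n", grounding) +
               "\n\nQuestion:\n" + question;
    }
}
```

In the application itself, the embedding comes from Ollama, the ranking is done by a SQL function, and the prompt is considerably more detailed.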

Getting Embeddings

To obtain the vectors needed for the vector search, we process the text by calling a model that produces embeddings that consist of an array of floats.

An embedding is a mathematical representation of a word or phrase that captures its meaning in a high-dimensional space.

For example, suppose a query is entered about playing audio in a Blazor application.

The query is turned into an embedding, and cosine similarity is used to compare its vector against the vectors of the text chunks stored in the database.

The text chunks associated with the closest matching vectors are then provided to the prompt as grounding, and the prompt is passed to the large language model.
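To make the comparison concrete, here is a minimal sketch of cosine similarity over two float arrays. The names `VectorMath` and `CosineSimilarity` are illustrative and do not come from the project, which performs this calculation in SQL.

```csharp
using System;

public static class VectorMath
{
    // Cosine similarity: dot(a, b) / (|a| * |b|).
    // The result lies between -1 and 1; values near 1 mean the two
    // embeddings point in nearly the same direction.
    public static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += (double)a[i] * b[i];
            normA += (double)a[i] * a[i];
            normB += (double)b[i] * b[i];
        }

        // A zero vector has no direction; treat it as "no similarity".
        if (normA == 0 || normB == 0)
            return 0;

        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```

Because only the direction matters, a chunk and a query can score close to 1 even when their vectors differ in length, and two unrelated (orthogonal) vectors score 0.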

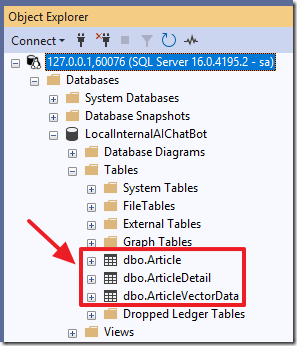

How It Works – The Database

When the application is running, open SQL Server Management Studio.

You can obtain the SQL Server port number and SQL Server password from the Aspire dashboard.

You can then connect to the database and view the data.

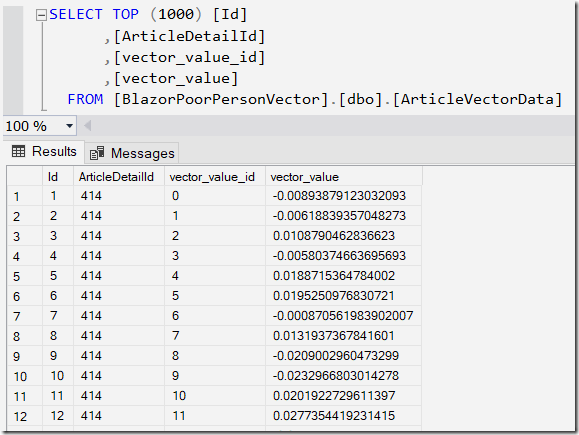

The Article table has associated records in the ArticleDetail table, and each ArticleDetail record has associated vectors stored in the ArticleVectorData table.

Each ArticleDetail contains an array of vectors, each stored in a separate row in the ArticleVectorData table.

Each vector for an ArticleDetail has a vector_value_id, which is the sequential position of that vector in the array returned by the embedding model for the ArticleDetail.
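As a rough sketch of that layout, the code below turns one embedding into one row per value. `ArticleVectorRow` and `VectorRows` are hypothetical names that mirror the column names. The positions are zero-based here so that they line up with the keys SQL Server's OPENJSON produces for a JSON array; whether the project numbers them the same way is an assumption.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory shape of one ArticleVectorData row.
public record ArticleVectorRow(int ArticleDetailId, int VectorValueId, double VectorValue);

public static class VectorRows
{
    // One row per embedding element; VectorValueId is the element's
    // zero-based position in the embedding array.
    public static List<ArticleVectorRow> FromEmbedding(int articleDetailId, float[] embedding) =>
        embedding
            .Select((value, index) => new ArticleVectorRow(articleDetailId, index, value))
            .ToList();
}
```

Storing the position alongside each value is what later allows a query vector and a stored vector to be paired element by element with a simple join.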

To compare the vectors from the search request against the stored vectors (held in the vector_value field), we use a cosine similarity calculation.

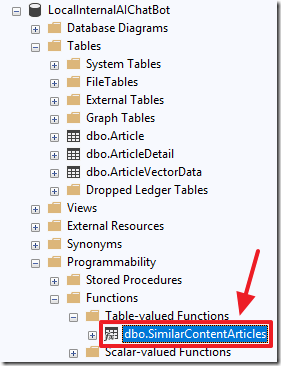

The SimilarContentArticles function pairs each vector from the search request with the stored vector that has the same vector_value_id.

This is the code for the function:

    /*
        From GitHub project: Azure-Samples/azure-sql-db-openai
    */
    ALTER function [dbo].[SimilarContentArticles](@vector nvarchar(max))
    returns table
    as
    return with cteVector as
    (
        select
            cast([key] as int) as [vector_value_id],
            cast([value] as float) as [vector_value]
        from
            openjson(@vector)
    ),
    cteSimilar as
    (
        select top (10)
            v2.ArticleDetailId,
            sum(v1.[vector_value] * v2.[vector_value]) /
            (
                sqrt(sum(v1.[vector_value] * v1.[vector_value]))
                *
                sqrt(sum(v2.[vector_value] * v2.[vector_value]))
            ) as cosine_distance
        from
            cteVector v1
        inner join
            dbo.ArticleVectorData v2 on v1.vector_value_id = v2.vector_value_id
        group by
            v2.ArticleDetailId
        order by
            cosine_distance desc
    )
    select
        (select [ArticleName] from [Article] where id = a.ArticleId) as ArticleName,
        a.ArticleContent,
        a.ArticleSequence,
        r.cosine_distance
    from
        cteSimilar r
    inner join
        dbo.[ArticleDetail] a on r.ArticleDetailId = a.id
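For readers more at home in C# than SQL, the same join, group, and rank logic can be approximated in memory with LINQ. This is only a sketch for understanding: `StoredValue` and `SimilaritySearch.TopMatches` are hypothetical names, and the application itself runs the SQL function. Note that the column named cosine_distance actually holds a similarity score, where higher means closer, which is why the results are sorted in descending order.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for one ArticleVectorData row.
public record StoredValue(int ArticleDetailId, int VectorValueId, double VectorValue);

public static class SimilaritySearch
{
    // Pair query and stored values by position, group by chunk,
    // compute cosine similarity per chunk, and keep the best matches.
    public static List<(int ArticleDetailId, double Score)> TopMatches(
        double[] query, IEnumerable<StoredValue> stored, int top = 10)
    {
        return stored
            // Equivalent of the inner join on vector_value_id.
            .Where(s => s.VectorValueId >= 0 && s.VectorValueId < query.Length)
            .GroupBy(s => s.ArticleDetailId)
            .Select(g =>
            {
                double dot = g.Sum(s => query[s.VectorValueId] * s.VectorValue);
                double normQ = Math.Sqrt(g.Sum(s => query[s.VectorValueId] * query[s.VectorValueId]));
                double normS = Math.Sqrt(g.Sum(s => s.VectorValue * s.VectorValue));
                double score = (normQ == 0 || normS == 0) ? 0 : dot / (normQ * normS);
                return (ArticleDetailId: g.Key, Score: score);
            })
            .OrderByDescending(r => r.Score)
            .Take(top)
            .ToList();
    }
}
```

Doing this work in the database avoids loading every stored vector into the web application, which is presumably why the project keeps the calculation in a SQL function.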

How It Works – The Code

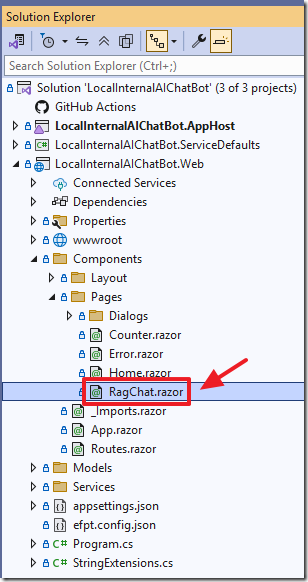

Data is imported into the database (for use in the RAG process) by code contained in the RagChat.razor control.

The following code is called when the import process begins:

    // Check that article contains data
    if ((article.Title.Trim() != "") && (article.Contents.Trim() != ""))
    {
        // Split the article into chunks
        var ArticleChuks = SplitTextIntoChunks(article.Contents.Trim(), 200);

        // Create Article object
        var objNewArticle = new Article();
        objNewArticle.ArticleName = article.Title;

        // Add article to database
        var InsertArticle = await @Service.AddArticleAsync(objNewArticle);

        // Add article details to database
        foreach (var chunk in ArticleChuks)
        {
            // Update the status
            Status =
                $"Creating chunk {ArticleChuks.IndexOf(chunk) + 1} of {ArticleChuks.Count}";
            StateHasChanged();

            // Get embeddings for the chunk
            var embeddings = await embeddingsService.GetEmbeddingAsync(chunk);

            // Create ArticleDetail object
            var objNewArticleDetail = new ArticleDetail();
            objNewArticleDetail.Article = InsertArticle;
            objNewArticleDetail.ArticleContent = chunk;
            objNewArticleDetail.ArticleSequence = ArticleChuks.IndexOf(chunk) + 1;

            // Add article detail to database
            var InsertArticleDetail =
                await @Service.AddArticleDetailAsync(objNewArticleDetail, embeddings);
        }

        // Refresh the grid
        Status = "";
        StateHasChanged();

        // Get the articles from the database
        articles = await @Service.GetArticlesAsync();

        // Show a success dialog
        await dialogService.Alert(
            "Article added to database",
            "Success",
            new AlertOptions() { OkButtonText = "Ok" });
    }
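The import code calls SplitTextIntoChunks, whose body is not shown in this article. A minimal sketch of a word-based splitter might look like the following. `TextChunker.SplitIntoWordChunks` is a hypothetical name, and the project's own implementation may differ, for example in how it treats punctuation or sentence boundaries.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TextChunker
{
    // Split text on whitespace and regroup the words into chunks of at most
    // wordsPerChunk words. The last chunk may be shorter.
    public static List<string> SplitIntoWordChunks(string text, int wordsPerChunk)
    {
        if (wordsPerChunk <= 0)
            throw new ArgumentOutOfRangeException(nameof(wordsPerChunk));

        string[] words = text.Split(
            new[] { ' ', '\t', '\r', '\n' },
            StringSplitOptions.RemoveEmptyEntries);

        return words
            .Chunk(wordsPerChunk)                      // Enumerable.Chunk requires .NET 6 or later
            .Select(group => string.Join(" ", group))
            .ToList();
    }
}
```

Fixed-size chunks are simple to produce, but a chunk boundary can fall in the middle of a sentence. Smaller chunks give more precise matches, while larger ones carry more context into the prompt.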

This calls the embedding model, hosted in the Ollama service, that produces the embedding composed of vectors, using the following code:

    /// <summary>
    /// A service class responsible for generating vector embeddings from a given text prompt
    /// by making an HTTP request to an Ollama-based embedding endpoint.
    /// </summary>
    public class EmbeddingsService(HttpClient _httpClient)
    {
        /// <summary>
        /// Asynchronously retrieves an embedding vector for the provided prompt text.
        /// </summary>
        /// <param name="prompt">The input text for which the embedding should be generated.</param>
        /// <returns>A float array representing the embedding vector.</returns>
        /// <exception cref="Exception">Thrown if the HTTP request fails
        /// or the expected JSON structure is not returned.</exception>
        public async Task<float[]> GetEmbeddingAsync(string prompt)
        {
            // Specify the name of the model used to generate embeddings
            string _model = "all-minilm";

            // Construct an anonymous object representing the JSON body of the POST request.
            // The server expects a model identifier and a prompt string to generate embeddings.
            var request = new
            {
                model = _model,
                prompt = prompt
            };

            // Make an asynchronous HTTP POST request to the "/api/embeddings" endpoint,
            // sending the request object as JSON.
            var response = await _httpClient.PostAsJsonAsync("/api/embeddings", request);

            // If the HTTP response status is not successful (200 OK, etc.), throw an error
            if (!response.IsSuccessStatusCode)
                throw new Exception($"Embedding request failed: {response.StatusCode}");

            // Read the response content as a stream to allow efficient parsing without full buffering
            using var contentStream = await response.Content.ReadAsStreamAsync();

            // Parse the JSON content from the response stream into a JsonDocument
            using var jsonDoc = await JsonDocument.ParseAsync(contentStream);

            // Access the root of the parsed JSON document
            var root = jsonDoc.RootElement;

            // Try to extract the "embedding" property from the root JSON object.
            // If the property doesn't exist, the expected response structure is invalid.
            if (!root.TryGetProperty("embedding", out var embeddingElement))
                throw new Exception("Missing 'embedding' in response.");

            // The "embedding" field should be an array of numbers.
            // Convert each JSON number to a float using .GetSingle(), and return as a float[]
            return embeddingElement.EnumerateArray().Select(e => e.GetSingle()).ToArray();
        }
    }

The chat UI is contained in the Home.razor control.

When the user enters a prompt and clicks the CALL CHATBOT button, the following code is called to get the embedding for the prompt and then search the vector database for matches:

    similarities.Clear();

    // Get embeddings for the prompt
    var embeddings = await embeddingsService.GetEmbeddingAsync(prompt);

    // Loop through the embeddings
    List<VectorData> AllVectors = new List<VectorData>();
    for (int i = 0; i < embeddings.Length; i++)
    {
        var embeddingVector = new VectorData
        {
            VectorValue = embeddings[i]
        };
        AllVectors.Add(embeddingVector);
    }

    // Convert the floats to a single string to pass to the function
    var VectorsForSearchText =
        "[" + string.Join(",", AllVectors.Select(x => x.VectorValue)) + "]";

    // Call the SQL function to get the similar content articles
    var SimularContentArticles =
        @Service.GetSimilarContentArticles(VectorsForSearchText);

    // Loop through SimularContentArticles
    foreach (var Article in SimularContentArticles)
    {
        // Add to similarities collection
        similarities.Add(
            new ArticleResultsDTO()
            {
                Article = Article.ArticleName,
                Sequence = Article.ArticleSequence,
                Contents = Article.ArticleContent,
                Match = Article.cosine_distance ?? 0
            });
    }

    // Sort the results by similarity in descending order
    similarities.Sort((a, b) => b.Match.CompareTo(a.Match));

    // Take the top 10 results
    similarities = similarities.Take(10).ToList();

    // Sort by the first column then the second column.
    // List<T>.Sort is not guaranteed to be stable, so both keys
    // are compared in a single pass instead of two separate sorts.
    similarities.Sort((a, b) =>
    {
        int byArticle = a.Article.CompareTo(b.Article);
        return byArticle != 0 ? byArticle : a.Sequence.CompareTo(b.Sequence);
    });

    // Call the chat model
    await CallChatGPT();
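One detail worth noting in the string-building step is that joining floats with string.Join formats each number using the current culture. If the application runs under a culture that uses a comma as the decimal separator, the result would no longer be a valid JSON array for OPENJSON to parse. The sketch below shows a culture-invariant alternative. `VectorJson.ToJsonArray` is a hypothetical helper and not part of the project.

```csharp
using System.Globalization;
using System.Linq;

public static class VectorJson
{
    // Serialize an embedding as a JSON array, always using '.' as the
    // decimal separator regardless of the machine's regional settings.
    // Assumes the embedding contains only finite values (no NaN or Infinity).
    public static string ToJsonArray(float[] embedding) =>
        "[" +
        string.Join(",", embedding.Select(v => v.ToString(CultureInfo.InvariantCulture))) +
        "]";
}
```

For example, `VectorJson.ToJsonArray(new float[] { 0.5f, -1f })` produces the string `[0.5,-1]`.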

This code calls a method in the ChatSQLVectorServices class:

    public List<SimilarContentArticlesResult>
        GetSimilarContentArticles(string vector)
    {
        return _context.SimilarContentArticles(vector).ToList();
    }

This method calls the SQL function covered earlier.

The following code uses the results of that search to build a final prompt that is then sent to the large language model:

    // Step 1: Build knowledge base
    string ExistingKnowledge = string.Join(" ", similarities.Select(item =>
        $"#Article: {item.Article} #Article Contents: {item.Contents}"));

    // Step 2: Add user message
    ChatMessageDTOs.Add(new ChatMessageDTO(ChatRole.User, prompt));

    // Step 3: Add placeholder assistant message
    var assistantMessage = new ChatMessageDTO(ChatRole.Assistant, "");
    ChatMessageDTOs.Add(assistantMessage);
    StateHasChanged(); // Render placeholder

    // Step 4: Call the chat service to get the response
    string SystemMessage = "You are helpful Assistant.";
    SystemMessage += "You will always reply with a Markdown formatted response.";

    string ChatBotPrompt =
        $"###System Message:\n{SystemMessage}\n\n##Question:\n{prompt}\n\n";
    ChatBotPrompt = ChatBotPrompt +
        $"##Instruction:\n{GetInstruction(ExistingKnowledge)}";

    await chatService.GetChatResponseAsync(ChatBotPrompt, async chunk =>
    {
        assistantMessage.content += chunk;
        await InvokeAsync(StateHasChanged); // Notify Blazor of changes
    });

The Prompt

When constructing any prompt, the following is recommended:

- Explain what you want

- Explain what you don't want

- Provide examples

This is the code that takes the information retrieved from the database and builds the instructions for the prompt that is sent to the large language model:

    public string GetInstruction(string Knowledge)
    {
        string instruction = $@"
    #1 Answer questions using the given knowledge ONLY.
    #2 Each knowledge has a #Article: source name and an #Article Contents: with the actual information
    #3 Do not return the ##Knowledge: section only return the contents of the ##Answer: section
    #4 Always include the source name for each knowledge you use in the answer.
    #5 Don't cite knowledge that is not available in the knowledge list.
    #6 If you cannot answer using the knowledge list only, say you don't know.
    ### KNOWLEDGE BEGIN
    {Knowledge}
    ### KNOWLEDGE END
    #7 These are examples of how to answer questions:
    ### EXAMPLE 1
    Question: 'What Microsoft Blazor?'
    ##Knowledge:
    #Article: Blazor One #Article Contents: Blazor allows you to build web apps.
    #Article: Blazor One #Article Contents: Both client and server code is written in C#, allowing you to share code and libraries.
    #Article: Blazor Two #Article Contents: It uses C# instead of JavaScript allowing you to share code and libraries.
    ##Answer BEGIN ##
    Blazor apps are composed of reusable web UI components implemented using **C#**, **HTML**, and **CSS**.
    Both client and server code is written in **C#**, allowing you to share code and libraries.
    References: [Blazor One], [Blazor Two]
    ##Answer END ##
    ### EXAMPLE 2
    Question: 'What happens in a performance review'
    ##Knowledge:
    ##Answer BEGIN ##
    I don't know the answer to this question.
    ##Answer END ##
    ";

        return instruction.Trim();
    }

Download Code

Links

- Local Internal AI Chat Bot (GitHub)

- Azure OpenAI RAG Pattern using a SQL Vector Database

- Creating A Blazor Chat Application With Azure OpenAI

- Create a .NET AI app to chat with custom data using the AI app template extensions

- .NET Aspire

- Docker Desktop

- Podman Desktop

- How to install Linux on Windows with WSL

- SQL Server Management Studio