10/27/2025 Admin

Overcoming Limitations When Using AI

Over the past few years I’ve been experimenting with and building applications that use generative AI. In this post I’ll share some of the challenges and limitations I’ve discovered along the way and, most importantly, how I overcome them.

The main problems I have found with AI fall into three areas:

- AI can’t do math very well

- AI can’t write stories very well

- AI can’t create applications very well

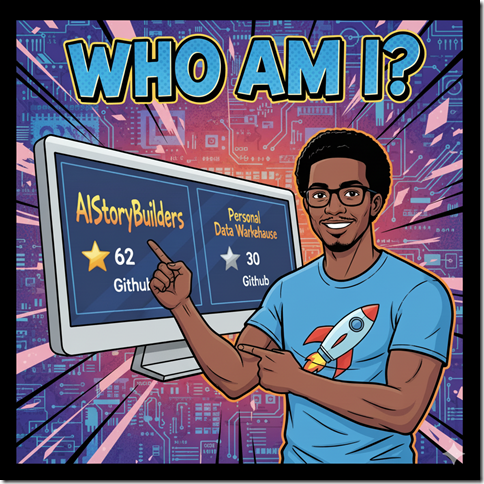

Who Am I?

I’ve been working with AI coding for about three years now, ever since ChatGPT first appeared. In that time, I’ve built a number of projects including:

-

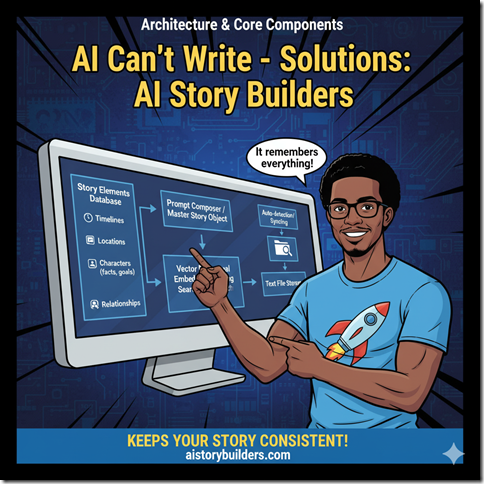

AIStoryBuilders — An open-source tool that helps writers build narratives using structured context.

-

Personal Data Warehouse — An open-source tool that lets you build your own local data warehouse, import data, ask plain-English questions, and have AI generate the code for transformations.

I have also written several articles including:

Why We Like AI

So, why do we like AI so much?

At its core, AI makes us more productive. It can write text fast and generate code at incredible speed. That doesn’t mean it’s perfect, far from it, but it does mean we can get more done in less time.

Here are a few of the advantages I’ve observed:

- Speed & scale: AI can generate large volumes of text, code, or ideas in seconds.

- Assistance: It acts like a collaborative partner where we provide oversight and guidance.

But (and this is key) “more done” is only valuable if it’s done well.

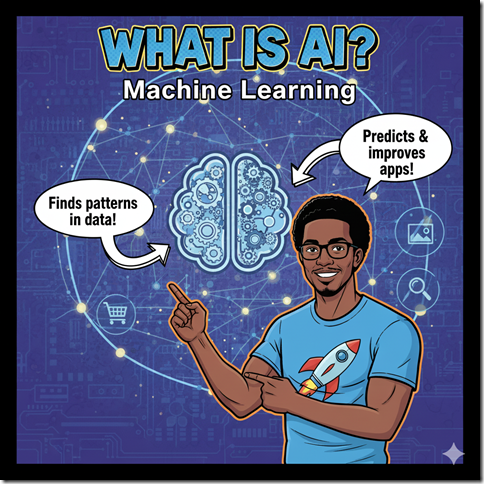

What Is AI?

Let’s take a moment to break down what we actually mean when we say “AI”.

Machine Learning

This is the part of AI that analyzes large amounts of data, finds patterns, and makes predictions. It powers everyday technologies we take for granted, from recommendation systems and speech recognition to image analysis and fraud detection.

While this *is* AI, it is not the AI most people are using right now, and it is not what people mean when they say “AI”.

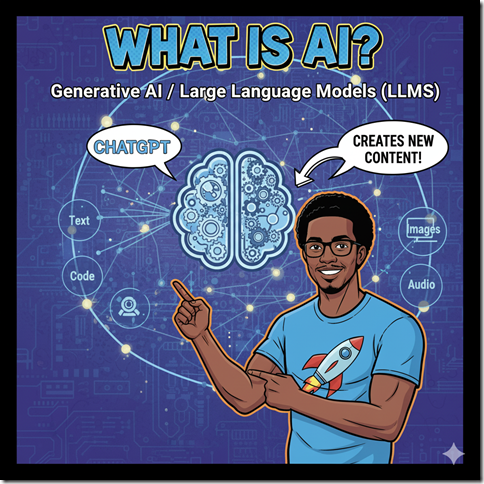

Generative AI & LLMs

When people say “AI” they usually mean “generative AI”, powered by Large Language Models (LLMs). These models don’t just predict, they create. They can generate text, images, code, even audio, by learning from massive datasets. That’s what makes tools like ChatGPT possible.

The key difference:

- Machine Learning predicts what’s likely to happen in the future.

- Generative AI produces new content.

Why (All) AI Has Limitations

Here’s the key point: AI is not alive.

Living things have desires. Even a tree can decide to grow through your drain pipes if that’s where it needs to go. AI, on the other hand, has no will. It’s just a program that follows instructions, and those instructions come from us.

That means AI always requires human guidance to be useful. Without it, it’s just text and tokens: powerful, but aimless.

Here are some concrete implications from my experience:

- No intrinsic goals: AI doesn’t know why it’s doing something. It does what it’s told (and sometimes what it infers).

- Context-dependency: If the context is missing or inconsistent, the AI will make assumptions, and often wrong ones.

- Scope limitations: An LLM might handle thousands of tokens, but it doesn’t have unlimited memory of your world; it needs structure to keep on track.

That’s why, in both of my projects, I build in databases, metadata, and human oversight. AI still does the heavy lifting (suggestions, code, text), and humans supply the meaning, structure, and final polish.

Problems with AI: Math

One of the first problems you notice when working with AI is that it can’t really do math.

Large Language Models (LLMs) like ChatGPT or Gemini aren’t calculators. They don’t “solve” equations the way a human or a program designed for mathematics would. Instead, they generate words based on patterns they’ve learned from vast amounts of text.

This means that when you ask an AI to add numbers or perform a calculation, it’s not actually reasoning through the math; it’s predicting what a correct-looking answer might be. The result can sound perfectly confident and well-formatted, but still be entirely wrong. This tendency to produce plausible but incorrect results highlights one of AI’s biggest limitations: it excels at language, not logic or precision.

Solutions

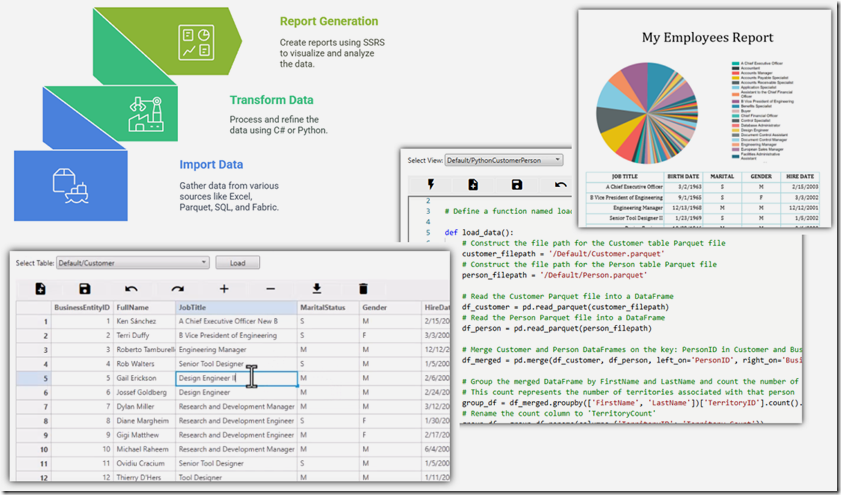

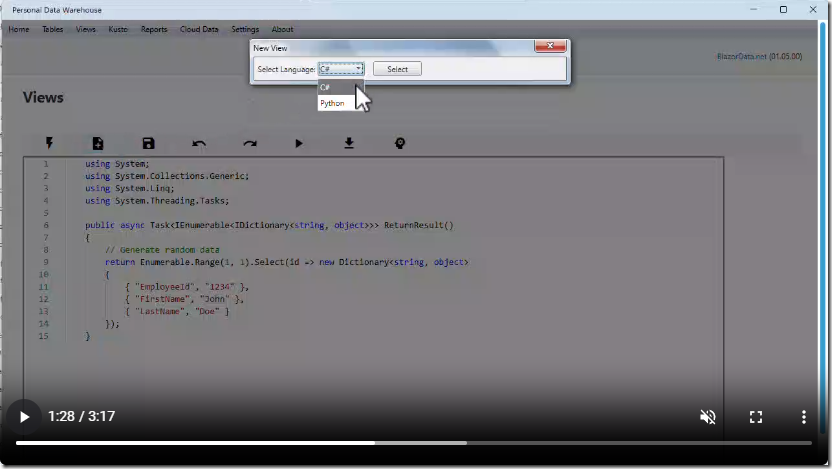

In the Personal Data Warehouse, rather than asking the AI to compute the numbers directly, I ask it to generate code (in C# or Python), which is then executed to compute the math correctly.

This application allows you to import your data, transform it using AI to perform calculations, and report and export the results.
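Here is a minimal sketch of that pattern. It is not the Personal Data Warehouse's actual implementation: the string `generated_source` stands in for code an LLM might return, and `run_generated_code` is a hypothetical helper. The point is that the arithmetic is done by the Python interpreter, so the answer does not depend on the model predicting the right digits.

```python
# Hypothetical stand-in for code an LLM might return when asked
# "what is the total of these order amounts?"
generated_source = '''
def compute_total(amounts):
    # Plain Python arithmetic: deterministic and exact for integers
    return sum(amounts)
'''


def run_generated_code(source, function_name, *args):
    """Execute generated source in its own namespace and call one function.

    Note: exec() runs arbitrary code. A real system should review or sandbox
    generated code before running it.
    """
    namespace = {}
    exec(source, namespace)
    return namespace[function_name](*args)


order_amounts = [1299, 457, 88021, 3]
total = run_generated_code(generated_source, "compute_total", order_amounts)
print(total)  # 89780
```

The model's job here is limited to writing `compute_total`. If the generated code is wrong, that is a bug you can read, test, and fix, which is much easier than spotting a confidently stated wrong number.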

You can see a video of the application at this link.

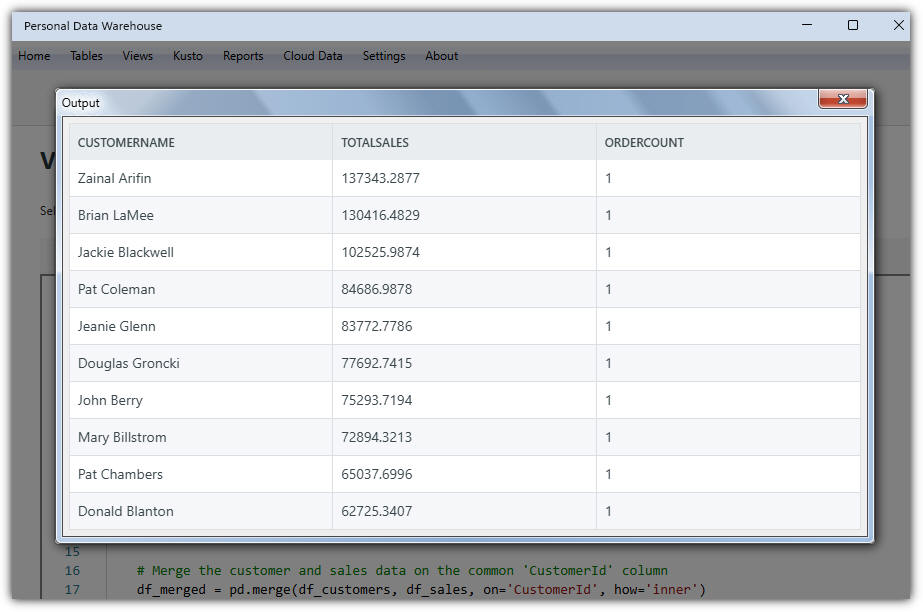

An example of how the application works

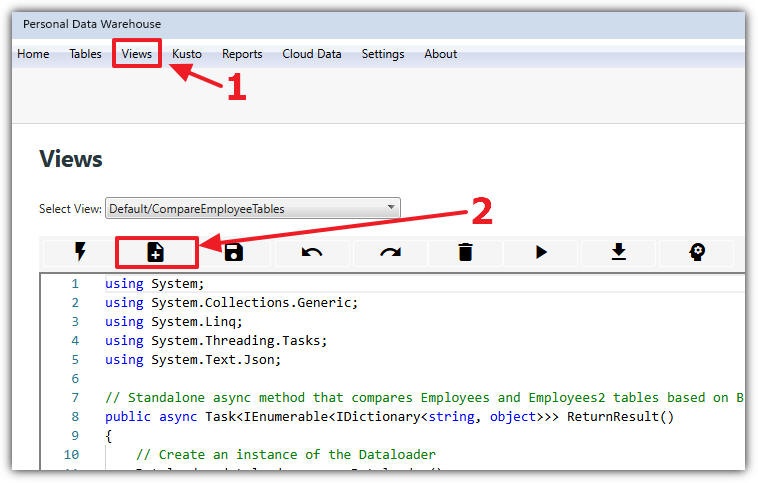

We can go to the Views page and click New.

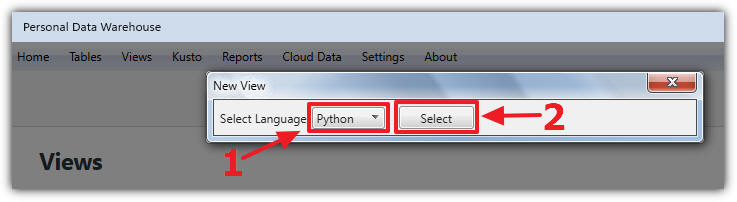

Choose Python as the language and click Select.

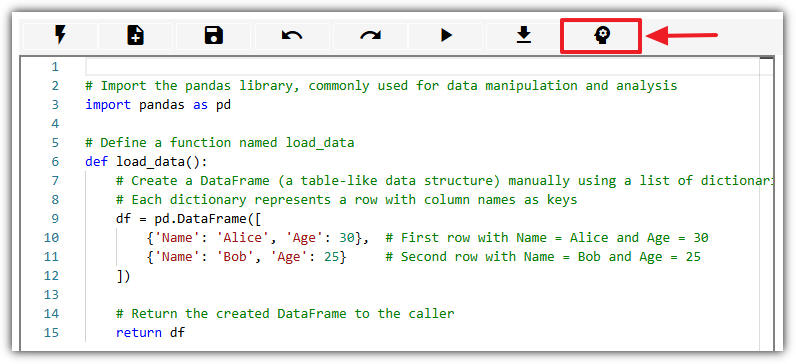

If AI is enabled, click the AI button.

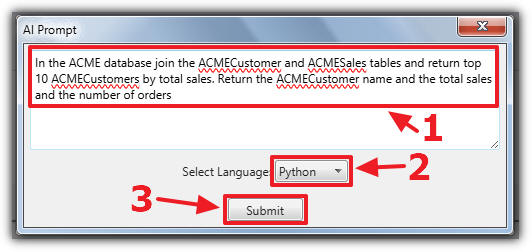

Enter the following prompt:

In the ACME database, join the ACMECustomer and ACMESales tables. Return the top 10 customers by total sales. Include customer name, total sales, and the number of orders.

The AI will generate code similar to this:

    # Import the pandas library used for data manipulation
    import pandas as pd

    # Define a function named load_data
    def load_data():
        # Construct file paths for the ACME Customer and Sales tables
        customers_filepath = '/ACME/Customer.parquet'
        sales_filepath = '/ACME/Sales.parquet'

        # Read the ACME Customer table from its Parquet file
        df_customers = pd.read_parquet(customers_filepath)

        # Read the ACME Sales table from its Parquet file
        df_sales = pd.read_parquet(sales_filepath)

        # Merge the customer and sales data on the common 'CustomerId' column
        df_merged = pd.merge(df_customers, df_sales, on='CustomerId', how='inner')

        # Convert the 'TotalDue' column to numeric, in case it is stored as a string
        df_merged['TotalDue'] = pd.to_numeric(df_merged['TotalDue'], errors='coerce')

        # Group by customer information to calculate total sales and order count
        df_grouped = df_merged.groupby(['CustomerId', 'FirstName', 'LastName']).agg(
            TotalSales=('TotalDue', 'sum'),       # Sum of TotalDue for each customer
            OrderCount=('SalesOrderID', 'count')  # Count of orders for each customer
        ).reset_index()

        # Create a full customer name by concatenating FirstName and LastName
        df_grouped['CustomerName'] = df_grouped['FirstName'] + ' ' + df_grouped['LastName']

        # Sort the customers by TotalSales in descending order to get the highest total sales at the top
        df_sorted = df_grouped.sort_values(by='TotalSales', ascending=False)

        # Select the top 10 customers
        top10_customers = df_sorted.head(10)

        # Select only the desired columns for the final output
        result_df = top10_customers[['CustomerName', 'TotalSales', 'OrderCount']]

        # Return the resulting DataFrame
        return result_df

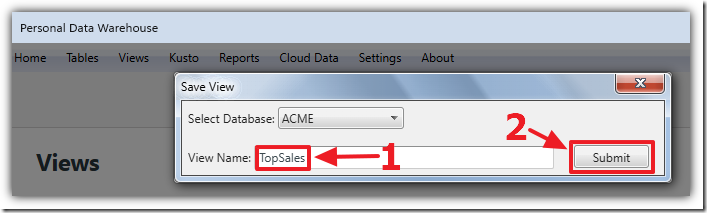

Select the ACME database, name the script TopSales, and click Submit.

The total sales figures will be correct, provided the generated code itself is right, because the AI was never asked to do the arithmetic. It only had to write a program, and running that program performs the calculation.

Basically, LLMs are good at generating code snippets to answer a mathematical question, but not reliable for raw math calculations.

Problems with AI: Writing Fiction Stories

Another big challenge is writing fictional stories. AI struggles with long-form coherence and consistency across an extended narrative.

It might describe a character as blonde in one chapter, and then later say they have brown hair. Or it might create plot holes, like having an illiterate character suddenly write a letter.

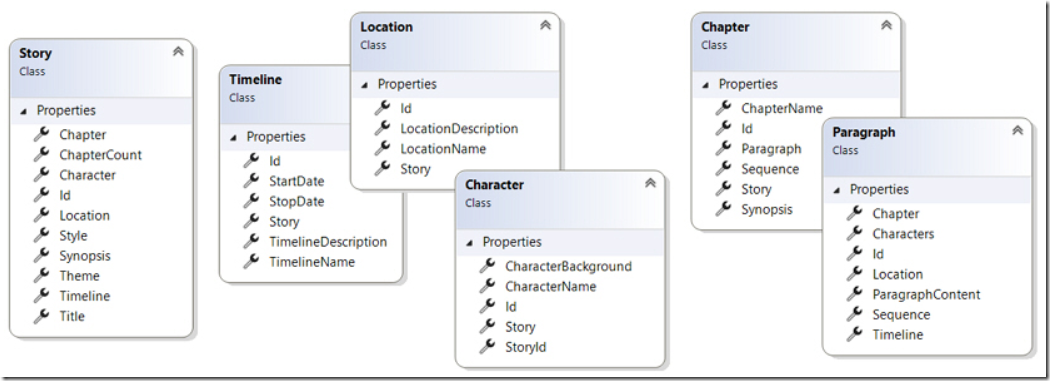

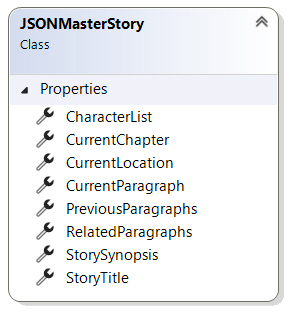

That’s why I built AIStoryBuilders. It uses a proprietary database that tracks Locations, Characters, Chapters, and Sections.

For example, each paragraph (Section) is associated with a Timeline, Location, and one or more Characters.

This allows the AI to stay on track with the story and properly handle things like flashbacks or variations in narrative flow.
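To make the idea concrete, here is a small sketch of what such anchors could look like. The class and field names (`Character`, `Section`, `attributes`, `find_conflicts`) are my own illustrative assumptions, not the actual AIStoryBuilders schema.

```python
from dataclasses import dataclass, field


@dataclass
class Character:
    name: str
    attributes: dict = field(default_factory=dict)  # e.g. {"hair": "blonde"}


@dataclass
class Section:
    """One paragraph of the story, anchored to who, when, and where."""
    text: str
    timeline: str
    location: str
    characters: list = field(default_factory=list)


def find_conflicts(character, claimed):
    """Return attributes in `claimed` that contradict the stored character."""
    return {
        key: (character.attributes[key], value)
        for key, value in claimed.items()
        if key in character.attributes and character.attributes[key] != value
    }


anna = Character("Anna", {"hair": "blonde", "literate": False})
section = Section(
    text="Anna waited at the docks.",
    timeline="Present day",
    location="Harbor",
    characters=[anna],
)

# A draft that gives Anna brown hair contradicts the stored metadata
conflicts = find_conflicts(anna, {"hair": "brown"})
print(conflicts)  # {'hair': ('blonde', 'brown')}
```

Once facts like hair color or literacy live in a record instead of only in earlier prose, they can be handed to the AI with every request and checked afterwards.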

What’s helpful about this approach:

- Metadata-rich structure: By giving the AI concrete anchors (who, when, where), you minimize hallucinations or contradictions.

- Flexible workflow: You can add new details mid-story and the system will integrate them.

- Iterative writing: The writer remains in control, guiding tone, revisions, and direction. The AI doesn’t replace the author.

I often say: The AI becomes a reliable collaborator, not a chaotic co-author. It gives suggestions and drafts, but you steer the ship.

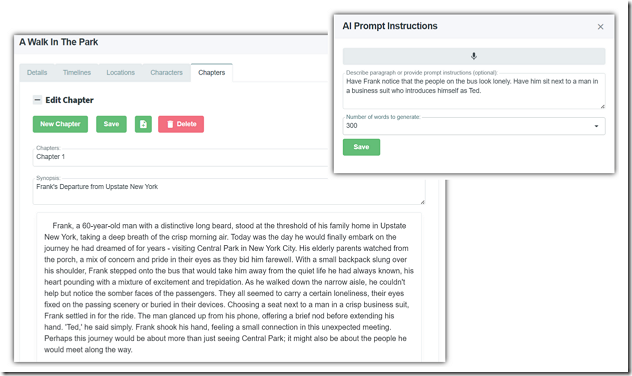

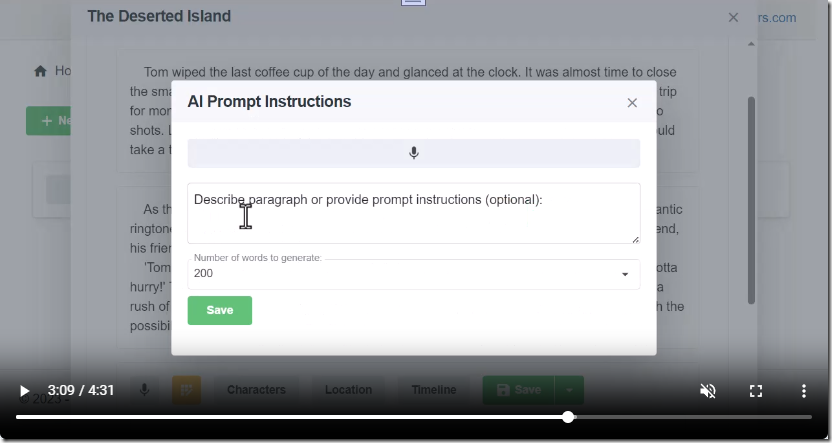

AI Story Builders in Practice

Here’s how AIStoryBuilders works in practice:

When you want to generate or edit a paragraph, you hit the AI button.

The system gathers:

- the metadata (current character states, location, timeline)

- the recent paragraphs of story (to maintain flow)

- any relevant context (prior events, character goals)

It then builds a Master Story Object, a structured prompt combining all this information, and sends that to the AI.
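As a rough illustration of that step, the sketch below assembles a structured prompt from the three inputs. The function name, the JSON layout, and the instruction text are assumptions made for this example; the real Master Story Object may be organized differently.

```python
import json


def build_master_story_object(metadata, recent_paragraphs, context, max_recent=3):
    """Combine story metadata, recent text, and context into one prompt string."""
    story_object = {
        "metadata": metadata,
        # Only the most recent paragraphs, to maintain flow
        "recent_paragraphs": recent_paragraphs[-max_recent:],
        "context": context,
    }
    instructions = (
        "Write the next paragraph of the story. "
        "Stay consistent with the JSON below.\n"
    )
    return instructions + json.dumps(story_object, indent=2)


prompt = build_master_story_object(
    metadata={"character": "Anna", "location": "Harbor", "timeline": "Present day"},
    recent_paragraphs=[
        "The storm arrived at dawn.",
        "Anna tied the boat to the pier.",
        "She waited for the ferry that never came.",
        "By noon the harbor was empty.",
    ],
    context=["Anna cannot read."],
)
print(prompt)
```

Sending a structured object rather than free-form text makes it obvious what the AI was told, which helps when a draft goes wrong and you need to see which fact was missing.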

From there:

- The AI returns a draft paragraph.

- You review or revise it.

- The system updates the database as needed (new characters, location shifts, timeline changes).

- The next paragraph starts with fresh context.

To make all this work, I needed to create a database and a program that would let you manage all that information. The design of the database is very important because it determines what the program can do. It was at this point that I had to draw on my years of writing long-form fiction to ensure it would capture the important elements of any story.

You can see a video of the application at this link.

The benefits are:

- Coherence: Because you’re grounding the AI in the story world.

- Reducing repetition: Because new paragraphs reference previous content, avoiding “Didn’t I already say that?”.

- Iterative control: Because you can always stop, revise, adjust the metadata, then prompt again.

But it’s not perfect:

- The AI still has token limits (so extremely long context becomes expensive).

- A human editor is still required to polish tone, resolve deeper plot issues, and ensure emotional resonance.

- It’s a tool, not the author; the creative direction remains yours.
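One common way to live with token limits is to send only as much recent text as fits a budget. The sketch below is an assumption about how that could be done, not a description of AIStoryBuilders; in particular, `estimate_tokens` counts words as a crude proxy, where a real system would use the model's own tokenizer.

```python
def estimate_tokens(text):
    """Very rough proxy: one token per whitespace-separated word."""
    return len(text.split())


def select_recent_paragraphs(paragraphs, token_budget):
    """Keep the newest paragraphs whose combined estimate fits the budget."""
    selected = []
    used = 0
    for paragraph in reversed(paragraphs):  # newest first
        cost = estimate_tokens(paragraph)
        if used + cost > token_budget:
            break
        selected.append(paragraph)
        used += cost
    selected.reverse()  # restore story order
    return selected


paragraphs = [
    "The storm arrived at dawn.",                 # 5 words
    "Anna tied the boat to the pier.",            # 7 words
    "She waited for the ferry that never came.",  # 8 words
]
kept = select_recent_paragraphs(paragraphs, token_budget=16)
print(kept)  # the two newest paragraphs, in story order
```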

Problems with AI: Creating Applications

AI can feel almost magical when it comes to code generation. It can scaffold a page, wire up APIs, and even create database access layers in seconds.

But ask it to build an entire working application, something that runs reliably, scales properly, and integrates across multiple services, and the magic quickly fades.

The problem isn’t syntax. It’s architecture. AI doesn’t understand system design. It imitates patterns it’s seen before, but it can’t reason about dependencies, configuration, orchestration, or deployment pipelines. The result is often a tangle of half-working parts that collapse when connected together.

That became clear when I wrote a blog post and recorded a video about building an RFP Responder, an AI-driven system that automates the generation of responses to requests for proposals (RFPs).

The concept seemed simple, but, in practice, it revealed the limits of what AI can actually build.

You can see a video of the entire process at this link.

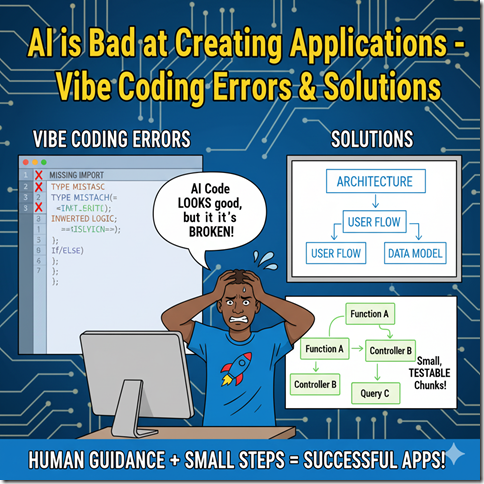

Missing Architectural Awareness

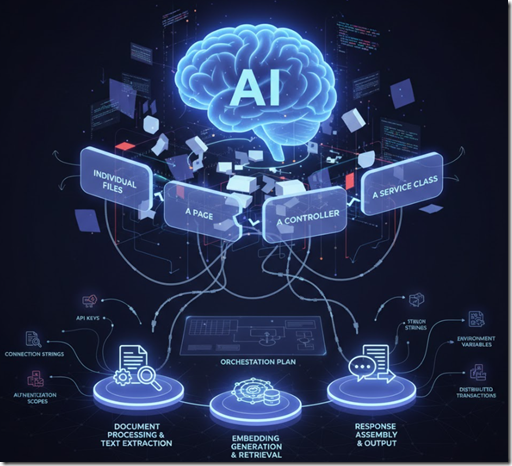

When asked to “build a Blazor app that reads RFPs and generates responses,” AI can output individual files, a page, a controller, and a service class.

But it has no awareness of the infrastructure beneath.

To work, the RFP Responder requires multiple coordinated services:

- One for document processing and text extraction

- One for embedding generation and retrieval

- One for response assembly and output

Without a defined orchestration plan, these services never line up. AI doesn’t know which service should start first, or how connection strings and API keys should propagate between them. It doesn’t handle environment variables, authentication scopes, or distributed transactions.

Lesson: AI can’t design orchestration. Humans must define startup order, dependency flow, and integration boundaries.
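Here is a minimal sketch of what "defining startup order" can mean in code. The service names are hypothetical labels for the three services listed above, and the approach (a topological sort over a human-written dependency map, using Python's standard `graphlib` module) is one option among many, not how the RFP Responder is actually wired.

```python
from graphlib import TopologicalSorter

# Human-defined blueprint: each (hypothetical) service maps to the
# services that must be running before it starts.
dependencies = {
    "document_processing": set(),
    "embedding": {"document_processing"},
    "response_assembly": {"embedding"},
}

# static_order() yields every service after all of its dependencies
startup_order = list(TopologicalSorter(dependencies).static_order())
print(startup_order)
# ['document_processing', 'embedding', 'response_assembly']
```

The dependency map is the part a human has to supply. If it accidentally contains a cycle, `static_order()` raises `graphlib.CycleError`, which surfaces the design mistake before anything is deployed.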

Integration Without Understanding

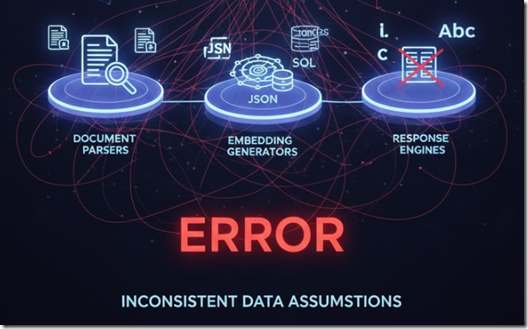

The RFP Responder depends on multiple components talking to one another: document parsers, embedding generators, and response engines.

Each has to agree on how data is stored and exchanged.

When left entirely to AI, these modules often make inconsistent assumptions:

- One might save embeddings as JSON, another expects SQL tables.

- One might lowercase identifiers, another requires case-sensitive lookups.

Each component looks correct in isolation, yet nothing works together.

Lesson: AI builds fragments; humans weave the fabric. Integration still requires schema alignment, API contracts, and shared logic.
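One way to enforce that alignment is a single shared record type that every component must use when writing or reading embeddings. The sketch below is illustrative only: `EmbeddingRecord`, `to_row`, `from_row`, and the lowercase-identifier rule are assumptions for this example, not the RFP Responder's actual contract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmbeddingRecord:
    """Shared contract for anything that writes or reads embeddings."""
    chunk_id: str
    vector: tuple

    def __post_init__(self):
        if not self.vector:
            raise ValueError("vector must not be empty")
        # One agreed identifier convention: trimmed and lowercase.
        # object.__setattr__ is needed because the dataclass is frozen.
        object.__setattr__(self, "chunk_id", self.chunk_id.strip().lower())
        object.__setattr__(self, "vector", tuple(float(x) for x in self.vector))


def to_row(record):
    """Single agreed storage format, used by every writer."""
    return {"chunk_id": record.chunk_id, "vector": list(record.vector)}


def from_row(row):
    """Single agreed way to read a stored row back."""
    return EmbeddingRecord(row["chunk_id"], row["vector"])


record = EmbeddingRecord(" RFP-001-A ", [0.1, 0.2])
print(record.chunk_id)                     # rfp-001-a
print(from_row(to_row(record)) == record)  # True
```

With the convention written down once, you can hand the contract to the AI along with each request for a new component, instead of letting each generated module invent its own format.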

Context and Data Management

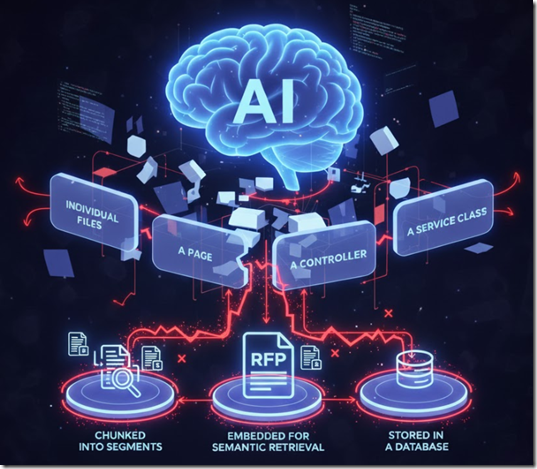

RFPs are long and complex documents. To generate useful responses, the system needs context, and context is not self-managing.

In the RFP Responder, each document is:

- Chunked into segments

- Embedded for semantic retrieval

- Stored in a database

- Queried by similarity at runtime

AI can write the code for each of those steps, but it doesn’t know how to connect them into a repeatable pipeline. Without human design, you end up with scripts that run once and fail the next time.

Lesson: AI doesn’t manage data pipelines. It must be told what to process, how to store it, and when to retrieve it.
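The four steps above can be sketched as one small, repeatable pipeline. Everything here is a toy stand-in chosen so the example runs on its own: the "embedding" is a word count rather than a real embedding model, the "database" is a dictionary, and the function names are hypothetical.

```python
import math
from collections import Counter


def chunk(text):
    """Toy chunker: one segment per sentence."""
    return [s.strip() for s in text.split(".") if s.strip()]


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(word.strip(".,?").lower() for word in text.split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def ingest(store, doc_id, text):
    """Chunk, embed, and store. Keys are deterministic, so re-running overwrites."""
    for index, segment in enumerate(chunk(text)):
        store[f"{doc_id}:{index}"] = (segment, embed(segment))


def query(store, question, top_k=1):
    """Return the stored segments most similar to the question."""
    q = embed(question)
    ranked = sorted(store.items(), key=lambda item: cosine(q, item[1][1]), reverse=True)
    return [(key, segment) for key, (segment, _vector) in ranked[:top_k]]


store = {}
rfp_text = (
    "The vendor must provide 24/7 support. "
    "Pricing must be submitted in US dollars. "
    "Delivery is required within 90 days."
)
ingest(store, "rfp-1", rfp_text)
ingest(store, "rfp-1", rfp_text)  # running again does not duplicate anything
results = query(store, "How should pricing be submitted?")
print(len(store), results)
```

The design choice that makes this repeatable is the deterministic key (`doc_id:index`): a second run replaces records instead of appending to them. That is the kind of decision a human has to make up front.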

Human Oversight and Verification

In the RFP Responder, certain steps — like “Load RFPs,” “Generate Embeddings,” and “Assemble Proposal” — still require human initiation.

That’s intentional. It ensures oversight before automation runs.

If AI-generated code operated fully unsupervised, it could silently create corrupted data — invalid vectors, mismatched IDs, or duplicated records.

By keeping humans “in the loop,” the process remains traceable and reliable.

Lesson: AI automation needs checkpoints. Verification isn’t optional; it’s engineering.
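A checkpoint can be as simple as a function that inspects a batch before it is committed and reports anything suspicious for a human to review. The sketch below checks for the three failure modes mentioned above; the function name, the record layout, and the sample IDs are assumptions for illustration.

```python
import math


def verify_embeddings(records, known_chunk_ids, expected_dim):
    """Return a list of problems found; an empty list means the batch looks safe."""
    problems = []
    seen = set()
    for chunk_id, vector in records:
        if chunk_id in seen:
            problems.append(f"duplicate record: {chunk_id}")
        seen.add(chunk_id)
        if chunk_id not in known_chunk_ids:
            problems.append(f"unknown chunk id: {chunk_id}")
        if len(vector) != expected_dim or not all(math.isfinite(x) for x in vector):
            problems.append(f"invalid vector: {chunk_id}")
    return problems


batch = [
    ("rfp-1:0", [0.1, 0.2, 0.3]),
    ("rfp-1:0", [0.1, 0.2, 0.3]),           # duplicated record
    ("rfp-9:4", [0.5, 0.1, 0.0]),           # id with no matching chunk
    ("rfp-1:1", [0.4, float("nan"), 0.2]),  # invalid vector
]
problems = verify_embeddings(batch, {"rfp-1:0", "rfp-1:1"}, expected_dim=3)

if problems:
    print("Stopping for human review:")
    for problem in problems:
        print(" -", problem)
```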

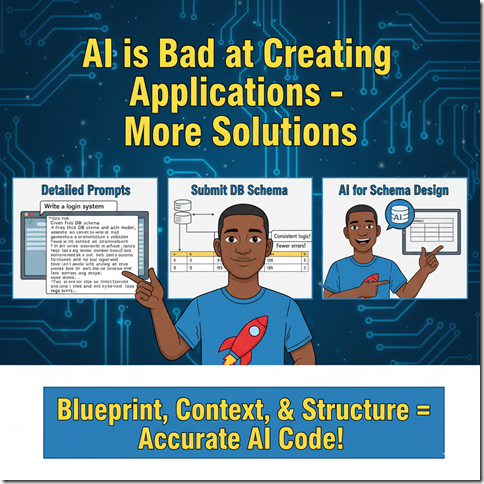

The Real Solution: Human-Defined Blueprints

The ultimate takeaway is this:

AI can generate, but it cannot orchestrate.

Before you ask an AI to build something, you must already have a human-defined blueprint: the architecture, data flow, and integration pattern. Once that exists, AI can fill in code for each piece.

This is the same philosophy behind my projects:

- AIStoryBuilders provides narrative structure and metadata before prompting the AI.

- Personal Data Warehouse defines tables and schemas before asking the AI to generate code.

In both cases, AI performs best when the human designs the system and the AI fills the gaps.

AI doesn’t fail at coding; it fails at coordination.

Building real applications requires an understanding of sequence, dependencies, testing, error handling, deployment, and maintenance, things that emerge only from human design thinking.

So when AI gets it wrong, it’s not proof that AI is weak. It’s proof that architecture still matters.

Closing Thoughts

So, to wrap up…

AI is powerful, but it’s not magic. It works best when guided by humans who provide structure, context, and oversight. My projects — AIStoryBuilders and Personal Data Warehouse — are examples of how we can build tools that overcome AI’s weaknesses and make it a true collaborator.

The future isn’t about AI replacing us. The future is about humans and AI working together — each doing what they do best.

AI is only as creative, accurate, or productive as the human behind it. When we give it direction, context, and purpose, it can do incredible things — from writing novels to building applications. But without us, it’s just a collection of probabilities.