1/28/2023 Admin

Playing Dynamic Audio In Server Side Blazor

(Note: YouTube video is at: https://www.youtube.com/watch?v=TRgYmTNmXT8&t=33s)

You can play dynamic audio in a Blazor Server application and have full control over the user interface.

<audio controls="controls"><source src="https://bit.ly/404yhS9" type="audio/mp3"></audio>

Playing an audio file in Blazor is simple. Just enter a tag like this, with the source pointing to an MP3 file.

When you run the application, an audio player will appear and the file will be playable using the controls.

Dynamic Audio

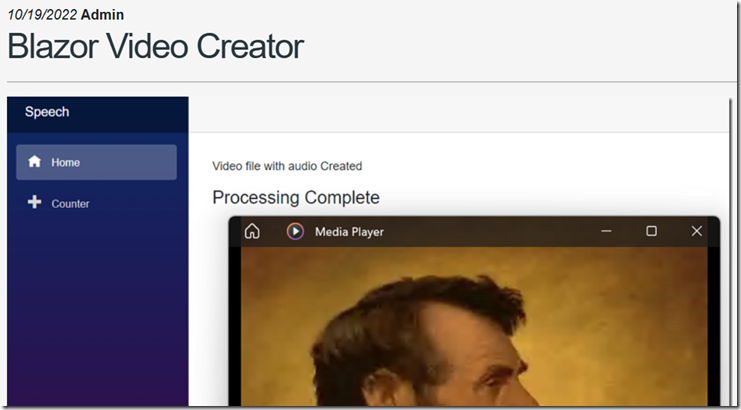

To demonstrate how to play audio created by a dynamic source, we will start with the code from the article Blazor Video Creator available on BlazorHelpWebsite.com.

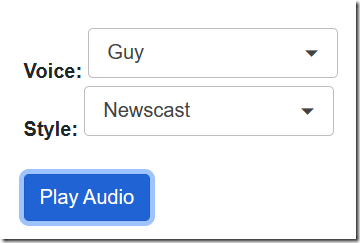

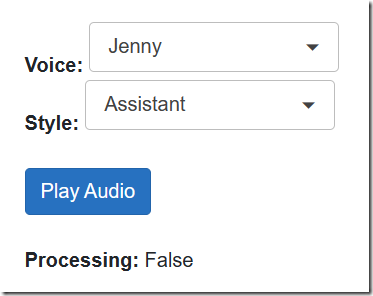

    <b>Voice:</b>
    <RadzenDropDown Data="@ColVoices"
                    @bind-Value="@SelectedVoiceId"
                    TextProperty="VoiceName"
                    ValueProperty="Id"
                    Change=@(args => OnChange(args, "DropDown"))
                    Style="width:200px" />
    <br />
    <b>Style:</b>
    <RadzenDropDown Data="@ColStyles.Where(x => x.VoiceIdNumber == SelectedVoiceId)"
                    @bind-Value="@SelectedStyleId"
                    TextProperty="StyleName"
                    ValueProperty="Id"
                    Style="width:200px" />
    <br />
    <br />
    <button class="btn btn-primary"
            @onclick="(() => LoadAudio())">
        Play Audio
    </button>
    <br />
    <br />
    <p><b>Processing:</b> @Processing</p>

We start with this UI markup.

This contains two dropdowns, one for the voice and one for the voice style.

When the Play Audio button is clicked, the Processing label will turn to True.

During this period, a REST-based call is made to the Microsoft Cognitive Services endpoint at tts.speech.microsoft.com to request an audio file for the selected voice, in the selected style, saying the words "This is an audio test".

The audio is returned as a byte array and played in the browser.
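To make the shape of that REST call concrete, here is a minimal sketch that only builds the request and does not send it. The helper name `BuildSpeechRequest`, the `eastus` region, and the placeholder token are assumptions for illustration; the headers and URL pattern are the ones used by the article's own code further down.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Hypothetical helper: assembles the POST request for the speech endpoint.
static HttpRequestMessage BuildSpeechRequest(string region, string token, string ssml)
{
    var message = new HttpRequestMessage(
        HttpMethod.Post,
        $"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1");

    // The bearer token comes from the issueToken endpoint (not called here).
    message.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
    message.Headers.Add("User-Agent", "curl");
    message.Headers.Add("X-Microsoft-OutputFormat", "audio-16khz-128kbitrate-mono-mp3");

    // The body is the SSML document describing what to say and how.
    message.Content = new StringContent(ssml, Encoding.UTF8, "application/ssml+xml");
    return message;
}

// Example values only: region and token are placeholders.
using var request = BuildSpeechRequest("eastus", "placeholder-token", "<speak/>");
Console.WriteLine(request.RequestUri);
Console.WriteLine(request.Content?.Headers.ContentType);
```

Setting the headers on the request message, rather than on `DefaultRequestHeaders`, is one way to keep them scoped to a single call if the `HttpClient` is ever shared.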

The Code

    // Load the voices
    ColVoices = new List<Voices>();
    ColVoices.Add(new Voices() { Id = 1, VoiceId = "en-US-JennyNeural", VoiceName = "Jenny" });
    ColVoices.Add(new Voices() { Id = 2, VoiceId = "en-US-JaneNeural", VoiceName = "Jane" });
    ColVoices.Add(new Voices() { Id = 3, VoiceId = "en-US-DavisNeural", VoiceName = "Davis" });
    ColVoices.Add(new Voices() { Id = 4, VoiceId = "en-US-GuyNeural", VoiceName = "Guy" });

For the Voice dropdown we create a simple collection of the possible voices.

    // Load the styles
    ColStyles = new List<VoiceStyles>();

    // en-US-JennyNeural
    ColStyles.Add(new VoiceStyles() { Id = 1, VoiceIdNumber = 1, StyleId = "assistant", StyleName = "Assistant" });
    ColStyles.Add(new VoiceStyles() { Id = 2, VoiceIdNumber = 1, StyleId = "chat", StyleName = "Chat" });
    ColStyles.Add(new VoiceStyles() { Id = 3, VoiceIdNumber = 1, StyleId = "customerservice", StyleName = "Customer Service" });
    ColStyles.Add(new VoiceStyles() { Id = 4, VoiceIdNumber = 1, StyleId = "newscast", StyleName = "Newscast" });
    ColStyles.Add(new VoiceStyles() { Id = 5, VoiceIdNumber = 1, StyleId = "angry", StyleName = "Angry" });
    ColStyles.Add(new VoiceStyles() { Id = 6, VoiceIdNumber = 1, StyleId = "cheerful", StyleName = "Cheerful" });
    ColStyles.Add(new VoiceStyles() { Id = 7, VoiceIdNumber = 1, StyleId = "sad", StyleName = "Sad" });
    ColStyles.Add(new VoiceStyles() { Id = 8, VoiceIdNumber = 1, StyleId = "excited", StyleName = "Excited" });
    ColStyles.Add(new VoiceStyles() { Id = 9, VoiceIdNumber = 1, StyleId = "friendly", StyleName = "Friendly" });
    ColStyles.Add(new VoiceStyles() { Id = 10, VoiceIdNumber = 1, StyleId = "terrified", StyleName = "Terrified" });
    ColStyles.Add(new VoiceStyles() { Id = 11, VoiceIdNumber = 1, StyleId = "shouting", StyleName = "Shouting" });
    ColStyles.Add(new VoiceStyles() { Id = 12, VoiceIdNumber = 1, StyleId = "unfriendly", StyleName = "Unfriendly" });
    ColStyles.Add(new VoiceStyles() { Id = 13, VoiceIdNumber = 1, StyleId = "whispering", StyleName = "Whispering" });
    ColStyles.Add(new VoiceStyles() { Id = 14, VoiceIdNumber = 1, StyleId = "hopeful", StyleName = "Hopeful" });

Each voice has a list of associated styles. This is the collection created for the Jenny Neural voice. We associate each style with its voice using the VoiceIdNumber property.

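    void OnChange(object value, string name)
    {
        // Reset SelectedStyleId to the first style of the newly selected voice
        int voiceId = (int)value;

        // The null-conditional guards against a voice that has no styles
        SelectedStyleId =
            ColStyles.FirstOrDefault(x => x.VoiceIdNumber == voiceId)?.Id ?? 0;
    }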

When a voice is changed in the dropdown, the OnChange event is fired. It resets the selected style to the first style associated with the newly selected voice.

The style dropdown, whose data is filtered by the selected voice, then displays only the styles for that voice.
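The voice-to-style lookup can be tried outside Blazor. The following is a minimal console sketch, not the article's code: the `VoiceStyle` record, the `FirstStyleIdFor` helper, and the style with Id 15 for a second voice are all hypothetical names and data chosen for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Sample data: two styles for voice 1 and one (hypothetical) style for voice 2.
var styles = new List<VoiceStyle>
{
    new(1, 1, "assistant"),
    new(2, 1, "chat"),
    new(15, 2, "angry"),
};

// Returns the Id of the first style linked to the voice, or null if there is none.
static int? FirstStyleIdFor(IEnumerable<VoiceStyle> all, int voiceId) =>
    all.Where(s => s.VoiceIdNumber == voiceId)
       .Select(s => (int?)s.Id)
       .FirstOrDefault();

Console.WriteLine(FirstStyleIdFor(styles, 1));          // 1
Console.WriteLine(FirstStyleIdFor(styles, 2));          // 15
Console.WriteLine(FirstStyleIdFor(styles, 9) is null);  // True

// Hypothetical stand-in for the article's VoiceStyles class.
record VoiceStyle(int Id, int VoiceIdNumber, string StyleId);
```

Projecting to `int?` before calling `FirstOrDefault` makes the "no styles for this voice" case return null instead of throwing, which is one way to keep the reset logic safe as more voices are added.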

Playing the Dynamic Audio

    // Returning Task (rather than void) lets Blazor observe exceptions from the handler
    async Task LoadAudio()
    {
        // Set the processing flag
        Processing = true;

        SubscriptionKey = _configuration["MicrsosofCognitiveServices:SubscriptionKey"];
        SpeechRegion = _configuration["MicrsosofCognitiveServices:SpeechRegion"];
        TokenUri = $"https://{SpeechRegion}.api.cognitive.microsoft.com/sts/v1.0/issueToken";
        DestinationURL = $"https://{SpeechRegion}.tts.speech.microsoft.com/cognitiveservices/v1";

        // Get selected Voice and Style
        var SelectedVoice = ColVoices.Where(x => x.Id == SelectedVoiceId).FirstOrDefault();
        var SelectedStyle = ColStyles.Where(x => x.Id == SelectedStyleId).FirstOrDefault();

        // Get the bytes for the audio file
        var bytes = await CreateAudioFile(
            "This is an audio test.",
            SelectedVoice.VoiceId, SelectedStyle.StyleId);

        if (bytes != null)
        {
            // Put the bytes in a memory stream
            var stream = new MemoryStream(bytes);

            // Play the audio file
            using var streamRef = new DotNetStreamReference(stream: stream);
            await JS.InvokeVoidAsync("PlayAudioFileStream", streamRef);
        }
        else
        {
            Error = "Error creating audio file.";
        }

        // Reset the processing flag
        Processing = false;

        // Force a UI refresh
        StateHasChanged();
    }

When the Play Audio button is pressed, the LoadAudio method is called.

This gets the selected voice and style, gets the bytes for the audio file, puts the bytes in a memory stream, and plays the audio file.
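One detail worth considering in a flow like this is what happens to the processing flag if a step throws. The sketch below is an assumption-laden illustration rather than the article's code: `UiState` and `RunWithProcessingFlagAsync` are hypothetical names, and a short delay followed by a simulated exception stands in for the speech request.

```csharp
using System;
using System.Threading.Tasks;

var state = new UiState();

await RunWithProcessingFlagAsync(state, async () =>
{
    await Task.Delay(10);  // stands in for the call to the speech service
    throw new InvalidOperationException("simulated failure");
});

Console.WriteLine(state.Processing);  // False
Console.WriteLine(state.Error);       // simulated failure

// Sets the flag, runs the work, and always clears the flag afterwards.
static async Task RunWithProcessingFlagAsync(UiState ui, Func<Task> work)
{
    ui.Processing = true;
    try
    {
        await work();
    }
    catch (Exception ex)
    {
        ui.Error = ex.Message;   // surface the failure instead of losing it
    }
    finally
    {
        ui.Processing = false;   // runs on success and on failure
    }
}

// Hypothetical stand-in for the component's Processing and Error fields.
class UiState
{
    public bool Processing;
    public string Error = "";
}
```

Resetting the flag in a `finally` block means the Processing label cannot get stuck on True when the request fails.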

Notice that to play the audio, it wraps the memory stream in a DotNetStreamReference and passes it to the JavaScript PlayAudioFileStream function using JS.InvokeVoidAsync.

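    <script>
        window.PlayAudioFileStream = async (contentStreamReference) => {
            // Read the .NET stream into an ArrayBuffer
            const arrayBuffer = await contentStreamReference.arrayBuffer();

            // Tag the blob as MP3; the audio element itself has no type property
            const blob = new Blob([arrayBuffer], { type: 'audio/mpeg' });
            const url = URL.createObjectURL(blob);

            // Create a temporary audio element and play it
            var sound = document.createElement('audio');
            sound.src = url;
            document.body.appendChild(sound);
            sound.load();
            sound.play();

            // Clean up the element and the object URL when playback ends
            sound.onended = function () {
                document.body.removeChild(sound);
                URL.revokeObjectURL(url);
            };
        }
    </script>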

The PlayAudioFileStream method is a JavaScript method contained in the _Layout.cshtml page. This is the key to playing dynamic audio. This method dynamically creates an audio element and plays the audio.

    async Task<byte[]> CreateAudioFile(
        string paramAudioText, string paramSelectedVoice, string paramSelectedStyle)
    {
        byte[] AudioResponse = null;

        try
        {
            Error = "";

            using (var client = new HttpClient())
            {
                // Get an auth token
                client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", SubscriptionKey);
                UriBuilder uriBuilder = new UriBuilder(TokenUri);
                var result = await client.PostAsync(uriBuilder.Uri.AbsoluteUri, null);
                var AuthToken = await result.Content.ReadAsStringAsync();

                // Set Headers
                client.DefaultRequestHeaders.Authorization =
                    new AuthenticationHeaderValue("Bearer", AuthToken);
                client.DefaultRequestHeaders.Add("User-Agent", "curl");
                client.DefaultRequestHeaders.Add(
                    "X-Microsoft-OutputFormat", "audio-16khz-128kbitrate-mono-mp3");

                // Create the SSML
                var ssml = @"<speak version='1.0' xml:lang='en-US' xmlns='http://www.w3.org/2001/10/synthesis' ";
                ssml = ssml + @"xmlns:mstts='http://www.w3.org/2001/mstts'>";
                ssml = ssml + @$"<voice name='{paramSelectedVoice}'>";
                ssml = ssml + @$"<mstts:express-as style='{paramSelectedStyle}'>";
                ssml = ssml + @$"{paramAudioText}";
                ssml = ssml + @"</mstts:express-as>";
                ssml = ssml + @$"</voice>";
                ssml = ssml + @$"</speak>";

                // Call the service
                HttpResponseMessage response =
                    await client.PostAsync(
                        new Uri(DestinationURL),
                        new StringContent(ssml, Encoding.UTF8, "application/ssml+xml"));

                if (response.IsSuccessStatusCode)
                {
                    // Read as a byte array
                    var bytes = await response.Content.ReadAsByteArrayAsync().ConfigureAwait(false);
                    AudioResponse = bytes;
                }
            }
        }
        catch (Exception ex)
        {
            Error = ex.Message;
        }

        return AudioResponse;
    }

For completeness, we also show the CreateAudioFile method, called by the LoadAudio method, that calls the Microsoft Cognitive Services endpoint to get the audio as a byte array.

This uses SSML to instruct the service to return audio in the selected voice and style.
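Because SSML is XML, text containing characters such as `&` or `<` needs escaping before it is placed in the document. As a hedged alternative to string concatenation, the sketch below builds the same structure with `System.Xml.Linq`, which escapes text and attribute values automatically. The `BuildSsml` helper and the sample sentence are assumptions for illustration; the element names and namespaces are the ones from the SSML shown above.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper: builds the speak/voice/express-as document as XML nodes.
static string BuildSsml(string text, string voice, string style)
{
    XNamespace synth = "http://www.w3.org/2001/10/synthesis";
    XNamespace mstts = "http://www.w3.org/2001/mstts";

    var speak = new XElement(synth + "speak",
        new XAttribute("version", "1.0"),
        new XAttribute(XNamespace.Xml + "lang", "en-US"),
        // Declare the mstts prefix so express-as serializes as mstts:express-as
        new XAttribute(XNamespace.Xmlns + "mstts", mstts.NamespaceName),
        new XElement(synth + "voice",
            new XAttribute("name", voice),
            new XElement(mstts + "express-as",
                new XAttribute("style", style),
                text)));  // added as a text node, so it is escaped on output

    return speak.ToString(SaveOptions.DisableFormatting);
}

// Sample input with characters that would break hand-built XML.
var ssml = BuildSsml("Tom & Jerry <live>", "en-US-JennyNeural", "cheerful");
Console.WriteLine(ssml);
```

In the printed result the `&` appears as `&amp;` and the `<` as `&lt;`, so arbitrary user text can be sent without producing malformed SSML.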

Download

The project is available on the Downloads page on this site.

You must have Visual Studio 2022 (or higher) installed to run the code.